Deep learning is everywhere. 2016 was the year in which we saw some huge advancements in the field of deep learning, and 2017 is all set to see many more advanced use cases. With plenty of deep learning libraries out there, the thing that confuses a beginner the most is which library to choose.

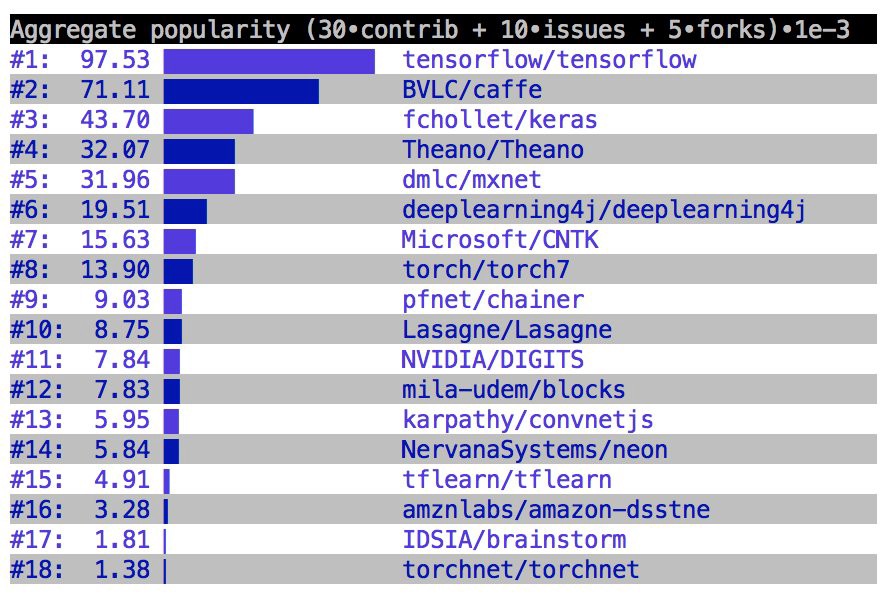

Deep learning libraries/frameworks by popularity (Source: Google)

In this blog post, I am only going to focus on TensorFlow and Keras. This will give you better insight into which one to choose and when. TensorFlow is the most famous library used in production for deep learning models. It has a very large and awesome community. The number of commits, as well as the number of forks, on the TensorFlow GitHub repository is enough to show the widespread popularity of TF (short for TensorFlow). However, TensorFlow is not that easy to use. Keras, on the other hand, is a high-level API built on TensorFlow (it can be used on top of Theano too). It is more user-friendly and easier to use than TF.

If Keras is built on top of TF, what's the difference between the two then? And if Keras is more user-friendly, why should I ever use TF for building deep learning models? The following points will clarify which one you should choose.

Rapid prototyping

If you want to quickly build and test a neural network with minimal lines of code, choose Keras. With Keras, you can build simple or very complex neural networks within a few minutes. The Model and Sequential APIs are powerful enough that you can do almost everything you may want. Let's look at an example below:

    import numpy as np
    from keras.models import Sequential
    from keras.layers import Dense

    # A small binary classifier: one hidden layer, one sigmoid output
    model = Sequential()
    model.add(Dense(32, activation='relu', input_dim=100))
    model.add(Dense(1, activation='sigmoid'))
    model.compile(optimizer='rmsprop',
                  loss='binary_crossentropy',
                  metrics=['accuracy'])

    # Generate dummy data
    data = np.random.random((1000, 100))
    labels = np.random.randint(2, size=(1000, 1))

    # Train the model, iterating on the data in batches of 32 samples
    model.fit(data, labels, epochs=10, batch_size=32)

And you are done with your first model!! So easy!!

Who doesn't love being Pythonic!!

Keras was designed to be user-friendly, and that makes it feel more Pythonic too. Modularity is another elegant guiding principle of Keras. Everything in Keras can be represented as modules, which can then be combined as per the user's requirements.

Flexibility

Sometimes you just don't want to use what is already there; you want to define something of your own (for example a cost function, a metric, a layer, etc.). Keras 2 has been designed so that you can implement almost everything you want, but we all know that low-level libraries provide more flexibility. The same is the case with TF. You can tweak TF much more than Keras.
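As a rough illustration of defining your own cost function, here is a minimal sketch of a hand-written loss plugged into a Keras model. It assumes TensorFlow 2.x with `tf.keras`; the function name `weighted_binary_crossentropy` and its `pos_weight` parameter are hypothetical, made up for this example, and are not part of either library.

```python
import numpy as np
import tensorflow as tf


def weighted_binary_crossentropy(y_true, y_pred, pos_weight=2.0):
    """Hypothetical custom loss: binary cross-entropy that up-weights positives."""
    y_true = tf.cast(y_true, y_pred.dtype)
    # Keep predictions away from 0 and 1 so the logs stay finite.
    eps = 1e-7
    y_pred = tf.clip_by_value(y_pred, eps, 1.0 - eps)
    per_output = -(pos_weight * y_true * tf.math.log(y_pred)
                   + (1.0 - y_true) * tf.math.log(1.0 - y_pred))
    # One loss value per sample.
    return tf.reduce_mean(per_output, axis=-1)


model = tf.keras.Sequential([
    tf.keras.layers.Dense(32, activation="relu"),
    tf.keras.layers.Dense(1, activation="sigmoid"),
])
# The custom function is passed where a built-in loss name would normally go.
model.compile(optimizer="rmsprop", loss=weighted_binary_crossentropy)

# Dummy data, as in the Sequential example earlier in the post.
data = np.random.random((64, 100)).astype("float32")
labels = np.random.randint(2, size=(64, 1)).astype("float32")
history = model.fit(data, labels, epochs=1, batch_size=32, verbose=0)
```

The body of the loss is plain TensorFlow, while the model and the training loop stay in Keras, so you only drop down to the lower level for the piece you actually want to customise.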

Functionality

Although Keras provides all the general-purpose functionality for building deep learning models, it doesn't provide as much as TF. TensorFlow offers more advanced operations than Keras. This comes in very handy if you are doing research or developing some special kind of deep learning model. Some examples of these advanced operations are:

Threading and Queues

Queues are a powerful mechanism for computing tensors asynchronously in a graph. Similarly, you can run multiple threads against the same Session for parallel computation and hence speed up your operations. Here is a simple snippet showing how you can use queues and threads in TensorFlow:

    import tensorflow as tf  # TF 1.x API

    # Create the graph, etc. (train_op is assumed to be defined in this step)
    init_op = tf.global_variables_initializer()

    # Create a session for running operations in the Graph.
    sess = tf.Session()

    # Initialize the variables (like the epoch counter).
    sess.run(init_op)

    # Start input enqueue threads.
    coord = tf.train.Coordinator()
    threads = tf.train.start_queue_runners(sess=sess, coord=coord)

    try:
        while not coord.should_stop():
            # Run training steps or whatever
            sess.run(train_op)
    except tf.errors.OutOfRangeError:
        print('Done training -- epoch limit reached')
    finally:
        # When done, ask the threads to stop.
        coord.request_stop()

    # Wait for threads to finish.
    coord.join(threads)
    sess.close()
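If the coordinator and queue-runner pattern feels opaque, it may help to see the same idea without TensorFlow at all. The sketch below is only an analogy built on the Python standard library (`queue` and `threading`), not the TensorFlow API: a background thread fills a bounded queue while the main loop consumes from it, and a shared stop flag plays roughly the role of `coord.request_stop()`. The names `producer` and `run_training` are made up for this example.

```python
import queue
import threading


def producer(q, stop_event, n_items):
    """Enqueue n_items fake batches, then a None sentinel meaning 'no more data'."""
    for item in list(range(n_items)) + [None]:
        # Retry with a timeout so the thread can notice a stop request
        # even while the queue is full.
        while not stop_event.is_set():
            try:
                q.put(item, timeout=0.1)
                break
            except queue.Full:
                continue


def run_training(n_items=5):
    q = queue.Queue(maxsize=2)       # bounded, like an input queue with limited capacity
    stop_event = threading.Event()   # stand-in for the coordinator's stop flag
    worker = threading.Thread(target=producer, args=(q, stop_event, n_items))
    worker.start()

    processed = []
    try:
        while not stop_event.is_set():
            batch = q.get()
            if batch is None:        # sentinel: the input is exhausted
                break
            processed.append(batch * 2)  # stand-in for a training step
    finally:
        # When done, ask the worker to stop, then wait for it to finish.
        stop_event.set()
        worker.join()
    return processed


print(run_training(5))  # prints [0, 2, 4, 6, 8]
```

The shape is the same as in the TensorFlow snippet: start the enqueue thread, loop until the data runs out, then request a stop and join the thread in a `finally` block so nothing is left running.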

Debugger

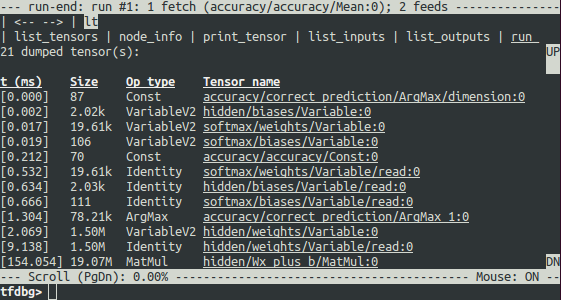

The debugger is another extra power of TF. With TensorFlow, you get a specialized debugger. It provides visibility into the internal structure and states of running TensorFlow graphs. Insights from the debugger can be used to track down various types of bugs during both training and inference.

TensorFlow Debugger snapshot (Source: TensorFlow documentation)

Control

In my experience, the more control you have over your network, the better you understand what's going on inside it. With TF, you get that kind of control. You can control whatever you want in your network. Operations on weights or gradients work like a charm in TF. For example, if there are three variables in your model, say w, b, and step, you can choose whether the variable step should be trainable or not. All you need is a line like this:

step = tf.Variable(1, trainable=False, dtype=tf.int32)

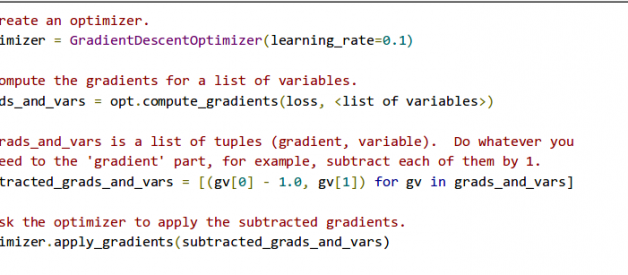

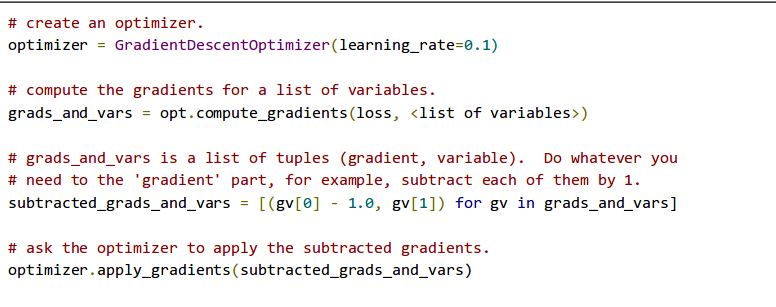

Gradients can give a lot of information during training. Do you have control over them too? Absolutely, check the example below:

Playing with gradients in TensorFlow (Credits: CS 20SI: TensorFlow for Deep Learning Research)
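The example above was originally an image. What follows is not a reproduction of it, just a minimal sketch of the same idea: ask the optimizer for the gradients, modify them, and then apply them yourself. It is written against `tensorflow.compat.v1` (an assumption: TensorFlow 1.14+ or 2.x) to match the graph-and-session style used in this post. The toy loss and the clipping range are made up for illustration.

```python
import tensorflow.compat.v1 as tf  # TF 1.x-style API; assumes TensorFlow 1.14+ or 2.x

graph = tf.Graph()
with graph.as_default():
    w = tf.Variable(3.0, name="w")
    b = tf.Variable(1.0, name="b")
    # Not trainable, so the optimizer will ignore it.
    step = tf.Variable(1, trainable=False, dtype=tf.int32, name="step")

    # Toy loss (hypothetical): squared error of w * 2 + b against a target of 5.
    loss = tf.square(w * 2.0 + b - 5.0)

    optimizer = tf.train.GradientDescentOptimizer(learning_rate=0.1)
    # Step 1: compute the gradients, but do not apply them yet.
    grads_and_vars = optimizer.compute_gradients(loss)
    # Step 2: change them however you like; here, clip each one to [-1, 1].
    clipped = [(tf.clip_by_value(g, -1.0, 1.0), v) for g, v in grads_and_vars]
    # Step 3: apply the modified gradients.
    train_op = optimizer.apply_gradients(clipped)
    init_op = tf.global_variables_initializer()

with tf.Session(graph=graph) as sess:
    sess.run(init_op)
    raw_grads = sess.run([g for g, _ in grads_and_vars])
    sess.run(train_op)
    w_val, b_val, step_val = sess.run([w, b, step])

print(raw_grads)                 # gradients for w and b before clipping
print(w_val, b_val, step_val)    # w and b each moved by 0.1; step is untouched
```

Only `w` and `b` show up in `grads_and_vars`, because `step` was created with `trainable=False`. Calling `optimizer.minimize(loss)` would do the compute and apply steps in one go; splitting them is what gives you the chance to inspect or rewrite the gradients in between.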

Conclusion (TL;DR)

If you are not doing research work or developing some special kind of neural network, then go for Keras (trust me, I am a Keras fan!!). It's super easy to quickly build even very complex models in Keras.

If you want more control over your network and want to watch closely what happens to it over time, TF is the right choice (though the syntax can give you nightmares sometimes). But since Keras has been integrated into TF, it is wiser to build your network using tf.keras and insert anything you want in the network using pure TensorFlow. In short,

tf.keras + tf = All you ever gonna need

P.S. I wrote this article a year ago. There have been some changes since then and I will try to incorporate them soon as per the new versions but the core idea is still the same.