For a long time there'd been a project on my ideas list that I'd been ignoring: create pornography using AI. A month ago I asked myself, "Why am I not doing this?" And so I delved into a brave new world.

In this story I'll tell you how that went. I'll include a couple of synthetic-but-sort-of-graphic images. I'll tell you what went well, and what didn't. I'll even throw in a bit of machine learning technology.

Part I: The premise

I've always been interested in cutting-edge technology, and also in exploring the more exotic corners of the world. Initially a joke between me and some friends, "make porn with AI" was a persistent idea that never fully faded away.

Pornography, as content, has properties that make it amenable to being synthesized. Whereas we seek out blockbuster films and TV shows with our favorite actors in them, we're much happier to consume pornography in a somewhat random, transactional manner. We are OK with photos of actors we've never seen before, and we don't need a plot to tie the pictures together. We can happily consume a stream of random, unconnected images (à la Tumblr). And we don't mind low production quality in our pornography.

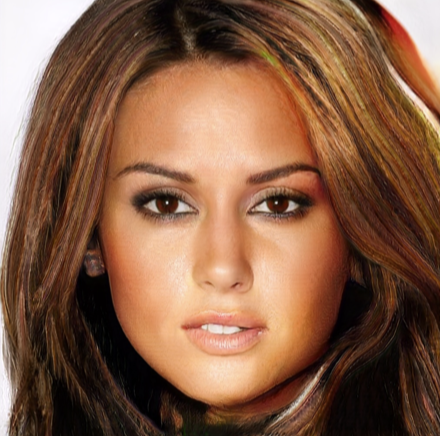

Not real... she's a GAN

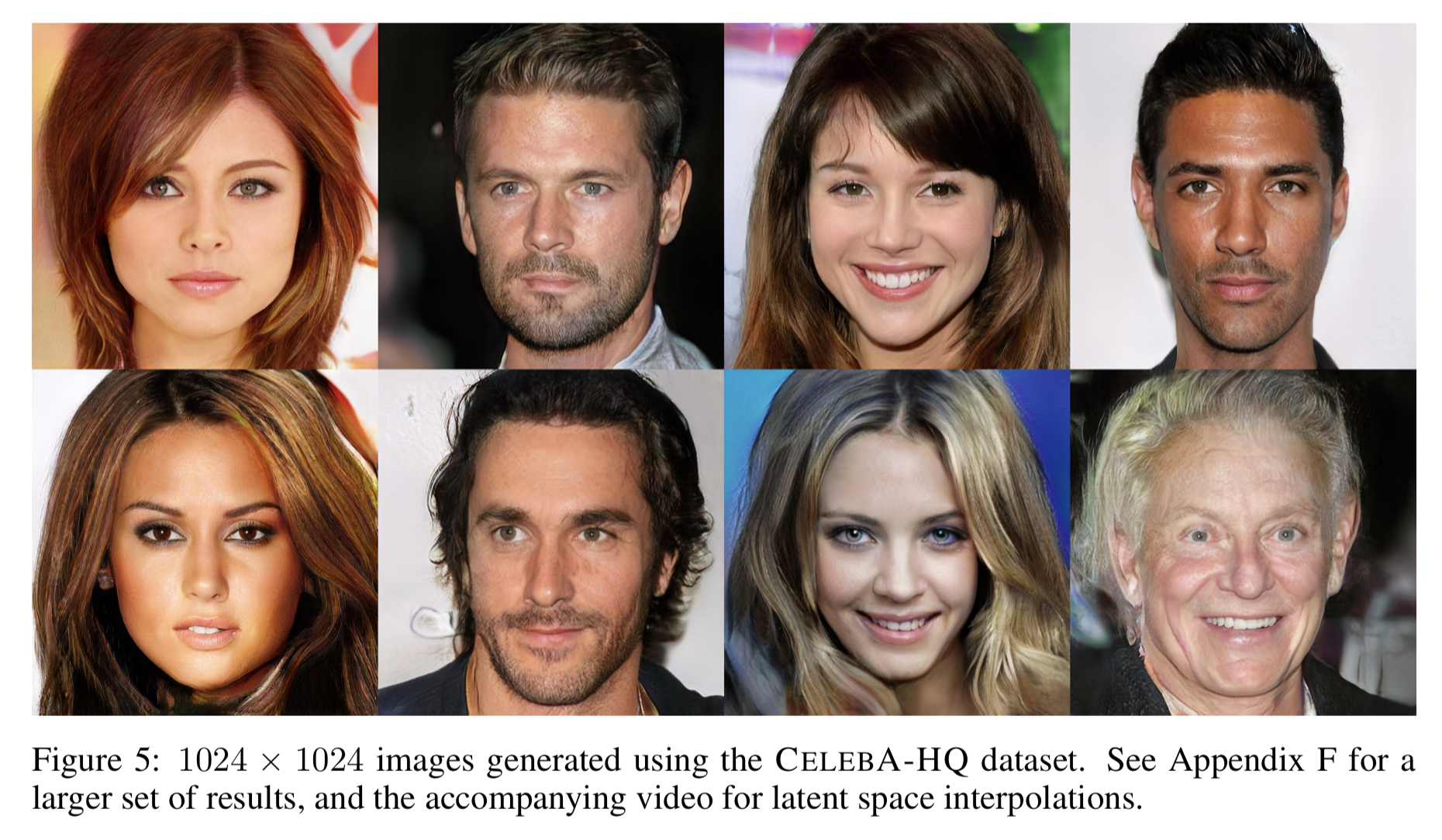

Generative Adversarial Networks (GANs) have been making headlines recently, most notably for their ability to generate high-resolution pictures of imaginary celebrities' faces. Seeing this breathtaking realism convinced me that now might be the time for AI-generated porn to take the stage.

Part II: The implementation

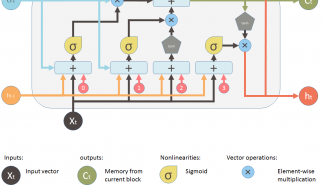

From the get-go there was one obvious type of model to employ in this project: a Generative Adversarial Network. These networks learn to generate images, transforming any supplied random input (a vector of random numbers) into an imagined image that the world has never seen before.

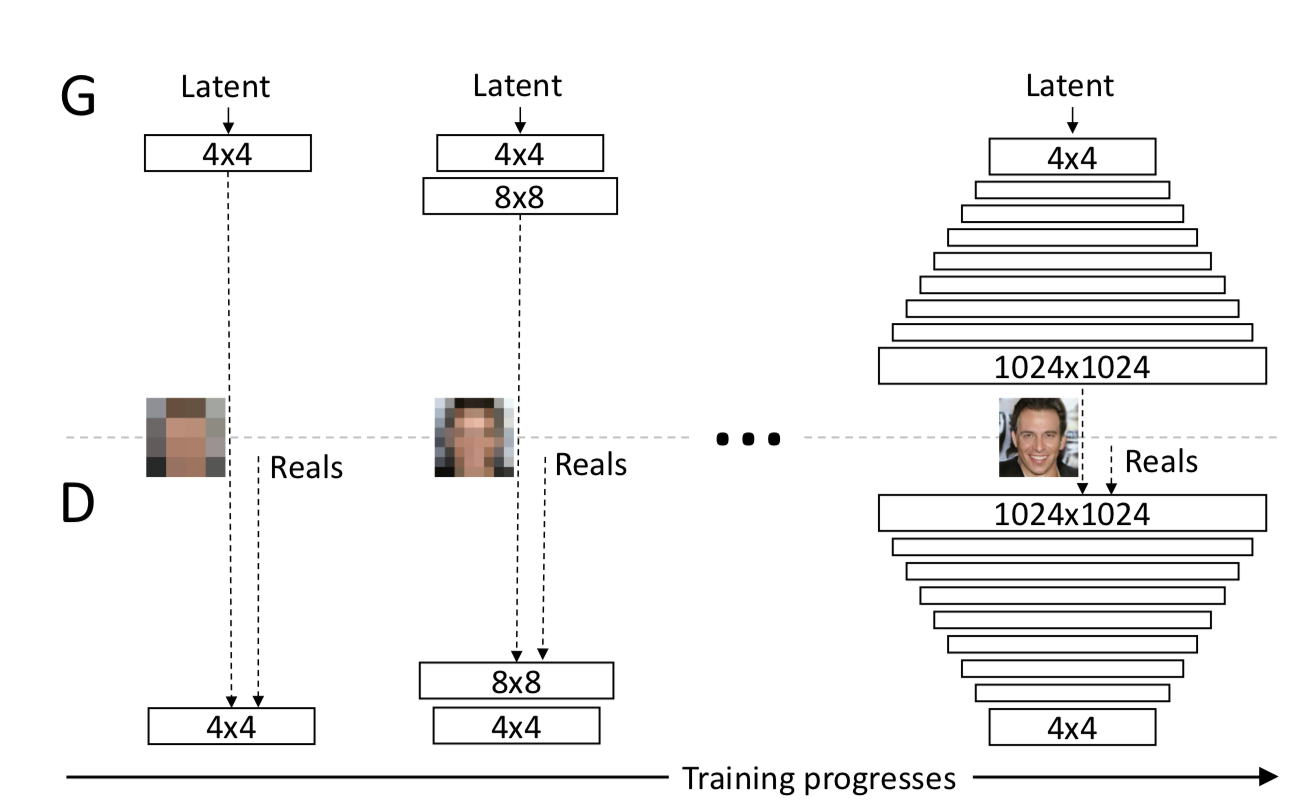

From the ProGAN paper

Following one of the most recent big successes, I adopted the Progressive Growing GAN (ProGAN) technique from Nvidia's research team. Thanks to the well-tested codebase the paper's authors published, I was able to start experimenting quickly.

Collecting the data

To train a GAN, you feed it images of the things you would like to generate. A GAN is really two networks trained together: a generator, which tries to produce images (the Generative part of the name), and a discriminator, which learns to distinguish the generated images from the real ones. These two networks are pitted against each other (that's the Adversarial part of the name) like a cop and a robber. As one gets better, so does the other. In the end, the generator can be used by itself to generate as many images as you wish.
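To make the two-player game concrete, here is a minimal sketch of the classic GAN losses, computed in plain Python for a handful of discriminator outputs (each one the discriminator's probability that an image is real). It is an illustration only, not ProGAN's code: as far as I know ProGAN itself trains with a Wasserstein loss with gradient penalty (WGAN-GP). The function names are my own.

```python
import math

def bce(prob, target):
    """Binary cross-entropy of one predicted probability against a 0/1 target."""
    eps = 1e-12  # clamp so log() never sees exactly 0 or 1
    prob = min(max(prob, eps), 1.0 - eps)
    return -(target * math.log(prob) + (1.0 - target) * math.log(1.0 - prob))

def discriminator_loss(d_real, d_fake):
    """The discriminator wants real images scored near 1 and generated ones near 0."""
    real_term = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: the generator wants its images scored as real."""
    return sum(bce(p, 1.0) for p in d_fake) / len(d_fake)
```

When the discriminator confidently scores real images at 0.9 and fakes at 0.1, its own loss is small while the generator's is large; minimizing that generator loss pushes the generator toward images the discriminator scores as real, which is the cop-and-robber dynamic in numbers.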

Source

To collect a dataset for this project, I needed to get a little more creative. I needed tens, or better, hundreds of thousands of pornographic images.

One way people collect datasets is to write web scrapers: little robots that trundle across the web collecting information from websites. I considered this, but it can take quite some time to get a web scraper working really well. I also feared that pornography websites might have defenses against web scrapers.

Exploring further, I started hunting for BitTorrent pornography archives. While many had only tens or hundreds of images, I finally found one with two hundred thousand. It contained photographs of women, almost all of them alone, in around twenty different poses. These photos were of unknown copyright and ethical origin; however, I deferred solving those problems until I could prove this approach had any merit at all.

After a week of writing code to take these images and convert them into a format the ProGAN code could use, I was finally ready to start experimenting. I should note here that data wrangling is often a big part of any machine learning effort, and that was certainly true for this project.
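Much of that week was plain image plumbing. As I understand it, the official ProGAN dataset tool expects square images whose side is a power of two, and stores a pyramid of downsampled copies for the progressive training. The sketch below reimplements that idea in plain Python on a greyscale image held as a list of rows; it is not that tool's code, the function names are hypothetical, and a real pipeline would also resize each crop to the target resolution (128×128 here) with an image library.

```python
def center_crop_box(width, height):
    """Largest centred square inside a width x height image, as (left, top, right, bottom)."""
    side = min(width, height)
    left = (width - side) // 2
    top = (height - side) // 2
    return (left, top, left + side, top + side)

def downsample_2x(img):
    """Halve a square greyscale image (list of rows) by averaging each 2x2 block."""
    n = len(img) // 2
    return [[(img[2 * y][2 * x] + img[2 * y][2 * x + 1]
              + img[2 * y + 1][2 * x] + img[2 * y + 1][2 * x + 1]) / 4.0
             for x in range(n)]
            for y in range(n)]

def resolution_pyramid(img, min_res=4):
    """Return [full, full/2, ..., min_res] copies of a square, power-of-two image."""
    size = len(img)
    if size < min_res or size & (size - 1) or any(len(row) != size for row in img):
        raise ValueError("image must be square with a power-of-two side >= min_res")
    levels = [img]
    while len(levels[-1]) > min_res:
        levels.append(downsample_2x(levels[-1]))
    return levels
```

Each level of the pyramid feeds the corresponding stage of progressive training, so the low-resolution stages never have to downsample on the fly.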

First experiments

I used Google Cloud to host these experiments, generally on a beefy, high-memory 10-core machine for building datasets and running the machine learning models. I attached 8 Nvidia Tesla GPUs to that machine.

NVidia Tesla GPU

With this many GPUs, the system would take only three days to train a model producing 128×128 pixel images. Building these models is a good reminder that access to expensive hardware is becoming the bottleneck for producing cutting-edge research. As someone who grew up in a world of cottage-industry game development, I find it sad to see home programming hobbyists lose access to an important technology.

Progressive Growing GANs are so named because they grow their pyramid of image-generating layers over time:

https://arxiv.org/abs/1710.10196

This growing of layers means that the network initially generates really small images (4×4 pixels in the ProGAN paper), and their resolution doubles each time a new layer is added. This is hilariously reminiscent of old, slow internet connections, except here you wait days for the image to reach full resolution.
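The new, higher-resolution layers are not switched on abruptly. The ProGAN paper fades them in: during a transition, the output is a blend of the previous stage's output, upsampled 2× with nearest-neighbor filtering, and the new layer's output, weighted by an alpha that rises linearly from 0 to 1. Below is a minimal sketch of that blend, with images as lists of rows; the function names and the fade length parameter are hypothetical.

```python
def upsample_nearest_2x(img):
    """Double a square image (list of rows) by repeating each pixel into a 2x2 block."""
    out = []
    for row in img:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out

def fade_alpha(images_seen, fade_images):
    """Blend weight for the new layer: a linear ramp from 0 to 1 over the fade-in period."""
    return min(1.0, images_seen / fade_images)

def faded_output(old_low_res, new_high_res, alpha):
    """Mix the upsampled old output with the new layer's output during a transition."""
    up = upsample_nearest_2x(old_low_res)
    return [[(1.0 - alpha) * a + alpha * b for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(up, new_high_res)]
```

At alpha = 0 the grown network produces exactly what the smaller one did, so adding a layer does not suddenly disrupt what has already been learned.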

Various runs failed for a range of tedious reasons (e.g. a simple variable was misnamed, or some files were in the wrong location). This was a good reminder to smoke-test my system before running it at full scale: that is, run a version of it with fewer files, a smaller batch size, fewer channels and smaller images that completes quickly, and check that all the parts of the system work together.
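One cheap way to build in that habit is to derive the smoke-test configuration from the full one, so the two cannot drift apart. The `TrainConfig` class, its fields and its default numbers below are hypothetical placeholders (only the 200,000 files and the 128×128 resolution come from this project), not settings from the ProGAN codebase.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class TrainConfig:
    # Hypothetical settings, for illustration only.
    num_files: int = 200_000
    batch_size: int = 64
    max_channels: int = 512
    resolution: int = 128

def smoke_test(cfg: TrainConfig) -> TrainConfig:
    """Shrink every expensive dimension so an end-to-end run finishes in minutes."""
    return replace(
        cfg,
        num_files=min(cfg.num_files, 256),
        batch_size=min(cfg.batch_size, 4),
        max_channels=min(cfg.max_channels, 16),
        resolution=min(cfg.resolution, 16),
    )
```

Running the pipeline once with `smoke_test(TrainConfig())` exercises data loading, every training stage and checkpointing, so a misnamed variable or misplaced file fails in minutes rather than days.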

Finally, the network converged after three days on the full dataset. And lo and behold, here was the future of pornography:

Enlarge for the full NSFW experience

They were not quite there yet.

An attempt by the network that looked more like an abstract painting

Working out why the model performed badly

The network struggled a lot with getting arms, legs, hands and faces in the right places. It had successfully learned their local textures, but not how to connect them all together. It was a bit like a person who has only ever seen the world through a small hole.

At this point, I turned to the academic literature to find out what I had done wrong. As best I could tell, my network had the same layout as the one in the original paper, and my dataset was large enough and correctly prepared.

Reading the latest GAN papers closely, I discovered some subtle tricks. To generate the celebrity faces, all the training images had been carefully aligned so that the eyes, nose and mouth were in the same location. The generator did not need to learn how to coordinate the facial features; it could always place them in the same spot.
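Alignment of this kind is usually done with a similarity transform (rotation, uniform scale and translation) that moves detected facial landmarks onto fixed target positions. The sketch below uses just the two eye centers and represents points as complex numbers, where such a transform is simply z → a·z + b. It assumes a separate landmark detector supplies the eye coordinates, and the function name and target positions are hypothetical; the actual celebrity-face pipeline used more landmarks and extra processing.

```python
def eye_alignment_transform(left_eye, right_eye, target_left, target_right):
    """Similarity transform mapping detected eye centers onto fixed target positions.

    Points are (x, y) tuples. Returns a function that maps any point of the
    original image to its position in the aligned image.
    """
    # As complex numbers, a similarity transform is z -> a * z + b.
    src_l, src_r = complex(*left_eye), complex(*right_eye)
    dst_l, dst_r = complex(*target_left), complex(*target_right)
    a = (dst_r - dst_l) / (src_r - src_l)  # combined rotation and scale
    b = dst_l - a * src_l                  # translation

    def apply(point):
        z = a * complex(*point) + b
        return (z.real, z.imag)

    return apply
```

Warping every training image with its own transform puts the eyes, and with them roughly the nose and mouth, at the same pixels in every picture, which is exactly the homogeneity the generator benefits from.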

Put simply, celebrity face generation relied upon having a fairly homogeneous dataset.

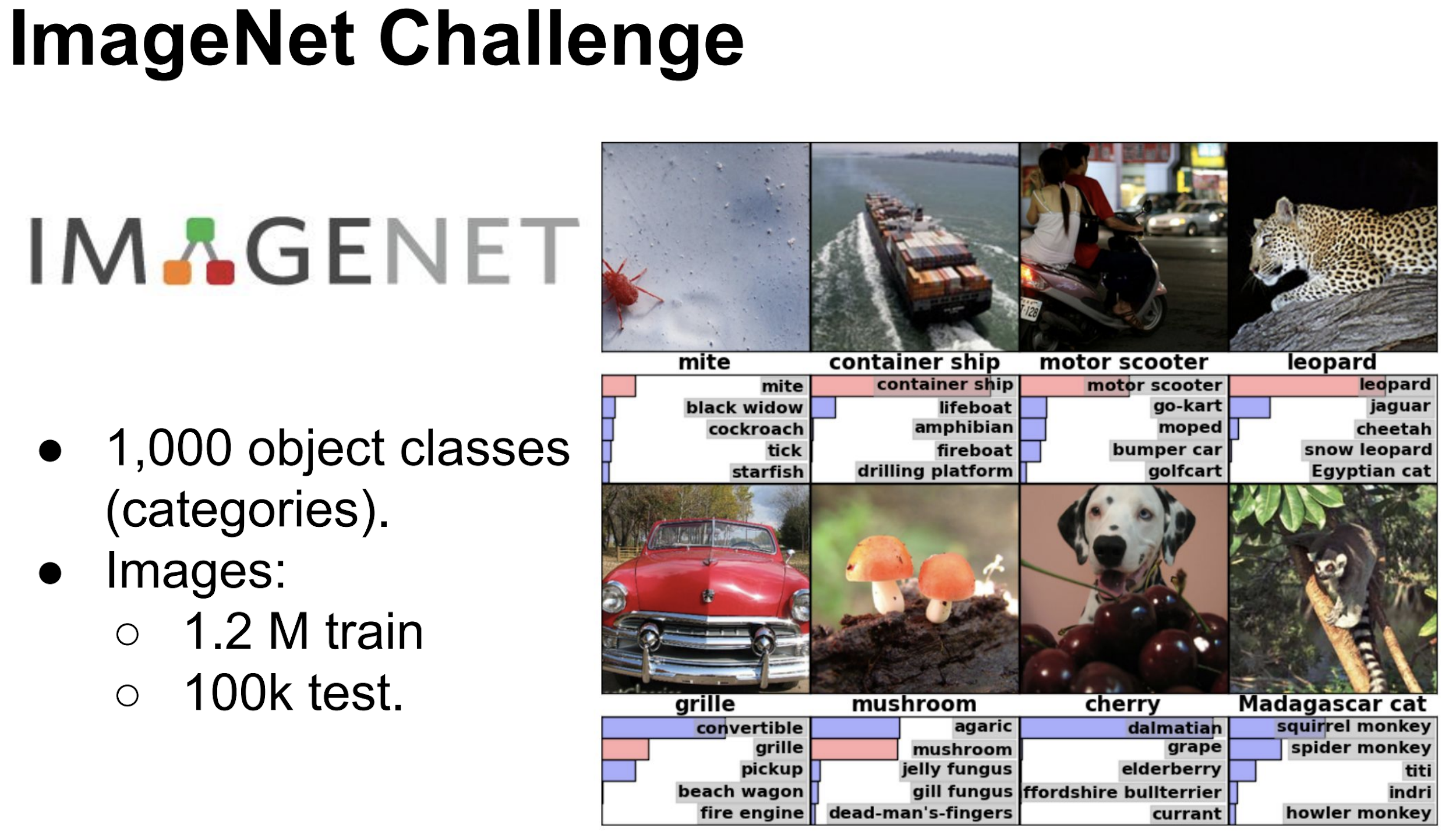

That explained ProGAN's performance on celebrity faces. But what about the other GANs that have learned to draw many kinds of objects, like mushrooms, dogs and bananas?

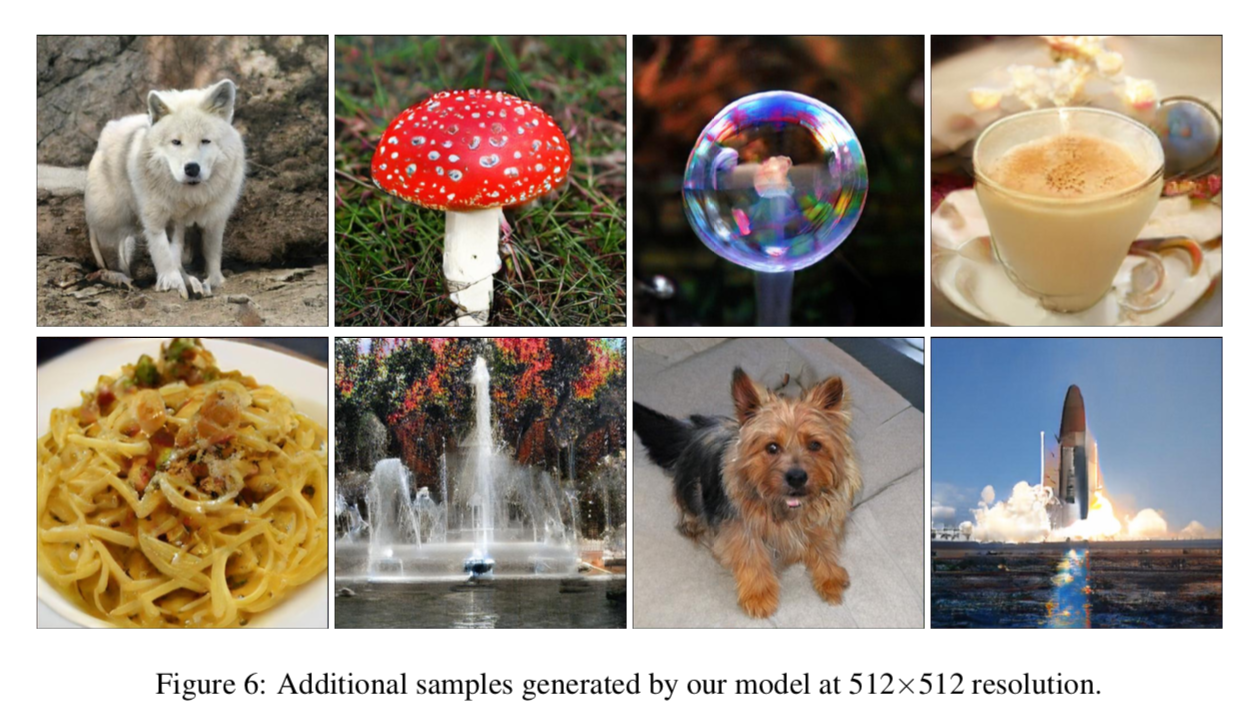

Nicely drawn pictures by BigGAN

It turns out these GANs are conditioned on the training images' labels. That is, each image of a mushroom is labelled as such, the GAN is told "generate an image of a mushroom", and then the discriminator is asked to compare real mushroom images with the generated mushroom images.
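The simplest way to condition a GAN, used in early conditional GANs, is to append a one-hot encoding of the label to the generator's random input and to the discriminator's input. BigGAN uses more elaborate machinery (class-conditional batch normalization in the generator and a projection discriminator), so treat this sketch as an illustration of the idea only; the label list and function names are hypothetical.

```python
import random

CLASSES = ["mushroom", "dog", "banana"]  # illustrative label set

def one_hot(label, classes=CLASSES):
    """1.0 in the position of the label, 0.0 everywhere else."""
    return [1.0 if c == label else 0.0 for c in classes]

def conditional_generator_input(label, latent_dim=8, rng=random):
    """Random latent vector with the requested class appended as a one-hot code."""
    z = [rng.gauss(0.0, 1.0) for _ in range(latent_dim)]
    return z + one_hot(label)

def conditional_discriminator_input(image_features, label):
    """The discriminator also sees the label, so it judges 'a real mushroom?', not just 'real?'."""
    return list(image_features) + one_hot(label)
```

Because both networks see the label, the generator only has to learn what each class looks like, rather than inventing a plausible arrangement from an unlabelled mixture of everything.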

This extra data, the labels on the training images, makes a massive difference. Furthermore, many of the most successful image classes have that item in a regular position in the image. This all transforms a very heterogeneous dataset into a moderately homogeneous one.

Now it was clear that I needed to make my dataset more homogeneous. From a visual scan of the archive and of the model's generated outputs, I noticed one type of image it excelled at: a close-up of a vagina. I reasoned that this was because that shot has a regular structure (e.g. where the legs are, where the tummy begins), just like the celebrity faces dataset.

Building a derivative dataset

I needed to build a subset of the data containing just that one pose.

Data scientists today are fortunate that pre-trained models can easily be downloaded and repurposed. By taking a model trained on huge volumes of images (at great computational cost) and lightly retraining it for your task ("transfer learning"), you quickly get a pretty good tool.

I used TensorFlow Hub to load a pre-trained ResNet. It had been trained on ImageNet (1.2 million images of common animals and household objects). The model exposes its "feature vectors": 2048-dimensional vectors that, when passed through a linear classifier (a single dense layer with a softmax, i.e. logistic regression), can classify the ImageNet images into one of 1,000 different labels. For our model, we'll pass the feature vectors through our own linear classifier to sort our images into our labels instead.

We have our data (200,000 pornography images), but what about the labels? At this point, I had to roll up my sleeves and do some hand-labelling of the pornography images. After a while this became moderately unpleasant, and made me question whether I was OK with this project.

"Supervised" learning means that the answer you want to get from the network is in the dataset (e.g. as labels). We often call this the "ground truth". You feed in an input, ask the network to transform it into an output, then compare that output to your expected value, the ground truth. Any discrepancy is a "loss", and the network's parameters are updated to minimize that loss.
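Combining the frozen ResNet feature vectors with this supervised recipe, the classifier on top can be a single logistic-regression layer: compare its output with the ground-truth label, measure the binary cross-entropy loss, and step the weights downhill. The dependency-free sketch below shows that loop; the function names, learning rate and epoch count are assumptions, and in practice one would likely use a `tf.keras.layers.Dense` layer on top of the TF Hub feature extractor rather than hand-written gradient descent.

```python
import math

def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def predict_proba(w, b, x):
    """Probability of label 1 for one feature vector."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def train_linear_head(features, labels, lr=0.5, epochs=200):
    """Logistic regression on frozen feature vectors, by full-batch gradient descent.

    features: list of equal-length float lists (e.g. 2048-d ResNet feature vectors)
    labels:   list of 0/1 ground-truth labels
    """
    dim, n = len(features[0]), len(features)
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, y in zip(features, labels):
            err = predict_proba(w, b, x) - y  # d(cross-entropy loss)/d(logit)
            for i in range(dim):
                grad_w[i] += err * x[i]
            grad_b += err
        # Step against the average gradient to reduce the loss.
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b
```

Only `w` and `b` are learned; the expensive ResNet stays fixed, which is why this kind of transfer learning trains in seconds on a few hundred labelled examples.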

Manual labelling of the images was slow work, so I wanted to minimize the number of labels required. I spent an hour categorizing images as "close-up vagina pose" (label 1) or "other pose" (label 0), then trained the model described above on them. I withheld some of my labels to test the model. In the end, I had 300 label-1 and 962 label-0 images. Training was fast and accurate, achieving around 95% accuracy.
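Because the classes are imbalanced, that accuracy figure needs a reference point: with 962 of the 1,262 labelled images in label 0, a model that always answers "other pose" would already score about 76%. The sketch below holds out a stratified test set (each label keeps its proportion) and computes both a model's accuracy and that majority-class baseline. The original text does not say how the held-out labels were chosen, so the split fraction and the helper names are assumptions.

```python
import random

def stratified_holdout(items, labels, test_fraction=0.2, seed=0):
    """Split (item, label) pairs so each label keeps the same share in the test set."""
    rng = random.Random(seed)
    train, test = [], []
    for label in sorted(set(labels)):
        group = [(x, y) for x, y in zip(items, labels) if y == label]
        rng.shuffle(group)
        k = round(len(group) * test_fraction)
        test.extend(group[:k])
        train.extend(group[k:])
    return train, test

def accuracy(predictions, labels):
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)

def majority_baseline(train_labels, test_labels):
    """Test accuracy of always predicting the most common training label."""
    majority = max(set(train_labels), key=train_labels.count)
    return accuracy([majority] * len(test_labels), test_labels)
```

With a 20% hold-out of 300 + 962 images, the test set gets 60 label-1 and 192 label-0 images, so the do-nothing baseline scores 192 / 252 ≈ 76%; a classifier at around 95% is doing real work on top of that.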

A bit of code later, I had a complete subset of 900 label-1 images. Ironically, I may have spent as long coding as I would have needed to extract the images manually, but the learning was valuable.

I fired up my dataset compiler and got ready for the next training run.

Training, round two

I launch the training again on my new dataset, and wait a few days to see the results.

Training is tantalizing. The early, low-resolution results reveal very little, but they invite my endless speculation on whether the model is working.

I also realize that training makes for wonderful procrastination. It's much easier to kick off a multi-day process than it is to scrutinize one's code and debug it. It's also easier, and more fun, to write new model features than to write unit tests for the existing ones.

A day later I start to see detailed results from the model. Its generated images actually look like vaginas:

Digging into the generated images, I find some that are less well formed:

These ones are weird, somewhat scary and alien-like.

As a proof of concept, this model has just about managed to cross the line. The photos are low-resolution and many have deformations, but the basic concept worked.

What was causing the deformities

Analyzing the malformed images, I noticed some patterns. They often contained fingers, usually far too many of them, and hands that didn't connect to them. They sort of reminded me of the Alien franchise.

Thinking about this problem and the architecture of ProGAN, I realized a fundamental limitation: ProGAN's stack of ever-higher-resolution convolutional layers has no way to coordinate details across the image. Each convolution only looks at a small neighborhood of pixels, so while the early, coarse layers can lay down the signal for an area to grow into a specific texture, the pieces of those textures are developed independently.
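One way to quantify "developed independently" is the receptive field: the patch of a layer's input that a single output value can depend on. The standard recurrence below computes it for a stack of convolutions. A stride-1 3×3 convolution widens the patch by only 2 pixels at its own resolution, so the final high-resolution layers can each coordinate detail only within a small neighborhood. This is a generic calculation with a hypothetical function name, not a measurement of the ProGAN architecture, whose upsampling steps complicate the picture.

```python
def receptive_field(layers):
    """Receptive field, in input pixels, of a stack of convolution layers.

    layers: list of (kernel_size, stride) pairs, first layer first.
    Uses the recurrence r += (k - 1) * j, j *= s, where j is the spacing,
    in input pixels, between neighbouring positions of the current layer.
    """
    r, j = 1, 1
    for k, s in layers:
        r += (k - 1) * j
        j *= s
    return r
```

For example, two stride-1 3×3 convolutions, as in a ProGAN-style block at its own resolution, see only a 5×5 patch of that block's input: plenty to draw skin texture, but not enough to count fingers across a whole hand.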

One logical solution to this, which has worked well elsewhere in text translation and image understanding, is self-attention, which Zhang, Goodfellow, Metaxas and Odena adapted for GANs in their Self-Attention GAN (SAGAN) paper.

Attention allows a model to "look back" or "look elsewhere" in the data to figure out what to do at its current location. For example, when translating French to Chinese, to choose the next Chinese word to output, the model can look back at all the input French words to help decide. Self-attention applies the same idea within a single input: each location in an image can look at every other location in that same image.

If the GAN could look at what else had been drawn to help determine what to draw next, perhaps that would help it coordinate fingers and similar tricky details.

It turned out that adding a single self-attention layer one third of the way through the generator had helped it achieve state-of-the-art image quality scores. I noted in my project journal to explore this for the next model.
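Below is a simplified, dependency-free sketch of a SAGAN-style self-attention layer. Each location j computes softmax-normalized weights over all locations i from the dot product of projected features f(x_i) and g(x_j), takes the weighted sum of the projected values h(x_i), and adds that back to its own input scaled by a learned gamma, which SAGAN initializes to 0 so the layer starts out as an identity. It omits SAGAN's final output projection, treats the paper's 1×1 convolutions as plain matrices, and the names and shapes are my own assumptions.

```python
import math

def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(features, Wf, Wg, Wh, gamma):
    """Simplified SAGAN-style self-attention over N spatial locations.

    features: N feature vectors (one per pixel location), each of length C
    Wf, Wg:   projections used to score location pairs (C' x C)
    Wh:       value projection (C x C), so the result can be added to the input
    gamma:    learned residual scale; 0 makes the layer an identity
    Returns (outputs, attention) where attention[j][i] is how much j looks at i.
    """
    f = [matvec(Wf, x) for x in features]
    g = [matvec(Wg, x) for x in features]
    h = [matvec(Wh, x) for x in features]
    outputs, attention = [], []
    for j, x_j in enumerate(features):
        scores = [sum(a * b for a, b in zip(f[i], g[j])) for i in range(len(features))]
        weights = softmax(scores)
        attention.append(weights)
        o_j = [sum(w * h[i][c] for i, w in enumerate(weights)) for c in range(len(h[0]))]
        outputs.append([gamma * o + x for o, x in zip(o_j, x_j)])
    return outputs, attention
```

Every location attends to every other one, so the cost grows with the square of the number of positions: at 64×64 that is 4,096 positions and about 16.8 million pairs, which is likely one reason such layers sit at intermediate resolutions rather than at the output.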

Packaging up the model

I let the model sit and headed out to the mountains with my friends for some exercise and relaxation. I told them all about my project and how far it had got. I showed them some results.

A friend giggled and said, "Have you seen ThisPersonDoesNotExist.com?" I smiled, knowing what they were implying, and checked which domain names were available.

By the next evening the website was built. I re-ran my model and had it generate 10,000 images. I built a basic HTML and JavaScript site to serve the images at random. I uploaded it all, checked that it worked, and went to bed.

ThisVaginaDoesNotExist.com was born.

Part III: The reception

Being in startup-land teaches you to ship early and to try to get some attention for your work. I set out to apply this approach to my new website. We reckoned it had some novelty and newsworthiness.

Monday morning, as people poured their first coffees, I began to share the website.

First, Hacker News. It got off to a good start, garnering votes and interesting comments. As it gained momentum and looked like it might creep onto the front page, BOOM: it was flagged by moderators. The link was redacted and the post withered.

I tried Product Hunt. The submission disappeared, I think because it didn't meet their guidelines.

Next up, Reddit. We felt for sure there would be a home for it somewhere in this internet bazaar. We tried r/MachineLearning, r/Porn and r/WTF.

We posted the submissions and encouraged them along, but they all got flagged. Dead in the water, once again.

Simple person-to-person sharing got a little momentum, but people invariably ran out of friends they felt comfortable sharing this sort of NSFW material with. Also, AI-Porn-Startup-Prototype is a bit of a niche interest.

To explore the field some more, I also talked to some friends in the VC sphere. They said that due to their LPs' policies and reputations, their funds could not invest in the adult industry.

Part IV: Reflection

I felt at this point that I'd hit a dead end. Press and fundraising would be tough and would require some extra creativity and force. I spoke to friends about hiring them, and got polarized answers. Overall, this project had become less appealing.

Also, when I talked to my friends, their enthusiasm varied. Some were outright against the project; some had ethical concerns.

For my part, I thought a lot about the ethics involved. There were many things to consider.

At the macro level, if this project were a success, it could improve the world. Many people are harmed in the production of pornography, and this project (given the very low cost of producing new images) could supplant that production. Pornography would harm fewer people.

On the other side, this would displace workers and reshape an industry. I believe that these people would find new work, and also that the transition could be difficult. The industry has faced many challenges over the past decade from digital technology and piracy, and it's already become a difficult place to make money or a career in.

The consumption of porn may be harmful. Researching online, I found it hard to get a clear, unbiased view on this. Uncertain of the situation here, I decided that producing non-violent porn of a consensual nature, and including messages encouraging positive sexual attitudes, would be enough to break even on this ethical concern, at least until more research becomes available.

Finally, there is the issue of the training images: they came from real actors, who may be experiencing harm. To mitigate this, my plan was to seek out pornography that had been ethically sourced.

Reflecting on my earlier experience of trying to share the project, I felt I'd struck a corner of hypocrisy in our society and technology: pornography is a major part of internet usage and people's daily lives, yet we're not comfortable talking about it. Here was a fledgling technology that could improve the situation, but we moderate and bury it.

Finally, checking in with myself, this no longer felt like an industry and line of work I wanted to be in. Working with the content felt unpleasant. The various ethical issues and inevitable judgement and stigma didn't feel worth fighting.

Overall, this had been a fascinating dive into an entirely different area of the world from what I knew. I learned a lot, about technology, society and myself.

Part V: Postlude

Having stepped back from the project, I realized another facet of it. The ways my models struggled are the same ways that the current state of the art (BigGAN) also struggles:

BigGAN includes the self-attention mechanism mentioned earlier, yet still has problems coordinating fine details.

My foray into pornography had circled me back around into academic research.

I'm now working on a series of experiments to try to take self-attention further. I want to see whether refinements to the model can solve the visual problems seen in the baseball players above. After that, who knows.