This is a very easy tutorial that will let you install Spark in your Windows PC without using Docker.

By the end of the tutorial you?ll be able to use Spark with Scala or Python.

Before we begin:

It?s important that you replace all the paths that include the folder ?Program Files? or ?Program Files (x86)? as explained below to avoid future problems when running Spark.

If you have Java already installed, you still need to fix the JAVA_HOME and PATH variables

- Replace ?Program Files? with ?Progra~1?

- Replace ?Program Files (x86)? with ?Progra~2?

- Example: ?C:Program FIlesJavajdk1.8.0_161? –> ?C:Progra~1Javajdk1.8.0_161?

1. Prerequisite ? Java 8

Before you start make sure you have Java 8 installed and the environment variables correctly defined:

- Download Java JDK 8 from Java?s official website

- Set the following environment variables:

- JAVA_HOME = C:Progra~1Javajdk1.8.0_161

- PATH += C:Progra~1Javajdk1.8.0_161bin

- Optional: _JAVA_OPTIONS = -Xmx512M -Xms512M (To avoid common Java Heap Memory problems whith Spark)

Tip: Progra~1 is the shortened path for ?Program Files?.

2. Spark: Download and Install

- Download Spark from Spark?s official website

- Choose the newest release (2.3.0 in my case)

- Choose the newest package type (Pre-built for Hadoop 2.7 or later in my case)

- Download the .tgz file

2. Extract the .tgz file into D:Spark

Note: In this guide I?ll be using my D drive but obviously you can use the C drive also

3. Set the environment variables:

- SPARK_HOME = D:Sparkspark-2.3.0-bin-hadoop2.7

- PATH += D:Sparkspark-2.3.0-bin-hadoop2.7bin

3. Spark: Some more stuff (winutils)

- Download winutils.exe from here: https://github.com/steveloughran/winutils

- Choose the same version as the package type you choose for the Spark .tgz file you chose in section 2 ?Spark: Download and Install? (in my case: hadoop-2.7.1)

- You need to navigate inside the hadoop-X.X.X folder, and inside the bin folder you will find winutils.exe

- If you chose the same version as me (hadoop-2.7.1) here is the direct link: https://github.com/steveloughran/winutils/blob/master/hadoop-2.7.1/bin/winutils.exe

2. Move the winutils.exe file to the bin folder inside SPARK_HOME,

- In my case: D:Sparkspark-2.3.0-bin-hadoop2.7bin

3. Set the folowing environment variable to be the same as SPARK_HOME:

- HADOOP_HOME = D:Sparkspark-2.3.0-bin-hadoop2.7

4. Optional: Some tweaks to avoid future errors

This step is optional but I highly recommend you do it. It fixed some bugs I had after installing Spark.

Hive Permissions Bug

- Create the folder D:tmphive

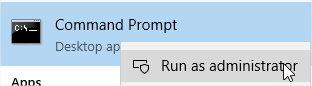

- Execute the following command in cmd started using the option Run as administrator

- cmd> winutils.exe chmod -R 777 D:tmphive

3. Check the permissions

- cmd> winutils.exe ls -F D:tmphive

5. Optional: Install Scala

If you are planning on using Scala instead of Python for programming in Spark, follow this steps:

1. Download Scala from their official website

- Download the Scala binaries for Windows (scala-2.12.4.msi in my case)

2. Install Scala from the .msi file

3. Set the environment variables:

- SCALA_HOME = C:Progra~2scala

- PATH += C:Progra~2scalabin

Tip: Progra~2 is the shortened path for ?Program Files (x86)?.

4. Check if scala is working by running the following command in the cmd

- cmd> scala -version

Testing Spark

PySpark (Spark with Python)

To test if Spark was succesfully installed, run the following code from pyspark?s shell (you can ignore the WARN messages):

Scala-shell

To test if Scala and Spark where succesfully installed, run the following code from spark-shell (Only if you installed Scala in your computer):

PD: The query will not work if you have more than one spark-shell instance open

PySpark with PyCharm (Python 3.x)

If you have PyCharm installed, you can also write a ?Hello World? program to test PySpark

PySpark with Jupyter Notebook

This tutorial was taken from: Get Started with PySpark and Jupyter Notebook in 3 Minutes

You will need to use the findSpark package to make a Spark Context available in your code. This package is not specific to Jupyter Notebook, you can use it in you IDE too.

Install and launch the notebook from the cmd:

Create a new Python notebook and write the following at the beginning of the script:

Now you can add your code to the bottom of the script and run the notebook.