When the co-founder of your school explains to you in less than 15 minutes how to solve an advanced problem from the curriculum, one you've had little experience with or knowledge of, then asks you to write a blog post about how the OS of a machine finds the maximum and minimum values for the integer data type, you happily oblige.

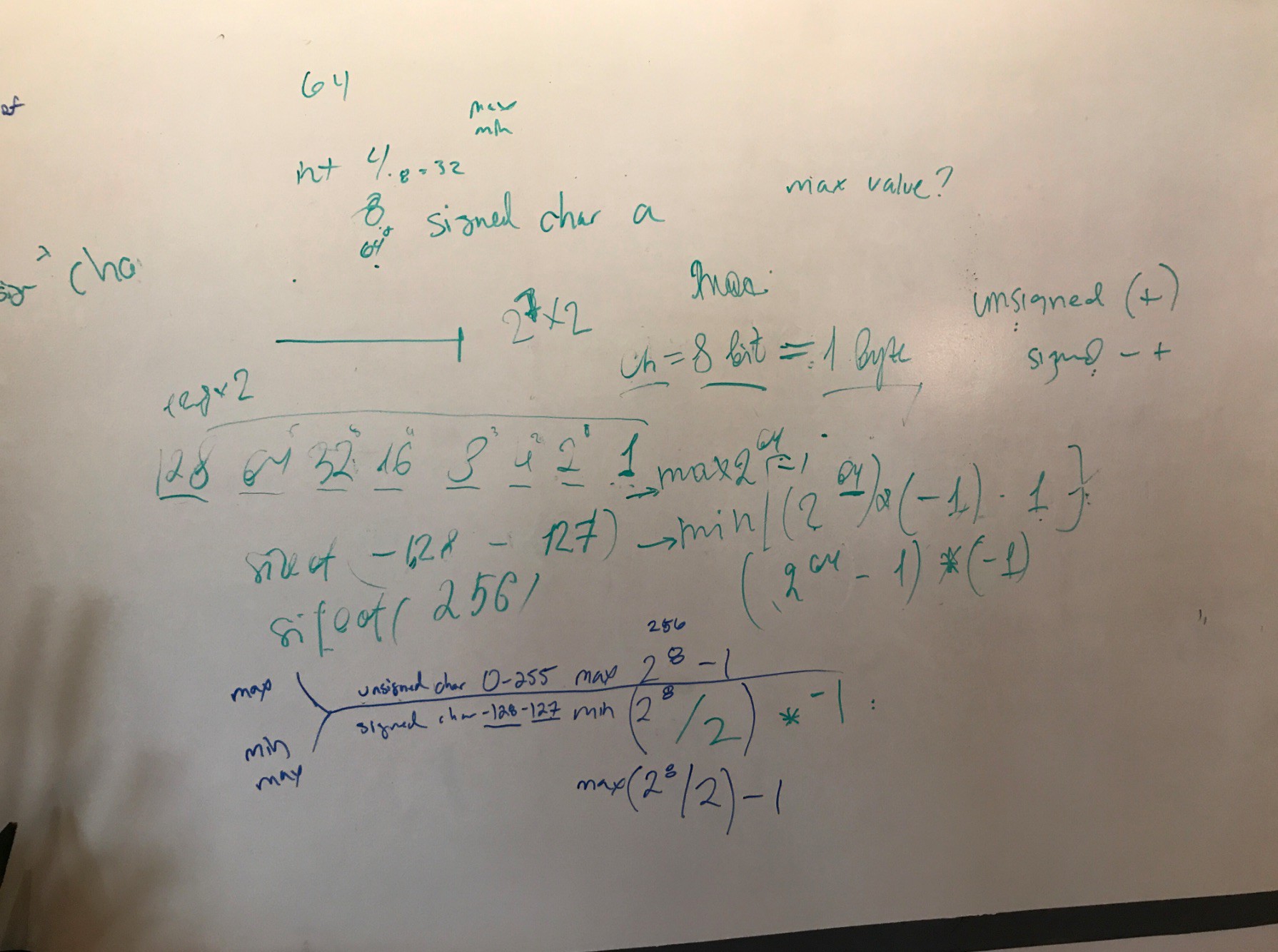

If Julien is reading this, the pseudocode, which is chicken scratch at this point, is my work, not the work of another student. Taking pictures of other students' code is strongly not recommended. Whiteboarding, on the other hand, is the bedrock of learning how to write programs.

To answer the question, we first have to understand what data types exist in the computer language you are using (C for this example), figure out how many bytes are in each data type, then figure out if the application was built for a 32-bit or 64-bit processor.

TL;DR

In the picture above, there's an equation you can use in your C program. I've decoded the chicken scratch in the picture above into an equation you can use below. You can also compile and run this file to find the data type sizes on a 32-bit vs 64-bit processor.

Unsigned data types:

int max = pow(2, number of bits assigned to data type) - 1;

Signed data types:

int min = (pow(2, number of bits assigned to data type) / 2) * -1;

int max = (pow(2, number of bits assigned to data type) / 2) - 1;

Explained:

What data types exist in the C language?

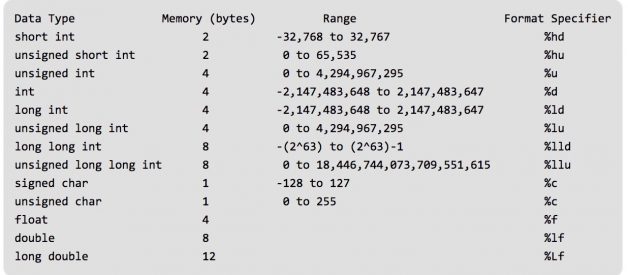

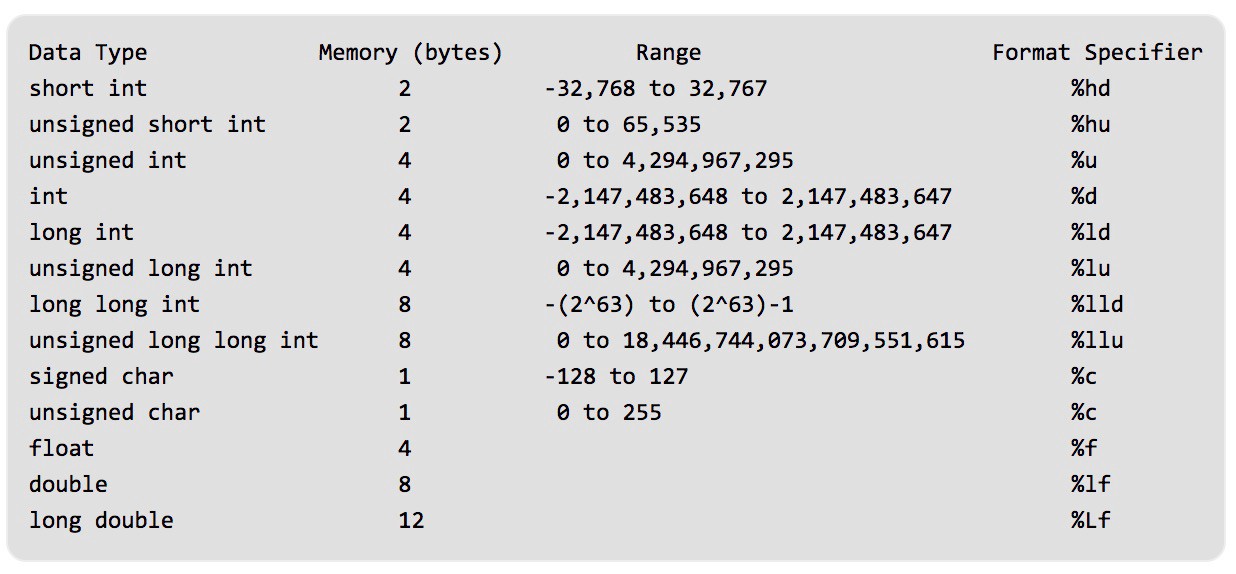

GeeksforGeeks has a great article on all data types in the C language. Without going into the architecture of how the C language was built, there are ranges of values assigned to each data type. When reading through the picture below, you might recognize a pattern.

Photo credit : GeeksforGeeks.

The words int and char might stand out, along with the words signed and unsigned. The word int is shorthand for integer and char is shorthand for character. Each integer data type in C can only hold values within a fixed range. A short int, which has two bytes of memory, has a minimum value of -32,768 and a maximum value of 32,767. An unsigned short int, unsigned meaning having no negative sign (-), has a minimum value of 0 and a maximum value of 65,535.

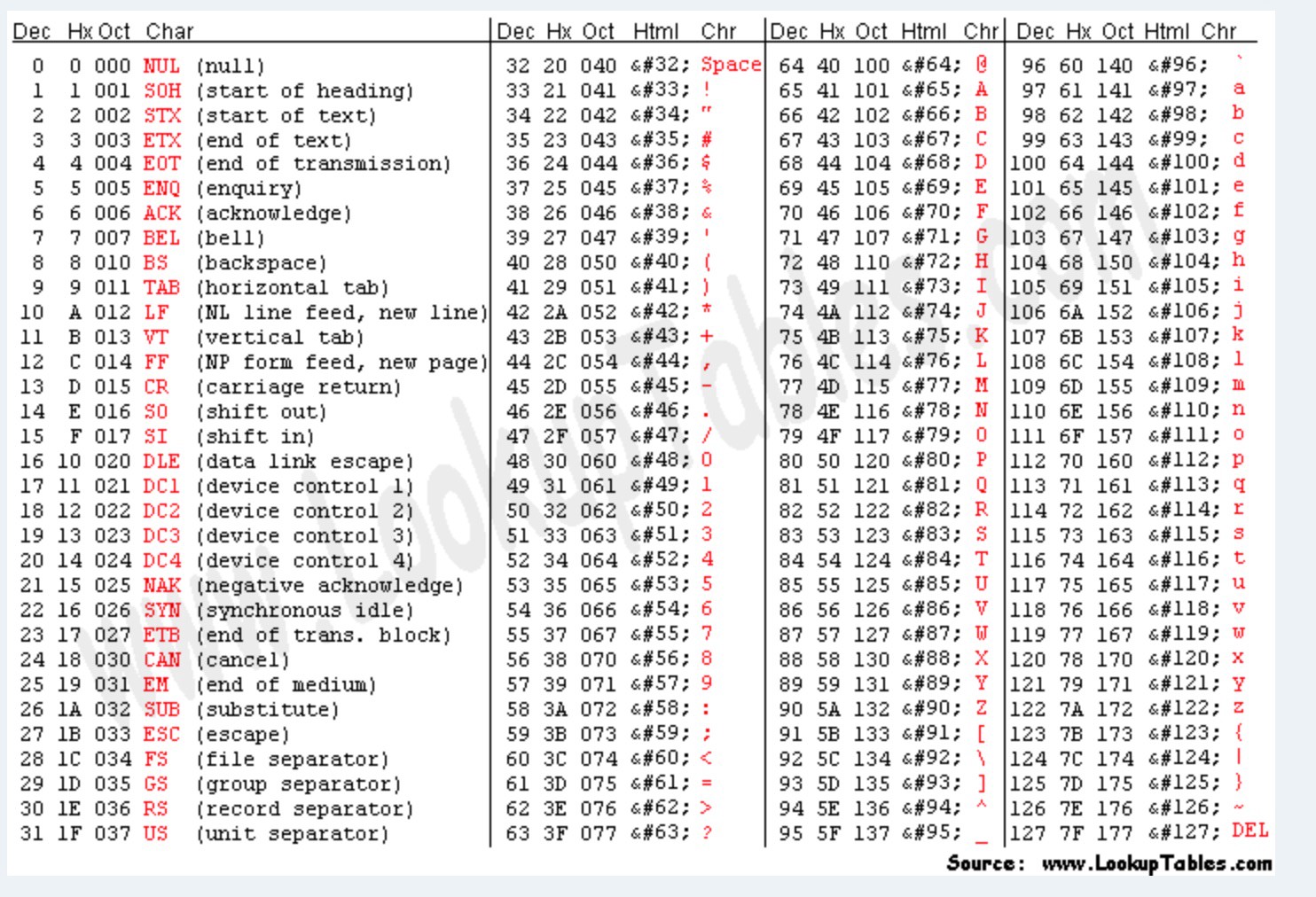

Photo Credit: ASCIItable.com

Integer data types are simply expressed as integers within a specific range using the equation above. char data types, on the other hand, are character representations of numbers referencing the ASCII table. Take a look at the image of the ASCII table above. ASCII stands for American Standard Code for Information Interchange. Computers make computations with numbers, so an ASCII code is the numerical representation of a character such as the character 'a', 'x' or '!'.

When you declare a variable in your program such as

char first_initial = 'N';

you are assigning the ASCII table integer value of 78, instead of the letter ‘N’ to the variable first_initial. The computer internalizes it as the base 10, decimal integer value of 78, which then gets printed to the terminal as the character value of capital N by using a function like putchar(78). As a software engineer, you have to make conscious decisions on what data types you want to assign in your application based off their memory usage and the types of problems you are trying to solve.

How many bytes are in each data type?

If you reference the GeeksforGeeks table again, the C language has a predefined number of memory slots (bytes) assigned to each data type. In the C language there are 8 bits in 1 byte. Why were 8 bits assigned to 1 byte in the C language?

Let's refer back to our assignment of the capital letter ‘N’ to our variable. When we assign the ‘N’ to the char data type, we are assigning 1 byte of memory to the variable first_initial.

char is 1 byte in C because the standard specifies it. That's because the binary representation of a char can fit into 1 byte. At the time of the primary development of C, the most commonly available character standards were ASCII and EBCDIC, which needed 7-bit and 8-bit encodings respectively. 1 byte was sufficient to represent the whole character set.

"What's the binary representation of a char data type?", you ask.

You know the famous Matrix picture with all the 0's and 1's that surround Neo when he's in his epic battle? Those 0's and 1's are formatted in binary representation.

Binary representation is a base-2 number system that uses two mutually exclusive states to represent information. Binary representation is a pattern of numbers designed for computers to understand.

What does this binary number represent in decimal notation?

0000 0110

The answer is six. How did I come to that conclusion? Think of each digit as a bit. In the sequence above there are eight digits, each either 0 or 1, meaning there are eight bits. Starting from the furthest digit on the right, we count leftward, and the first position is worth:

2 ^ 0 which is 2 to the 0th power.

The second position would be 2 to the 1st power, followed by 2 to the 2nd power, until we end up at the leftmost position, which would be 2 to the 7th power. Below is a simple display that shows the decimal value of each binary position.

128 64 32 16 8 4 2 1

0 0 0 0 0 1 1 0

Again, looking at the binary representation, each position can only hold a 1 or a 0, meaning present or not present, because the two states are mutually exclusive. If a position holds a 1, we add that position's value to the total we want to represent in decimal notation. In our example, there is a 1 in the 2s position and another 1 in the 4s position. We get 6 from adding 2 + 4.

Another useful numerical representation for computers is hexadecimal, a base-16 number system. One common use is describing a color value to the computer with a pattern such as #FF5733, which is orange.

So what would be the decimal representation of:

0110 0111 or 0011 1111 ?

Tying it all together

How do you get the maximum and minimum values for integer data types based off the operating system in C? Use the equation below.

Unsigned data types:

int max = pow(2, number of bits assigned to data types) - 1;

Signed data types:

int min = (pow(2, number of bits assigned to data types) / 2) * -1;

int max = (pow(2, number of bits assigned to data types) / 2) - 1;

Let's use the unsigned short int data type with a max value of 65,535. We can find it two ways. The easiest way would be using the equation above. To find the max value for the unsigned short int data type, we take 2 to the power of 16 and subtract 1, which is 65,535. We get the number 16 by taking the number of bytes assigned to the unsigned short int data type (2) and multiplying it by the number of bits in each byte (8).

Another way of finding out the maximum value for the unsigned short int data type in C is using binary representation. We know that there are 16 bits in the unsigned short int data type from the example above. If we put that into the base 2, binary representation, it would look something like this.

1111 1111 1111 1111

In decimal notation, that would be (1 + 2 + 4 + 8) + (16 + 32 + 64 + 128) + (256 + 512 + 1024 + 2048) + (4096 + 8192 + 16384 + 32768), which is 65,535.

The way we represent a signed short int is different from the way we represent an unsigned short int. We have to take into account that unsigned short ints are never negative. When we use binary representation for these numbers, each bit that is a 1 is added to the decimal value.

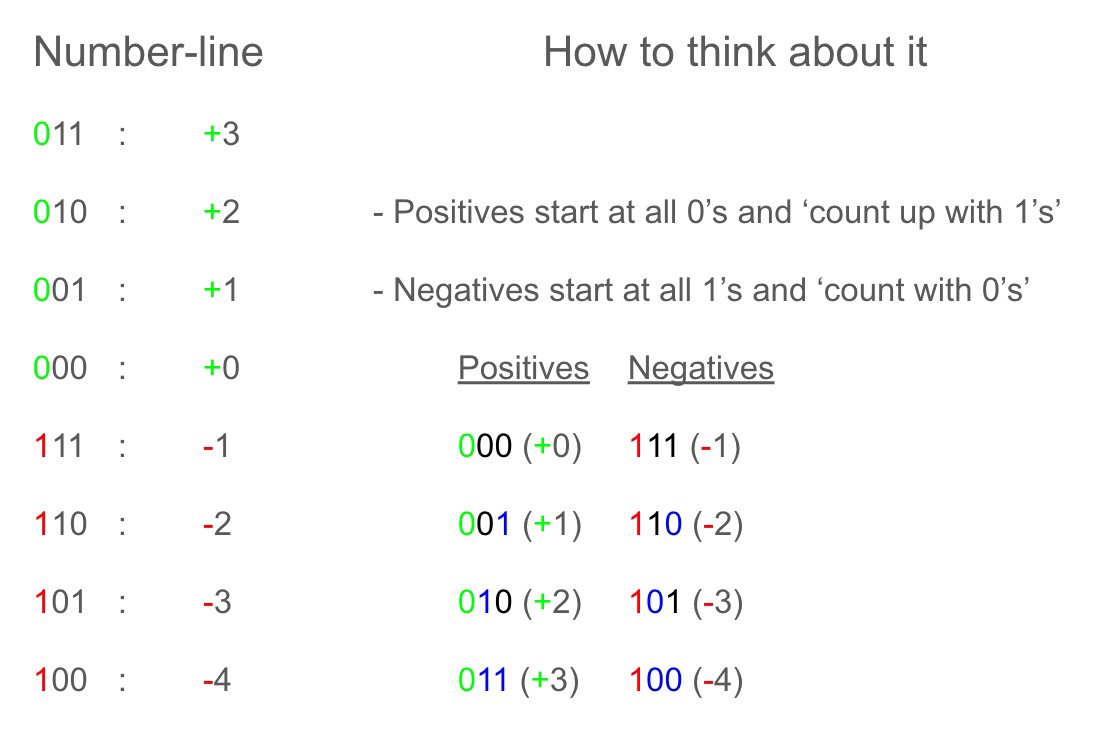

Negative numbers for 3-bit signed integers. Photo credit: Isaiah Becker-Mayer

When we want to read a signed data type in binary representation, the first bit tells us the sign: if the first bit is 0, the number is positive, and if the first bit is 1, the number is negative.

We count negative numbers differently. Instead of starting with all 0's and counting the 1's, we start with all 1's, which is -1, and count the 0's, subtracting the place value of each 0. Take a look at this signed short int example:

1000 0000 0000 0000

The example above is -1 + -32,767, which is -32,768 in decimal notation and is the minimum value for the signed short int data type. Again, negatives for signed data types start with all 1's and count the 0's. Look at the example below.

1111 1111 1111 1100

The example above is -4 in decimal notation: the two 0's sit in the 1s and 2s positions, so we get -1 - 1 - 2. Now you know how to find the maximum and minimum values of unsigned and signed data types in binary notation.

32-bit vs. 64-bit processors

There are two fundamentally different architectures that manufacturers of computer processors use, 32-bit and 64-bit. If you'd like to read more on the applicable differences between the two, read this article by Jon Martindale.

"The number of bits in a processor refers to the size of the data types that it handles and the size of its registry. Simply put, a 64-bit processor is more capable than a 32-bit processor because it can handle more data at once. A 64-bit processor is capable of storing more computational values, including memory addresses, which means it's able to access over four billion times as much physical memory than a 32-bit processor. That's just as big as it sounds. 64-bit processors are to 32-bit processors what the automobile is to the horse-drawn buggy."

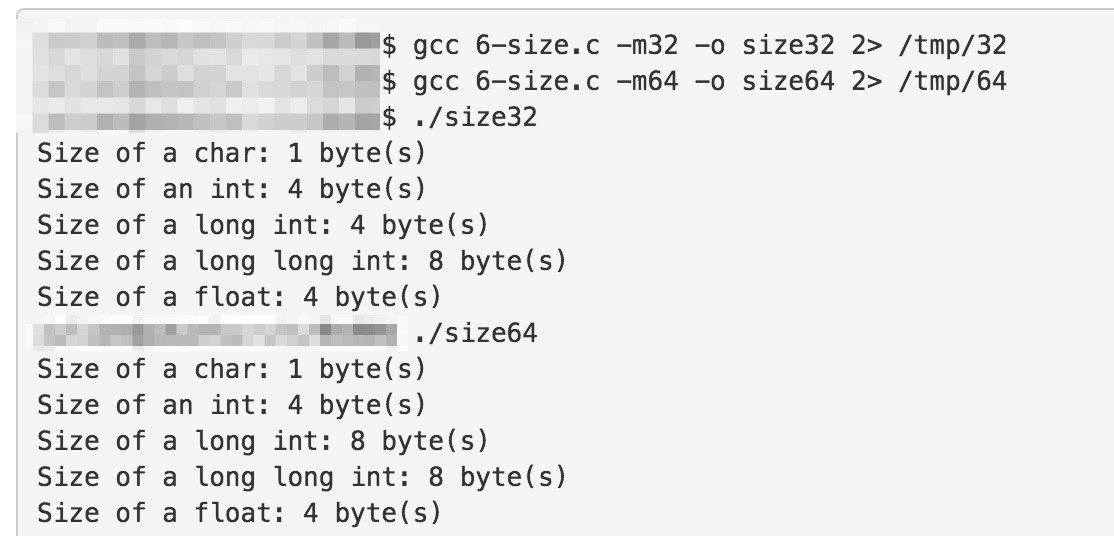

Depending on the OS and processor you build your program for, the C compiler may assign a different number of bytes to some data types. Compile this file and run the executable. It prints the size of each data type.

This shows that there are different byte assignments to data types that run on 32-bit processors vs 64-bit processors.

If you have any questions or comments, feel free to add them below. You can follow me on twitter @ NTTL_LTTN. Thanks for your time.