On November 1, 2016, I started a year-long project, challenging myself to master one expert-level skill every month. For the first eleven months, I succeeded at each of the challenges:

I landed a standing backflip, learned to draw realistic portraits, solved a Rubik's Cube in 17 seconds, played a five-minute improvisational blues guitar solo, held a 30-minute conversation in a foreign language, built the software part of a self-driving car, developed musical perfect pitch, solved a Saturday New York Times crossword puzzle, memorized the order of a deck of cards in less than two minutes (the threshold to be considered a grandmaster of memory), completed one set of 40 pull-ups, and continuously freestyle rapped for 3 minutes.

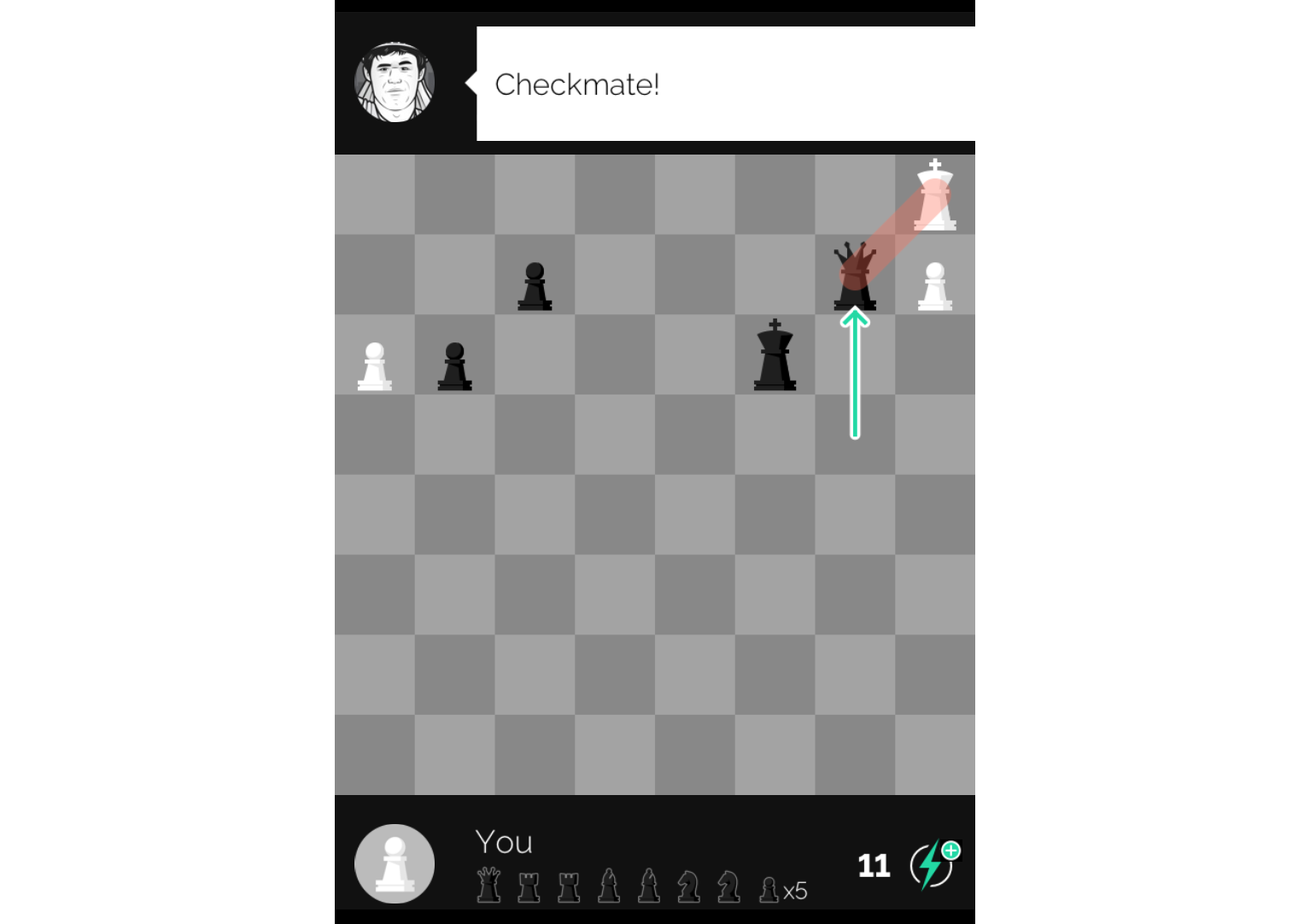

Then, through a sequence of random events, I was offered the chance to sit down with the world chess champion Magnus Carlsen in Hamburg, Germany for an in-person chess game.

I accepted. How could I not?

And so, this became my twelfth and final challenge: With a little over one month of preparations, could I defeat world champion Magnus Carlsen at a game of chess?

Unlike my previous challenges, this one was near impossible.

I had selected all of my other challenges to be aggressively ambitious, but also optimistically feasible in a 30-day timespan. I set the challenges with the hope that I would succeed at 75% of them (I just wasn't sure which 75%).

On the other hand, even if I had unlimited time, this challenge would still be dangerously difficult: The second best chess player in the world has a hard time defeating Magnus, and he's devoted his entire life to the game. How could I possibly expect to have even a remote chance?

Truthfully, I didn't. At least, I didn't if I planned to learn chess like everybody else in the history of chess has.

But, this offered an interesting opportunity: Unlike my other challenges, where success was ambitiously in reach, could I take on a completely impossible challenge, and see if I could come up with a radical approach, rendering the challenge a little less impossible?

I wasn't sure if I could or not. But, I thought it would be fun to try.

I documented my entire process from start to finish through a series of daily blog posts, which are compiled here into a single narrative. In this article, you can relive my month of insights, frustrations, learning hacks, triumphs, and failures as I attempt the impossible.

At the end of this post, I share the video of the actual game against Magnus.

But first, let me walk you through how I prepared for the game, starting on October 1, 2017, when I had absolutely no plan. Just the desire to try.

Note: I was asked not to reveal the details of the actual game until after it happened and it was written about in the Wall Street Journal (the article is also linked at the end of this post). Thus, in order to document my journey via daily blog posts, without spoiling the game, I used the Play Magnus app for a bit of misdirection while framing up the narrative. However, I tried to write the posts so that, after the game happened and readers have knowledge of the match, the posts would still read naturally and normally.

Today, I begin the final month and challenge of my M2M project: Can I defeat world champion Magnus Carlsen at a game of chess?

The most immediate question is "How will you actually be able to play Magnus Carlsen, the #1-rated chess player in the world, at a game of chess?"

Well, Magnus and his team have released an app called Play Magnus, which features a chess computer that is meant to simulate Magnus as an opponent. In fact, Magnus and team have digitally reconstructed Magnus's playing style at every age from Age 5 until Age 26 (Magnus's current age) by using records of his past games.

I will use the Play Magnus app to train, with the goal of defeating Magnus at his current age of 26. Specifically, I hope to do this while playing with the white pieces (which means I get to move first).

My starting point

My dad taught me the rules of chess when I was a kid, and we probably played a game or two per year when I was growing up.

Three years ago, during my senior year at Brown, I first downloaded the Play Magnus app and occasionally played against the computer with limited success.

In the past year, I've played a handful of casual games with equally amateurish friends.

In other words, I've played chess before, but I'm definitely not a competitive player, nor do I have any idea what my chess rating would be (chess players are given numeric ratings based on their performance against other players).

This morning, I played five games against the Play Magnus app, winning against Magnus Age 7, winning and losing against Magnus Age 7.5, and winning and losing against Magnus Age 8.

Then, tonight, I filmed a few more games, winning against Magnus 7, Magnus 7.5, and Magnus 8 in a row, and then losing to Magnus 9. (There's no 8.5 level.)

It seems that my current level is somewhere around Magnus Age 8 or Age 9, which is clearly quite far from Magnus 26.

As reference, Magnus became a grandmaster at Age 13.

An extra week

While every challenge of my M2M project has lasted for exactly a month, this challenge is going to be slightly different, although not by much. Rather than ending on October 31, I will be ending this challenge on November 9 (Updated to November 17).

I'd prefer to keep this challenge strictly contained within the month of October, but I think it's going to be worth bending the format slightly.

Later in the month, I'll explain why I've decided to adjust the format. For now, I can't say much more.

If anything, I can use the extra training time, especially since this challenge is likely my most ambitious.

Anyway, tomorrow, I'll start trying to figure out how I'm going to pull this off.

Yesterday, to test my current chess abilities, I played a few games against the Play Magnus app at different age levels (from Age 7 to Age 9).

While these games gave me a rough sense of my starting point, they don't give me a clear, quantitative way to track my day-over-day progress. Thus, today, I decided to try to compute my numeric chess rating.

As I mentioned yesterday, chess players are given numeric ratings based on their performance against other players. The idea is that, given two players with known ratings, the outcome of a match should be probabilistically predictable.

For example, using the most popular rating system, called Elo, a player whose rating is 100 points greater than their opponent's is expected to win 64% of their matches. If the difference is 200 points, then the stronger player is expected to win 76% of matches.
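
As a rough check on those percentages, here is a minimal sketch of the standard logistic Elo expectation. The function name `expected_score` is illustrative, and the 400-point scale is the conventional Elo constant rather than something stated above. Strictly speaking, the result is an expected score (a draw counts as half a win), which is slightly different from a pure win percentage.

```python
def expected_score(rating_diff: float) -> float:
    """Expected score for the player who is `rating_diff` points stronger.

    Uses the conventional logistic Elo curve with a 400-point scale.
    """
    return 1.0 / (1.0 + 10 ** (-rating_diff / 400.0))


for diff in (100, 200):
    # Prints 0.64 for a 100-point edge and 0.76 for a 200-point edge.
    print(diff, round(expected_score(diff), 2))
```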

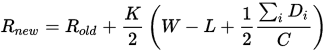

With each win or loss in a match, a player's rating is updated, based on the following (semi-arbitrary, but agreed upon) Elo equation:

Rnew and Rold are the player's new and old rating respectively, Di is the opponent's rating minus the player's rating, W is the number of wins, L is the number of losses, C = 200 and K = 32.
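
One formula consistent with these definitions is the linear approximation of the Elo update, sketched below. This is an assumption about which equation is meant, based only on the variables and constants listed; the sum runs over the opponents faced.

```latex
R_{\text{new}} = R_{\text{old}} + \frac{K}{2}\left(W - L + \frac{1}{2}\sum_{i}\frac{D_i}{C}\right)
```

Under that assumption, with K = 32 and C = 200, a single win against an equally rated opponent (D_i = 0) adds 16 points, and a win against an opponent rated 200 points higher adds 24.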

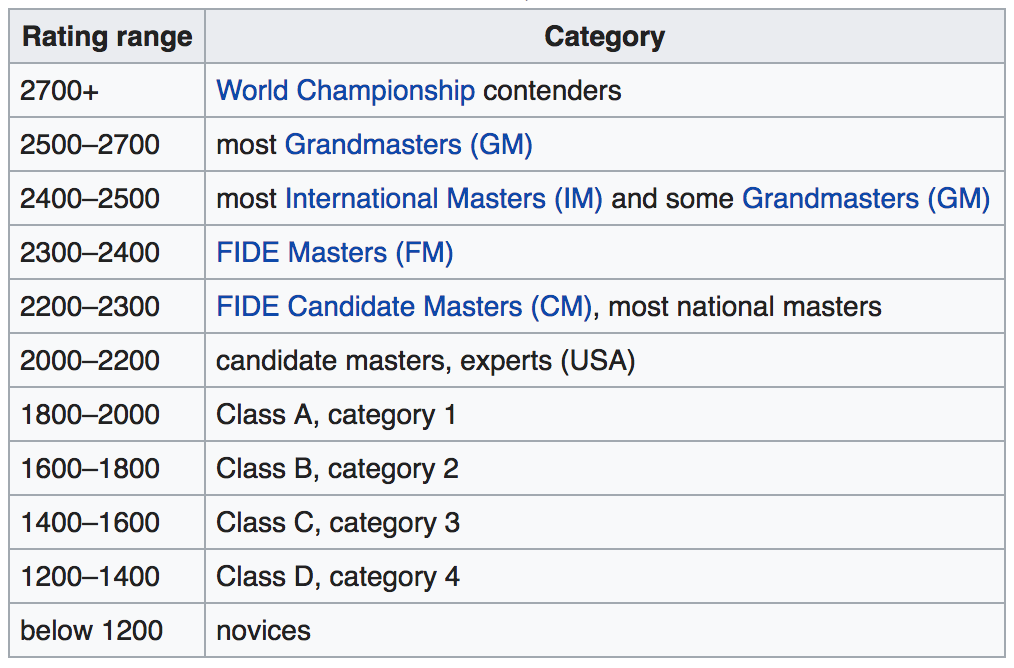

More interestingly, here's how particular ratings correspond to categories of players (according to Wikipedia). Notably, a grandmaster has a rating around 2500 and a novice is below 1200.

Additionally, according to the United States Chess Federation, a beginner is usually around 800, a mid-level player is around 1600, and a professional, around 2400.

Magnus is currently rated at 2826, and has achieved the highest rating ever at 2882 in May 2014.

My current rating is somewhere around 1100, putting me squarely in the novice category. To determine this rating, I played a number of games today on Chess.com, which maintains and computes ratings for all the players on the site.

Chess.com is actually really cool: At the click of a button, you are instantly matched with another chess player of similar skill level from anywhere in the world.

Chess.com doesn't use the Elo rating system, but, according to the forums, my Chess.com rating should be roughly equal to my Elo rating. In other words, I'm definitely an amateur.

So, over the next month, I just need to figure out how to boost my rating from 1100 to 2700–2800.

I'm still not sure exactly how to do this, or if it's even possible (I'm almost certain no one has ever made this kind of jump, even in the span of five years), but I'm going to give it my best shot.

The good news about starting in amateur territory is there's really only one direction to go…

"Doesn't this seem like a bad way to finish your project?" say most of my friends when discussing this month's challenge. "It seems like it's effectively impossible. Isn't it anticlimactic if you fail on the last challenge, especially after eleven months of only successes?"

These friends do have a point: This month's challenge (defeating Magnus Carlsen at a game of chess) is dancing on the boundary between what's possible and what's not.

However, for this exact reason, I see this month as the best possible way to finish off this project. Let me explain…

There are two ways you can live your life: 1. Always succeeding by playing exclusively in your comfort zone and shying away from the boundary of your personal limits, or 2. Aggressively pursuing and finding your personal limits by hitting them head on, resulting in what may be perceived as "failure".

In fact, failure is the best possible signal, as it is the only way to truly identify your limits. So, if you want to become the best version of yourself, you should want to hit the point of failure.

Thus, my hope with this project was to pick ambitious goals that rubbed right up against this failure point, pushing me to grow and discover the outer limits of my abilities.

In this way, I failed: My "successful" track record means that I didn't pick ambitious enough goals, and that I left some amount of personal growth on the table.

In fact, most of the time, when I completed my goal for the month, I was weirdly disappointed. For example, here's a video where I land my very first backflip and then try to convince my coach that it doesn't count.

In other words, my drug of choice is the constant, day-over-day pursuit of mastery, not the discrete moment in time when a particular goal is reached. As a result, I can maximize the amount of this drug I get to enjoy by taking my pursuits all the way to the boundary of my abilities and to the point of failure.

Therefore, while this month's challenge is a bit far-fetched, it's the realest embodiment of what this project is all about. By attempting to defeat Magnus as my last challenge, I hope to fully embrace the idea that failure is the purest signal of personal growth.

Now, with that said, I'm going to do everything I possibly can to beat Magnus. I'm not planning for failure. I'm playing for the win.

But, I do understand what I'm up against: Even the second best chess player in the world struggles to win against Magnus.

So for me, it isn't about winning or losing, but instead, it's about the pursuit of the win. It's about the fight.

The outcome doesn't dictate success. The quality of the fight does.

And this month, I'm set up for the best and biggest fight of the entire year.

In the past few days, I've played many games of chess on Chess.com and spent a lot of time researching the game, approaches to learning, etc. The hope is that I can find some new insight that will enable me to greatly accelerate my learning speed.

So far, I've yet to find this insight.

Chess is a particularly hard game "to fake" because, in almost all cases, the better player is simply the one who has more information.

In fact, in a famous study by Adriaan de Groot, it was shown that expert chess players and weaker players look forward or compute lines approximately the same number of moves ahead, and that these players evaluate different positions, to a similar depth of moves, at roughly similar speeds.

In other words, via this finding, de Groot suggests that an expert's advantage does not come from her ability to perform brute force calculations, but instead, from her body of chess knowledge. (While it has since been shown that some of de Groot's claims aren't as strong as originally thought, this general conclusion has held up.)

In this way, chess expertise is mostly a function of the expert's ability to identify, often at a glance, a huge corpus of chess positions and recall or derive the best move in each of these positions.

Thus, if I choose to train in the traditional way, I would essentially need to find some magical way to learn and internalize as many chess positions as Magnus has in his over 20 years of playing chess. And this is why this month's challenge seems a bit far-fetched.

But, what if I didn't rely on a large knowledge base? What if I instead tried to create a set of heuristics that I could use to evaluate theoretically any chess position?

After all, this is how computers play chess (via positional computation), and they are much better than humans.

Could I invent a system that lets me compute like a computer, but that can work with the processing speeds of my human brain?

There's a 0% chance that I'm the first person to consider this kind of approach, so maybe not. But, there's a lot of data out there (i.e. I have downloaded records of every competitive chess match Magnus has ever played), so perhaps something can be worked out.

Clearly, I can't play by the normal chess rules if I want any shot of competing at the level of Magnus. (By "normal chess rules" I mean the normal way people learn chess. If I could just bend the actual rules of the game, i.e. cheating, then this challenge would definitely be easier…)

I'm skeptical that some magical analytical chess method exists, but it's worth thinking about it for a few days, and seeing if I can make any progress.

Hopefully, soon, I'll be able to formulate an actual training approach for this month. Right now, I'm still floating around in the discovery phase.

As soon as I have an interesting training idea, I'll be sure to share it.

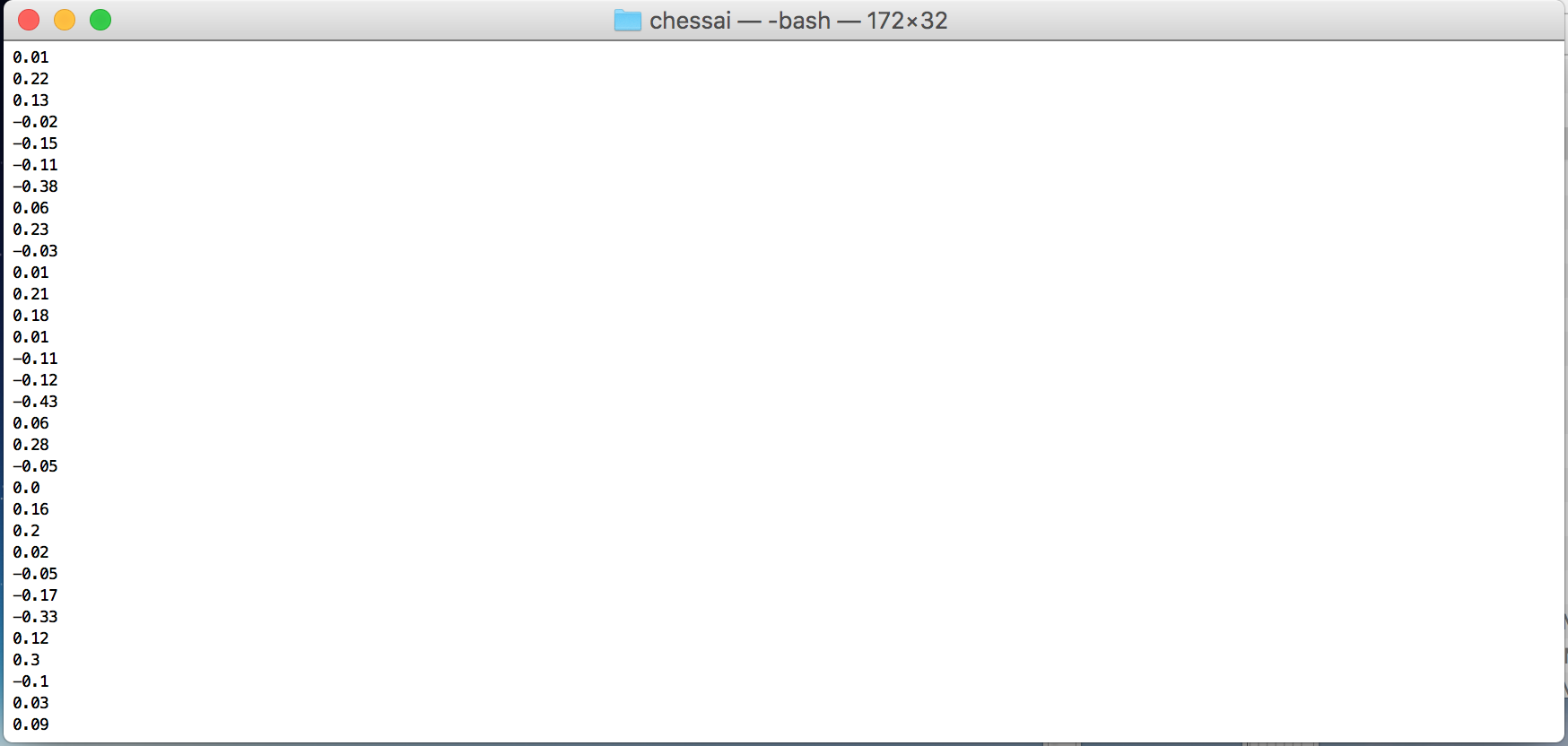

Yesterday, I wondered if I could sidestep the traditional approach to learning chess, and instead, develop a chessboard evaluation algorithm that I could perform in my head, effectively transforming me into a slow chess computer.

Today, I've thought through how I might do this, and will use this post as the first of a few posts to explore these ideas:

Method 1: The extreme memory challenge

Chess has a finite number of states, which means that the 32 chess pieces can only be arranged on the 64 squares in so many different ways.

Not only that, but for each of these states, there exists an associated best move.

Therefore, theoretically, I can become the best chess player in the world entirely through brute force, simply memorizing all possible pairs of chessboard configurations and associated best moves.

In fact, I already have an advantage: Back in November 2016, I became a grandmaster of memory, memorizing a shuffled deck of playing cards in one minute and 47 seconds. I can simply use the same mnemonic techniques to memorize all chessboard configurations.

If I wanted to be smart about it, I could even rank chessboard configurations by popularity (based on records of chess matches) or likelihood (based on the number of ways the configuration can be reached). By doing so, I can start by memorizing all the most popular chessboard configurations, and then, proceed toward the least likely ones.

This way, if I run out of time, at least I'll have memorized the most useful pairs of configurations and best moves.

But, this "running out of time" problem turns out to be a very big problem…

It's estimated that there are on the order of 10^43 possible chessboard configurations. So, even if I could memorize one configuration every second, it would still take me slightly less than one trillion trillion trillion years (3.17 x 10^35 years) to memorize all possible configurations.
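
The arithmetic behind that figure can be checked in a few lines. This is a small sketch that assumes the order-of-magnitude estimate of 10^43 positions and a 365-day year; the variable names are illustrative.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # 31,536,000 seconds, ignoring leap years

positions = 10 ** 43          # assumed order-of-magnitude count of configurations
seconds_per_position = 1      # one memorized configuration per second

years = positions * seconds_per_position / SECONDS_PER_YEAR
print(f"{years:.2e} years")   # about 3.17e+35, a bit under 10^36 (a trillion trillion trillion)
```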

I'm trying to contain this challenge to about a month, so this is pushing it a bit.

Also, if I had one trillion trillion trillion years to spare, I might as well learn chess the traditional way. It would be much faster.

Method 2: Do it like a computer

Even computers don't have the horsepower to use the brute force approach. Instead, they need to use a set of algorithms that attempt to approximate the brute force approach, but in much less time.

Here's generally how a chess computer works…

For any given chessboard configuration, the chess computer will play every possible legal move, resulting in a number of new configurations. Then, for each of these new configurations, the computer will play every legal move again, and so on, branching into all the possible future states of the chessboard.

At some point, the computer will stop branching and will evaluate each of the resulting chessboards. During this evaluation, the computer uses an algorithm to compute the relative winning chances of white compared to black based on the particular board configuration.

Then, the computer takes all of these results back up the tree, and determines which first order move produced the most future states with strong relative winning chances.

Finally, the computer plays this best move in the actual game.
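
The branch, evaluate, and back-up steps above can be sketched on a toy tree. This example is not a chess engine: the tree is a hypothetical nested list whose leaves are made-up evaluation scores from White's point of view. It uses minimax, the usual way of carrying leaf scores back up the tree, which assumes each side picks its best reply (a slightly stricter rule than counting how many future states look good).

```python
def minimax(node, maximizing):
    """Back up leaf evaluations through a tree of nested lists.

    A leaf is a number (the evaluation of a final board); an inner node
    is a list of child nodes, one per legal move.
    """
    if not isinstance(node, list):
        return node  # stop branching and use the evaluation
    values = [minimax(child, not maximizing) for child in node]
    return max(values) if maximizing else min(values)


def best_move(root):
    """Index of the first-level move with the best backed-up score for White."""
    scores = [minimax(child, False) for child in root]  # Black replies next
    return max(range(len(scores)), key=scores.__getitem__)


# Three candidate moves for White, each with two possible replies by Black.
toy_tree = [[3.0, 5.0], [2.0, 9.0], [0.0, -1.0]]
print(best_move(toy_tree))  # 0: its worst case (3.0) beats the others' worst cases
```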

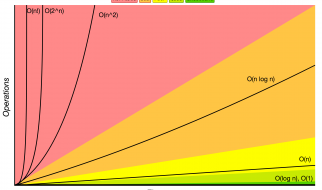

In other words, the computer's strength is based on 1. How deep the computer travels in the tree, and 2. How accurate the computer's evaluation algorithm is.

Interestingly, essentially all chess computers optimize for depth, and not evaluation. In particular, the makers of chess computers will try to design evaluation algorithms that are "good enough", but really fast.

By having an extremely fast evaluation algorithm, the chess program can handle many more chess configurations, and thus, can explore many more levels of the tree, which grows exponentially in size level by level.

So, if I wanted to exactly replicate a chess computer, but in my brain, I would need to be able to extrapolate out to, remember, and quickly evaluate thousands of chessboard configurations every time I wanted to make a move.

Because, for these kinds of calculations, I'm much slower than a computer, I again would simply run out of time. Not in the game. Not in the month. But in my lifetime many times over.

Thus, unlike computers, I can't rely on depth, which leaves only one option: Learn to compute evaluation algorithms in my head.

In other words, can I learn to perform an evaluation algorithm in my head, and, if I can, can this algorithm be effective without going past the first level of the tree?

The answer to the second question is yes: Recently, using deep learning, researchers have built effective chess computers that only evaluate one level deep and perform just as well as the best chess computers in the world (although they are about 10x slower).

Perhaps, I can figure out how to convert this evaluation algorithm into something that I can computationally perform in my head à la some kind of elaborate mental math trick.

I have some ideas about how to do this, but I need to experiment a bit further before sharing. Hopefully, by tomorrow, I'll have something to share.

Yesterday, I determined that my best chance of defeating Magnus is learning how to numerically compute chessboard evaluations in my head. Today, I will begin to describe how I plan to do this.

How a deep learning-based chess computer works

It's probably useful to understand how a computer uses deep learning to evaluate chess positions, so let's start here…

Bitboard representation

The first step is to convert the physical chessboard position into a numerical representation that can be mathematically manipulated.

In every academic paper I've read, the chessboard is converted into its bitboard representation, which is a binary string of size 773. In other words, the chessboard is represented as a string of 773 1's and 0's.

Why 773?

Well, there are 64 squares on a chessboard. For each square, we want to encode which chess piece, if any, is on that square. There are 6 different chess piece types of 2 different colors. Therefore, we need 64 x 6 x 2 = 768 bits to represent the entire chess board. We then use five more bits to represent the side to move (one bit, representing White or Black) and the castling rights (four bits for White's kingside, White's queenside, Black's kingside, and Black's queenside).

Thus, we need 773 binary bits to represent a chessboard.
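
To make the 773-bit layout concrete, here is a minimal sketch of one possible encoding. The exact bit ordering (12 blocks of 64 squares, then side to move, then castling rights) and all function names are assumptions for illustration; the papers may order the bits differently.

```python
PIECE_TYPES = "PNBRQK"  # pawn, knight, bishop, rook, queen, king


def square_index(square):
    """Map an algebraic square name to 0..63 ('a1' -> 0, 'h8' -> 63)."""
    file = ord(square[0]) - ord("a")
    rank = int(square[1]) - 1
    return rank * 8 + file


def encode(pieces, white_to_move, castling_rights):
    """Return a list of 773 bits describing one position.

    pieces: dict mapping a square name to (color, piece_type),
            for example {"e1": ("w", "K")}.
    castling_rights: four booleans (White kingside, White queenside,
                     Black kingside, Black queenside).
    """
    bits = [0] * 773
    for square, (color, piece) in pieces.items():
        block = (0 if color == "w" else 6) + PIECE_TYPES.index(piece)
        bits[block * 64 + square_index(square)] = 1  # 12 blocks x 64 squares
    bits[768] = int(white_to_move)
    for offset, allowed in enumerate(castling_rights):
        bits[769 + offset] = int(allowed)
    return bits


def starting_pieces():
    """Piece placement for the standard starting position."""
    pieces = {}
    for file, piece in zip("abcdefgh", "RNBQKBNR"):
        pieces[file + "1"] = ("w", piece)
        pieces[file + "2"] = ("w", "P")
        pieces[file + "7"] = ("b", "P")
        pieces[file + "8"] = ("b", piece)
    return pieces


start = encode(starting_pieces(), white_to_move=True, castling_rights=(True,) * 4)
print(len(start), sum(start))  # 773 bits, of which 37 are set (32 pieces + 1 + 4)
```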

The simple evaluation algorithm

Once the chessboard is converted into its numerical form (which we will denote as the vector x), we want to perform some function on x, called f(x), so that we get a scalar (single number) output y that best approximates the winning chances of white (i.e. the evaluation of the board). Of course, y can be a negative number, signifying that the position is better for black.

The simplest version of this function y = f(x) would be y = wx, where w is a vector of 773 weights that, when multiplied with the bitboard vector, results in a single number (which is effectively a weighted sum of the bitboard bits).

This may be a bit confusing, so let's understand what this function means for actual chess pieces on an actual chessboard…

This function is basically saying "If a white queen is on square d4 of a chessboard, then add or subtract some corresponding amount from the total evaluation score. If a black king is on square c2, then add or subtract some other corresponding amount from the total evaluation score" and so on for all permutations of piece types of both colors and squares on the chessboard.
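
A tiny sketch of this linear evaluation, under stated assumptions: the weights are random placeholders rather than trained values, and the bit position chosen for "white queen on d4" (index 283) follows a hypothetical layout of 12 blocks of 64 squares with White's pieces first.

```python
import random

random.seed(0)
# Placeholder weights; a real evaluation function would learn these from games.
weights = [random.uniform(-1.0, 1.0) for _ in range(773)]


def linear_eval(bits, weights):
    """y = w . x: the sum of the weights whose corresponding bit is 1."""
    return sum(w * b for w, b in zip(weights, bits))


# A board with a single white queen on d4.
# Hypothetical layout: block 4 (white queen) * 64 + square 27 (d4) = 283.
bits = [0] * 773
bits[283] = 1

score = linear_eval(bits, weights)
print(score == weights[283])  # True: only the queen-on-d4 weight contributes
```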

By the way, I haven't mentioned it yet, so now is a good time to do so: On an actual chessboard, each square can be referred to by a letter from a to h and a number from 1 to 8. This is called algebraic chess notation. It's not super important to fully understand how this notation is used right now, but this is what I mean by "d4" and "c2" above.

Anyway, this evaluation function, as described above, is clearly too crude to correctly approximate the true evaluation function. (The true evaluation function is just the functional form of the brute force approach. Yesterday, we determined this approach to be impossible for both computers and humans.)

So, we need something a little bit more sophisticated.

The deep learning approach

Our function from above mapped the input bits from the bitboard representation directly to a final evaluation, which ends up being too simple.

But, we can create a more sophisticated function by adding a hidden layer in between the input x and the output y. Let's call this hidden layer h.

So now, we have two functions: One function, f₁(x) = h, that maps the input bitboard representation to an intermediate vector called h (which can theoretically be of any size), and a second function, f₂(h) = y, that maps the vector h to the output evaluation y.

If this is not sophisticated enough, we can keep adding more intermediate vectors, h₁, h₂, h₃, etc., and the functions that map from intermediate step to intermediate step, until it is. Each intermediate step is called a layer and, the more layers we have, the deeper our neural network is. This is where the term "deep learning" comes from.
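
Here is a minimal sketch of the two-step evaluation with one hidden layer, using tiny made-up sizes (3 inputs, 2 hidden values) instead of 773 and 2048. The names `mat_vec` and `evaluate` and all the numbers are illustrative. Note that networks in practice typically also apply a nonlinear function between layers; that step is left out here to mirror the description above.

```python
def mat_vec(matrix, vector):
    """Multiply a matrix (a list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def evaluate(x, W, w):
    """Two-step evaluation: input x -> hidden vector h -> scalar y."""
    h = mat_vec(W, x)                             # first function: x -> h
    return sum(wi * hi for wi, hi in zip(w, h))   # second function: h -> y


x = [1, 0, 1]                 # toy "bitboard" with 3 bits
W = [[0.5, -1.0, 2.0],
     [1.0, 0.0, -0.5]]        # 2 x 3 matrix of made-up weights
w = [2.0, -1.0]               # output weights

print(evaluate(x, W, w))      # h = [2.5, 0.5], so y = 2.0*2.5 - 1.0*0.5 = 4.5
```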

In some of the papers I read, the evaluation functions only had three layers, and in other papers, the evaluation function had nine layers.

Obviously, the more layers, the more computations, but also, the more accurate the evaluation (theoretically).

Number of mathematical operations

Interestingly, even though these evaluation functions are sophisticated, the underlying mathematical operations are very simple, only requiring addition and multiplication. These are both operations that I can perform in my head, at scale, which I'll discuss in much greater depth in the next couple of days.

However, even if the operations themselves are simple, I would still need to perform thousands of operations to execute these evaluation functions in my brain, which could still take a lot of time.

Let's figure out just how many operations I would need to perform, and just how long that would take me.

Again, suspend your disbelief about my ability to perform and keep track of all these operations. I'll explain how I plan to do this soon.

How much computation is required?

Counting the operations

Let's say that I have an evaluation function that contains only one hidden layer, which has a width of 2048. This means that the function from inputs x to the internal vector h, f₁(x) = h, converts a 773-digit vector into a 2048-digit vector, by multiplying x by a matrix of size 773 x 2048. Let's call this matrix W. (By the way, I picked this setup because the chess computer, Deep Pink, uses intermediate layers of size 2048.)

To execute f₁ in this way requires 773 x 2048 = 1,583,104 multiplications and (773 - 1) x 2048 = 1,581,056 additions, totaling 3,164,160 operations.

Then, f₂ converts h to the output scalar y, by multiplying h by a 2048-digit vector, called w, which requires 2048 multiplications and 2047 additions, or 4095 total operations.

Thus, this evaluation function would require that I perform 3,168,255 total operations.

Counting the memory capacity required

To perform this mental calculation, not only will I need to execute these operations, but I'll also need to have enough memory capacity.

In particular, I'll need to have pre-memorized all the values of matrix W, which is 1,583,104 numbers, and vector w, which is 2048 numbers. I would also need to remember h while computing f₁, so I can use the result to compute f₂, which requires that I remember another 2048 numbers.

Let's now convert this memorization effort to specific memory operations.

For the 1,583,104 weights of W and the 2048 weights of w, I would only require a read operation from my memory for each (during game-time computation).

For the 2048 digits of h, I would require both a write operation and a read operation for each.

Thus, I would require 1,587,200 read operations and 2048 write operations, or 1,589,248 in total.
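
The counts above can be reproduced with a short script. This is a sketch of the same bookkeeping, assuming one multiplication per weight, one fewer addition than terms in each sum, one read per stored number, and one write per hidden value; the function name `dense_cost` is illustrative.

```python
INPUT_SIZE = 773
HIDDEN_SIZE = 2048


def dense_cost(input_size, hidden_size):
    """Operation counts for one evaluation with a single hidden layer."""
    # First step (x -> h), then second step (h -> y).
    mults = input_size * hidden_size + hidden_size
    adds = (input_size - 1) * hidden_size + (hidden_size - 1)
    # Reads: every weight of W, every weight of w, and every value of h.
    reads = input_size * hidden_size + hidden_size + hidden_size
    writes = hidden_size  # storing h once
    return {"math": mults + adds, "memory": reads + writes}


cost = dense_cost(INPUT_SIZE, HIDDEN_SIZE)
print(cost)  # {'math': 3168255, 'memory': 1589248}
```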

How long would this take?

We now know that I would need to execute 3,168,255 mathematical operations and 1,589,248 memory operations to evaluate a given chess position. How long exactly would this take?

This of course depends on the size of the additions and multiplications, and the sizes of the numbers being stored in memory. I'll talk more about this sizing soon, but for now, I'll just provide my estimates.

I estimate that I could perform one mathematical operation in 3 seconds and one memory operation in 1 second. Thus, I could evaluate one chess position in 11,094,013 seconds or a little over four months.

Clearly, this is a long time (and doesn't factor in the fact that I can't, as a human, process continuously for 4 months), but we're getting closer.

Of course, in a given chess game, I would need to make more than one evaluation. Since I'm a pretty novice player, I estimate that I would need to evaluate ~10–15 options per move.

Since the average chess game is estimated to be 40 moves, this would be about 500 evaluations per game.

Therefore, to play an entire chess game using this method, I would need 2,000 months or 167 years to play the game.
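
For reference, the time estimate works out as follows. This sketch takes the per-operation timings and the 40-move, roughly 12.5-options-per-move figures from above as given, and assumes 30-day months; the variable names are illustrative.

```python
MATH_OPS = 3_168_255
MEMORY_OPS = 1_589_248
SECONDS_PER_MATH_OP = 3
SECONDS_PER_MEMORY_OP = 1

seconds_per_eval = MATH_OPS * SECONDS_PER_MATH_OP + MEMORY_OPS * SECONDS_PER_MEMORY_OP
days_per_eval = seconds_per_eval / 86_400
months_per_eval = days_per_eval / 30          # assuming 30-day months

evaluations_per_game = 40 * 12.5              # 40 moves, ~10-15 options each

# Rounding to 4 months per evaluation, as in the text:
months_per_game = evaluations_per_game * 4
years_per_game = months_per_game / 12

print(seconds_per_eval)                       # 11094013
print(round(months_per_eval, 1), "months per evaluation")
print(months_per_game, "months, or about", round(years_per_game), "years per game")
```

Without rounding down to four months per evaluation, the total comes out somewhat higher, but the order of magnitude is the same.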

Of course, this is still problematic, but way less problematic than yesterday's conclusion of one trillion trillion trillion years. In fact, I'm getting closer to an approach that I could actually execute in my lifetime (let's say I have 75 years left to live).

The next step

I've made two assumptions above, one of which is good news for me and one of which is bad news for me.

First, the bad news: It would take me 167 years to play one chess game, assuming that my one-level deep evaluation function was sufficiently good. I suspect that one level isn't enough, and that at least two or three levels would be needed, if not more.

The good news is that I'm not a computer, which means there is no reason I need to use bitboard representation as my starting point, potentially allowing me to reduce the size of the problem substantially.

Computers like 1's and 0's, and don't care too much about "big numbers" like 773, which is why bitboard representation is optimal for computers.

But, for me, I can deal with any two- or three-digit number just as well as I can deal with a 1 or 0 from a memory perspective and almost as well as I can from a computational perspective.

I think I could squash my chessboard representation to under 40 digits, which would significantly reduce the number of operations necessary (although, may slightly increase the operation time).

In the next few days, I'll discuss how I plan to reduce the size of the problem and optimize the evaluation function for my human brain, so that I can perform all the necessary evaluations in a reasonable timeframe.

Last night, I climbed into bed, ready to go to sleep. As soon as I closed my eyes, I realized that I made a major mistake in my calculations from yesterday.

Well, technically I didn't make a mistake. The chessboard evaluation algorithm I described yesterday does require 3,168,255 math operations and 1,589,248 memory operations. However, I failed to recognize that most of these operations are irrelevant.

Let me explain…

If you haven't yet read yesterday's post, it would be very helpful for you to read that first.

Addressing my mistake

Yesterday, I introduced bitboard representation, which is a way to completely describe the state of a chessboard via 773 1's and 0's. Bitboard representation is the input vector to my evaluation function.

When calculating the number of math operations this evaluation function would require, I overlooked the fact that only a maximum of 37 digits out of the 773 in bitboard representation can be 1's (32 pieces, the side to move, and four castling rights), and the rest are 0's.

This is important for two reasons:

- The function, f₁(x), that converts the input bitboard representation x into the hidden vector h, doesn't actually require any multiplication operations, since either I'm multiplying by 0 (in which case, the term can be completely ignored), or I'm multiplying by 1 (in which case, the original term can be used unchanged). In this way, all 1,583,104 multiplication operations that I estimated yesterday are no longer necessary.

- f₁(x) only requires addition operations between all the 1's in the bitboard representation, which we've now correctly capped at 37. Therefore, instead of 1,581,056 addition operations as estimated yesterday, the algorithm only requires 36 x 2048 = 73,728 operations.

f₂ still requires the same 4095 operations, which means that, in total, the evaluation algorithm only requires 77,823 math operations.

This is a 40x improvement from yesterday.

Additionally, the memory operations scale down in the same way: For f, I only need (37 x 2048) + 2048 = 77,824 read operations, and 2048 write operations, totaling 79,872 memory operations.

Therefore, in total, the evaluation algorithm described yesterday actually only requires 157,695 total operations.

Computing the new time requirements

If I again assume that I can perform one mathematical operation in 3 seconds and one memory operation in 1 second, I would be able to evaluate one chess position in 313,341 seconds or 3.6 days, which is down considerably from yesterday's four months.

Still, if we assume that I would need to execute 500 evaluations per game, I would need 3.6 days x 500 evaluations = 5 years to play one game.
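
The shortcut described above (skip every 0 bit, and just add the weights for the 1 bits) can be sketched on a toy example and then scaled to the real sizes. The function names and the small integer weights are illustrative; the read and write counts follow the accounting used in the text.

```python
def dense_hidden(x, W):
    """Hidden vector via full multiply-and-add over every input bit."""
    return [sum(W[j][i] * x[i] for i in range(len(x))) for j in range(len(W))]


def sparse_hidden(active, W):
    """Same result using additions only, over the indices whose bit is 1."""
    return [sum(W[j][i] for i in active) for j in range(len(W))]


# Toy check: 5 input bits, 2 hidden values, made-up integer weights.
W = [[1, 2, 3, 4, 5],
     [6, 7, 8, 9, 10]]
x = [0, 1, 0, 0, 1]
active = [i for i, bit in enumerate(x) if bit == 1]
print(dense_hidden(x, W) == sparse_hidden(active, W))  # True

# Scaling up to the sizes in the text.
HIDDEN_SIZE = 2048
MAX_ACTIVE_BITS = 37

math_ops = (MAX_ACTIVE_BITS - 1) * HIDDEN_SIZE + (HIDDEN_SIZE + HIDDEN_SIZE - 1)
reads = MAX_ACTIVE_BITS * HIDDEN_SIZE + HIDDEN_SIZE   # as counted in the text
writes = HIDDEN_SIZE
memory_ops = reads + writes

seconds_per_eval = math_ops * 3 + memory_ops * 1
days_per_eval = seconds_per_eval / 86_400
print(math_ops, memory_ops, seconds_per_eval)         # 77823 79872 313341
print(round(days_per_eval, 1), "days per evaluation")
```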

5 years is still too long, but at least now I can complete one game of (computationally beautiful) chess in my lifetime.

But, I think I can do better…

Using "Threshold Search"

Yesterday, I assumed that I would need to evaluate 10–15 options per move in order to find the optimal option. In other words, I would perform 15 evaluations, determine which move led to the greatest evaluation score, and then play that move in the game.

However, what if it's unnecessary to find the absolute best move? What if it's only necessary to find a move with an evaluation above a certain threshold?

Then, for a given move, I can stop evaluating once I find the first board position that surpasses this threshold. Theoretically, I could pick this board position on my first try (although, I wouldn't be able to do this consistently. Otherwise, I would just be the world's greatest chess player).

Though, I estimate I could find a threshold-passing move in 2.5 evaluations on average.

Therefore, if I'm able to implement this thresholding into my evaluation algorithm, I can reduce the number of evaluations per game from 500 to 100 (40 moves x 2.5 evaluations).
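The threshold idea can be sketched in a few lines. This is only an illustration: the candidate moves and scores are made up, the name `threshold_search` is hypothetical, and the fallback to the best move seen (when nothing clears the threshold) is my own assumption rather than part of the plan above.

```python
def threshold_search(candidate_moves, evaluate, threshold):
    """Return the first candidate whose evaluation clears the threshold.

    Also returns how many evaluations were spent. If nothing clears the
    threshold, fall back to the best move seen (an assumption of this sketch).
    """
    best_move, best_score = None, float("-inf")
    for count, move in enumerate(candidate_moves, start=1):
        score = evaluate(move)
        if score > threshold:
            return move, count  # stop early: this move is good enough
        if score > best_score:
            best_move, best_score = move, score
    return best_move, len(candidate_moves)


# Made-up scores for three candidate moves, in the order they would be tried.
scores = {"Nf3": 0.1, "d4": 0.4, "e4": 0.5}
move, evaluations = threshold_search(["Nf3", "d4", "e4"], scores.get, threshold=0.3)
print(move, evaluations)  # d4 2
```

Note that the search stops at d4 even though e4 scores higher, which is exactly the trade-off: fewer evaluations in exchange for a move that is good rather than best.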

But, I can still do better...

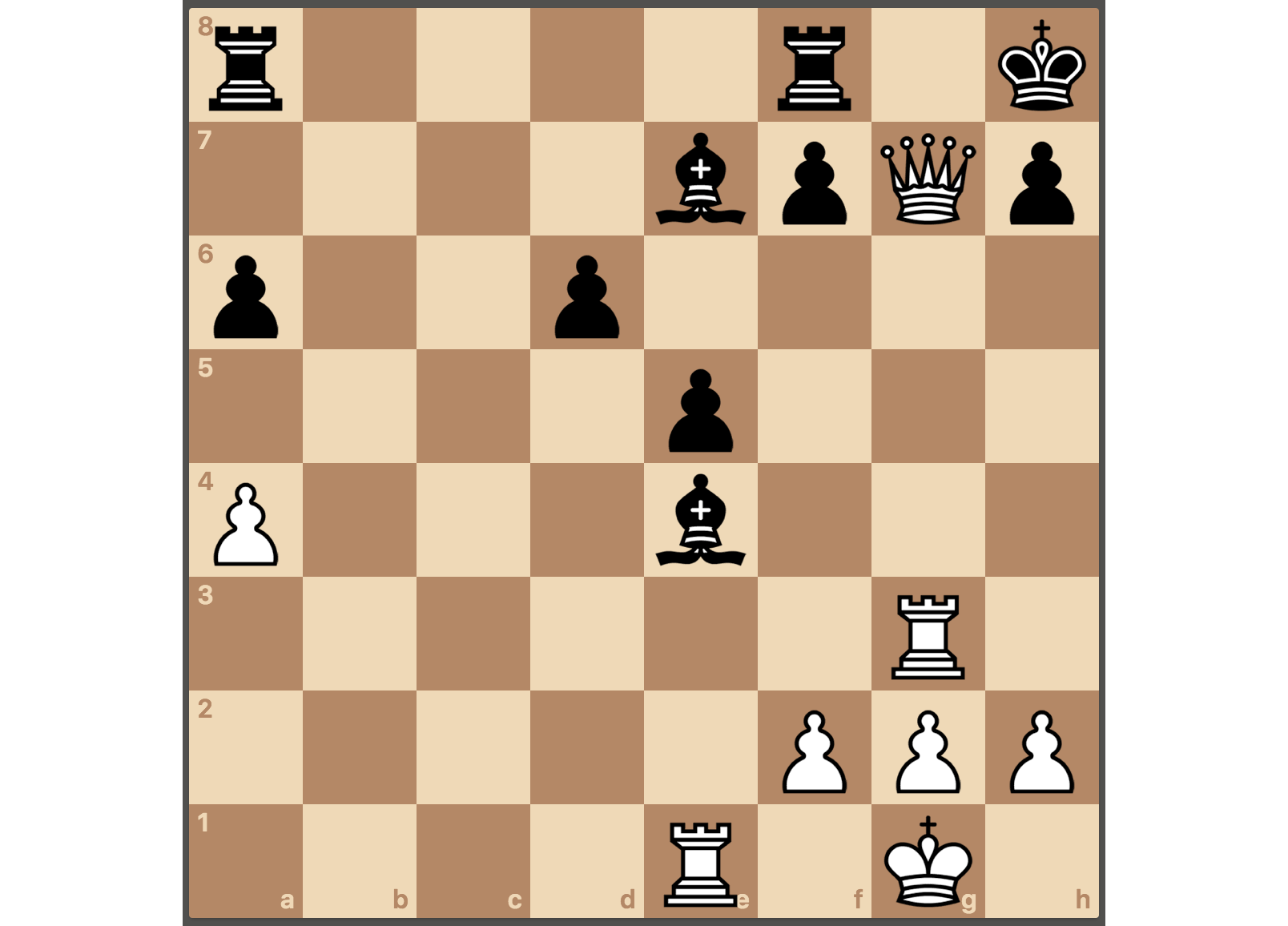

Playing the opening

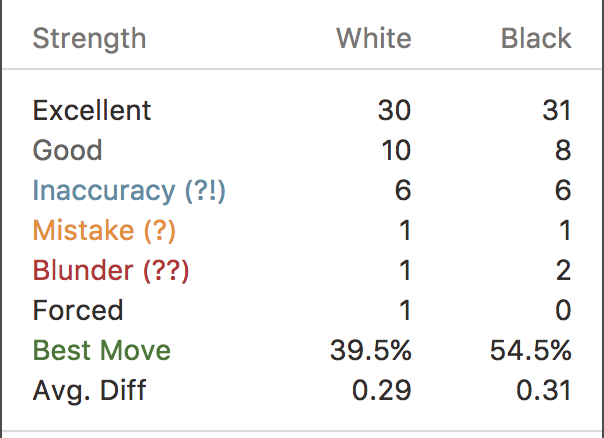

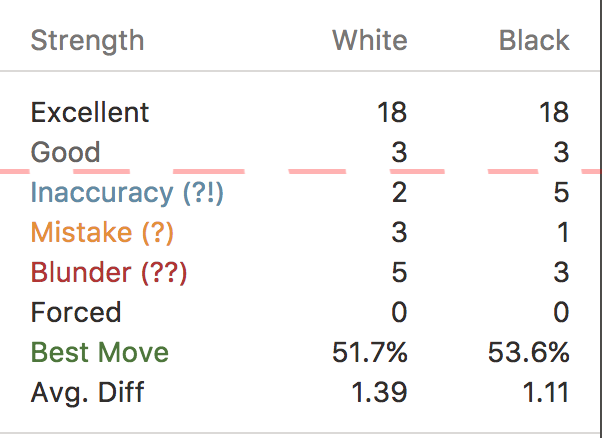

On Chess.com, I'm able to run my games through a real chess computer that analyzes each of my moves. The computer categorizes the moves into "Excellent" (for when I play the absolute best move), "Good" (for when I play a move above the threshold, but not the best move), and "Inaccuracy", "Mistake", and "Blunder" (for when I screw up).

You'll notice that 8 out of my 49 moves were below the threshold (I was playing White).

Interestingly though, going through all of my games, I seem to play moves exclusively in the Excellent and Good categories until about move 12-15, at which point the game becomes too complex and I'm in trouble.

I've also found that towards the end of the game, I make very few below-threshold moves.

Based on this data, let's say that I only need to perform my mental evaluations for 24 out of the 40 moves found in an average game.

Thus, in one game, I'd only require 24 x 2.5 = 60 total evaluations, and at 3.6 days per evaluation, I could complete a full game in 7 months.

This is getting closer, but I can still do better...

Finding reductions via "Update Operations"

Right now, every time I'm doing an evaluation, I'm starting the computation over from scratch, which isn't actually necessary, and is far from the most efficient approach.

Particularly, the hidden layer h, the output of f (which is the most computationally costly step of the algorithm), is nearly identical to the previous h computed in the previous evaluation.

In fact, if the new evaluation is the first for a particular move, then h only differs by the move the opposing color made, and the move under consideration. (Keep in mind, because I'm using the thresholding approach, the move corresponding to the last evaluation I computed, and thus, the last h I computed, was actually executed in the game, so I have an accurate starting point in my working memory.)

Thus, in this case, each of the 2048 values of h needs to be updated with four operations: One corresponding to the move made by the opposition, one to cancel out where the opposition's piece was prior to the move, one corresponding to the move under consideration, and one to cancel out where this piece was prior to consideration.

Thus, to update h, only 4 x 2048 = 8192 math operations (additions) are necessary.

If I prepare a certain opening as White, and Magnus responds with the theoretically determined moves, I can come into the game having pre-memorized the first h vector I'll need, thus only ever having to perform updates.

Of course, if the game veers off script, I'll need to execute a full evaluation for the first move, but never after that.

Thus, in total, since 4095 operations are still required to convert h to the output y, 8192 + 4095 = 12,287 math operations are needed for an update evaluation.

The memory capacity required also scales down with this update approach, so that only 8192 x 2 + 4096 = 20,480 memory operations are needed per evaluation.

If the evaluation is the second, third, etc. for a particular move, then h only differs by the move previously under consideration and the move currently under consideration. Thus, again, each of the 2048 values of h needs to be updated with four operations.

So, this kind of update also requires 12,287 math operations and 20,480 memory operations.
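Here is a minimal sketch of this update idea, using toy sizes and made-up weights. It assumes simple moves where each moved piece clears one input bit and sets another (captures, castling, and promotions would flip extra bits and are ignored here). The helper names `full_hidden` and `update_hidden` are hypothetical.

```python
def full_hidden(x, weights):
    """Compute h from scratch by adding the weight rows where x is 1."""
    width = len(weights[0])
    return [sum(weights[i][j] for i, bit in enumerate(x) if bit) for j in range(width)]


def update_hidden(h, weights, removed_bits, added_bits):
    """Return the new h after a few input bits flip (one add or subtract per bit)."""
    new_h = list(h)
    for j in range(len(new_h)):
        for i in removed_bits:
            new_h[j] -= weights[i][j]   # cancel the square a piece left
        for i in added_bits:
            new_h[j] += weights[i][j]   # add the square a piece arrived on
    return new_h


weights = [[1, 2, 3], [4, 5, 6], [7, 8, 9], [1, 0, 1], [0, 2, 0], [3, 3, 3]]
old_x = [1, 0, 0, 1, 0, 1]
new_x = [0, 1, 0, 0, 1, 1]   # bit 0 moved to bit 1, bit 3 moved to bit 4

h = full_hidden(old_x, weights)
updated = update_hidden(h, weights, removed_bits=[0, 3], added_bits=[1, 4])
assert updated == full_hidden(new_x, weights)
print(updated)  # [7, 10, 9]
```

Two moves flip four bits, so each hidden value costs four additions or subtractions, regardless of how many pieces are on the board.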

Again, assuming that I can perform one mathematical operation in 3 seconds and one memory operation in 1 second, using this updating approach, I would be able to evaluate one chess position in 57,341 seconds or 16 hours.

This means that I could complete one full game of chess in 16 hours x 60 evaluations = 40 days.

Clearly, more optimizations are still required, but things are looking better.

In fact, we are starting to get into the range where I could do a David Blaine-style stunt, where I spend 40 days in a jail cell computing chess moves for a one-game extended exhibition.

I think I'd prefer to continue optimizing my algorithm instead, but I'd likely have a price if a very generous sponsor came along. After all, it would be a pretty fascinating human experiment.

Still, tomorrow, I'll continue to look for ways to improve my computation time.

Hopefully, in the next two days, I can finish all the theoretical planning, have a clear plan in mind, and begin the computer work necessary to generate the actual algorithm.

Yesterday, through some further optimizations, I was able to decrease the run time of my chess evaluation algorithm to 16 hours per evaluation, thus requiring about 40 days for an entire game.

While I'm excited about where this is headed, there's a problem: Even if this algorithm can be executed in a reasonable time, learning it would be nearly impossible, requiring that I pre-memorize about 1.6 million algorithm parameters (i.e. random numbers) before I even complete my first evaluation.

That's just too many parameters to try to memorize.

The good news is that it's probably not necessary to have so many parameters. In fact, the self-driving car algorithm that I built during May, which was based on NVIDIA's Autopilot model, only required 250,000 parameters.

With this in mind, I suspect that a good chess algorithm only requires about 20,000-25,000 parameters, an order of magnitude fewer than the self-driving car, given that a self-driving car needs to make sense of much less structured and much less contained data.

Assuming this is sufficient, I'm completely prepared to pre-memorize 20,000 parameters.

To put this in perspective, in October 2015, Suresh Kumar Sharma memorized and recited 70,030 digits of the number pi.

Thus, memorizing 20,000 random numbers is fully in the possible human range. (I will be truncating the parameters, which span from values of 0 to 1, at two digits, which should maintain the integrity of the function, while also capping the number of digits I need to memorize to 40,000).

Reducing the required number of parameters

In my algorithm from yesterday, most of the parameters came from the multiplication of the 773 digits of bitboard representation with the 2048 rows of the hidden vector h.

Thus, in order to reduce the number of necessary parameters, I can either condense bitboard representation, or choose a smaller width for the hidden layer.

At first, my instinct was to condense bitboard representation down to 37 digits, where the positions in the vector corresponded to particular chess pieces and the numbers at each of these spots corresponded to the particular squares on the board. For example, the first spot in the vector could correspond to the White king, and the value in this spot could span from 1 to 64.

I think an idea like this is worth experimenting with (in the future), but my instinct is that this particular representation is creating too many dependencies/correlations between variables, resulting in a much less accurate evaluation function.

Thus, my best option is to reduce the width of the hidden layer.

I've been using 2048 as the width of the hidden layer, which I realized from the beginning is quite large, but I tried to carry it through for as long as possible as a way to force myself to find other large optimizations.

I hypothesize I can create a chess algorithm that is good enough with two hidden layers of width 16, which would require that I memorize 12,640 parameters.
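The parameter counts can be checked with a short sketch. It assumes fully connected layers with no bias terms, which is what the 12,640 figure implies; the function name `parameter_count` is hypothetical.

```python
def parameter_count(layer_sizes):
    """Number of weights in a fully connected network without bias terms."""
    return sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))


print(parameter_count([773, 2048, 1]))    # 1585152 (about 1.6 million)
print(parameter_count([773, 16, 16, 1]))  # 12640
```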

Of course, I need to practically validate this hypothesis by creating and running the algorithm, but, for now, let's assume this algorithm will work. Even if it works, it may not be time effective, in which case it's not worth actually building.

So, let's validate this first...

The new hypothesized algorithm

Unlike the algorithm from the past two days, my hypothesized algorithm has not one, but two hidden layers, which should provide our model with one extra level of abstraction to work with.

This algorithm takes in the 773 digits of the bitboard representation, converts these 773 digits into 16 digits (tuned by 12,368 parameters), converts these 16 digits into another set of 16 digits (tuned by 256 parameters), and outputs an evaluation (tuned by 16 parameters).

This algorithm would require (36 x 16) + (16 x 16) + (15 x 16) + 16 + 15 = 1,103 math operations, (36 x 16) + (16 x 16) + 16 = 848 memory read operations, and 16 + 16 = 32 memory write operations.

Thus, still assuming a 3-second execution time per math operation and a 1-second execution time per memory operation, one evaluation would require (3 x 1,103) + 880 = 4,189 seconds = 1.2 hours to execute.

Of course, as explained yesterday, most evaluations would only be updates on previous evaluations. Using this new algorithm, an update evaluation would require (4 x 16) + (16 x 16) + (15 x 16) + (16 + 15) = 591 math operations, 16 + (4 x 16) + 16 + (16 x 16) + 16 = 368 memory read operations, and 16 + 16 = 32 memory write operations.

Therefore, an update evaluation would require (3 x 591) + 400 = 2,173 seconds = 36 minutes to execute.

So, a full game can be played in 36 minutes x 60 evaluations = 36 hours = 1.5 days.
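The tallies above can be reproduced with a short sketch. The operation counts are copied from the formulas in this post, and the 3-second and 1-second costs are the same assumptions as before; the function name `seconds` is hypothetical.

```python
def seconds(math_ops, memory_ops, math_cost=3, memory_cost=1):
    """Time for one evaluation under the assumed per-operation costs."""
    return math_ops * math_cost + memory_ops * memory_cost


# Full evaluation (figures as tallied above).
full_math = 36 * 16 + 16 * 16 + 15 * 16 + 16 + 15               # 1103
full_memory = (36 * 16 + 16 * 16 + 16) + (16 + 16)               # 848 reads + 32 writes

# Update evaluation: only four input bits change.
update_math = 4 * 16 + 16 * 16 + 15 * 16 + (16 + 15)             # 591
update_memory = (16 + 4 * 16 + 16 + 16 * 16 + 16) + (16 + 16)    # 368 reads + 32 writes

full_seconds = seconds(full_math, full_memory)
update_seconds = seconds(update_math, update_memory)
game_hours = update_seconds * 60 / 3600.0

print(full_seconds, update_seconds, round(game_hours, 1))  # 4189 2173 36.2
```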

This is still a little long for complete use during a standard chess match, but I could imagine a chess grandmaster using one or two evaluations of this type during a game to calculate a particularly challenging position.

This new algorithm has an execution time of 1.5 days per game, which is almost acceptable, and requires memorizing 12,640 parameters, which is very much in the human range.

In other words, with a few more optimizations, this algorithm is actually playable, assuming that the structure has enough built-in connections to properly evaluate chess positions.

If it does, I might have a chance. If not, I'm likely in trouble.

Time to start testing this assumption...

For the past four days, I've been working on a new way to master chess: Constructing and learning a chess algorithm that can be mentally executed (like a computer) to numerically evaluate chess positions.

I still have a lot more work to do on this and a lot more to share.

But, today, I took a break from this work to play some normal games of chess on Chess.com. After all, the better I can evaluate positions without the algorithm, the more effective I will be at using it.

Despite my break, I'm fully committed to my algorithmic approach to chess mastery, as it is the clearest path to rapid chess improvement.

Nevertheless, in the past few days, some of my friends have questioned whether or not I'm actually "learning chess". And in the traditional sense, I'm not.

But, that's only in the traditional sense.

The goal, at the end of the day, is to play a competitive game of chess. The path there shouldn't and doesn't matter.

Just because everyone else takes one particular path doesn't mean that this path is the only path or the best path. Sometimes, it's worth questioning why the standard path is standard and if it's the only way to a particular destination.

Of course, it's still unclear whether or not my algorithmic approach will work, but it's definitely the path most worth exploring.

Tomorrow, I'll continue working on and writing about this new approach to learning chess. I'm excited to see how far I can take it...

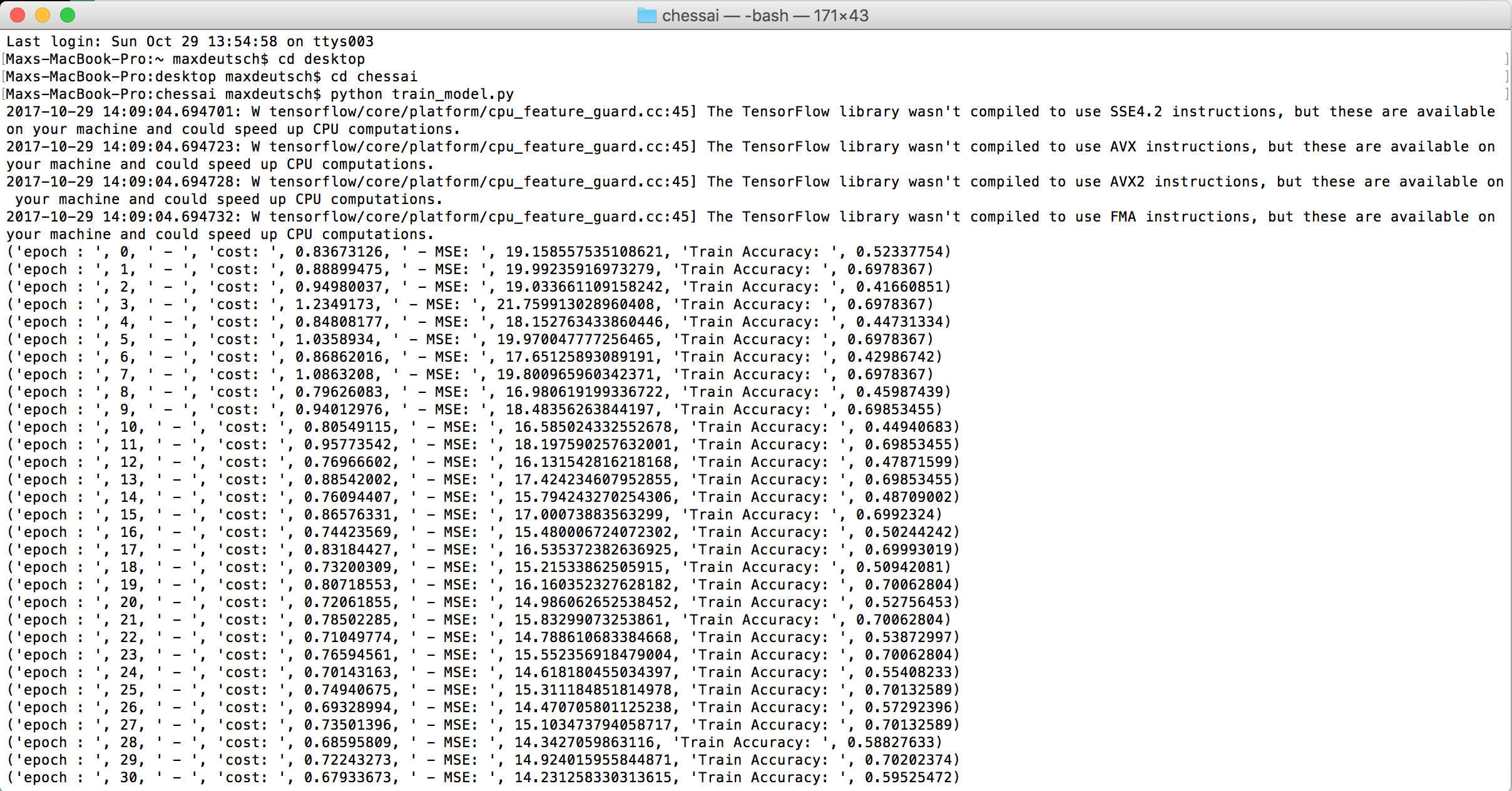

Today, based on analysis from the past few days, I started building my chess algorithm. The hope is that I can build an algorithm that is both effective (i.e. plays at a grandmaster level), but also learnable, so that I can execute it in my brain.

As I started working, I realized very quickly that I first need to work out exactly how I plan to perform all of my mental calculations. This mental process will inform how I structure the data in my deep learning model.

Therefore, I've used this post as a way to think through and document exactly this process. In particular, I'm going to walk through how I plan to mentally convert any given chessboard into a numerical evaluation of the position.

The mental mechanics of my algorithm

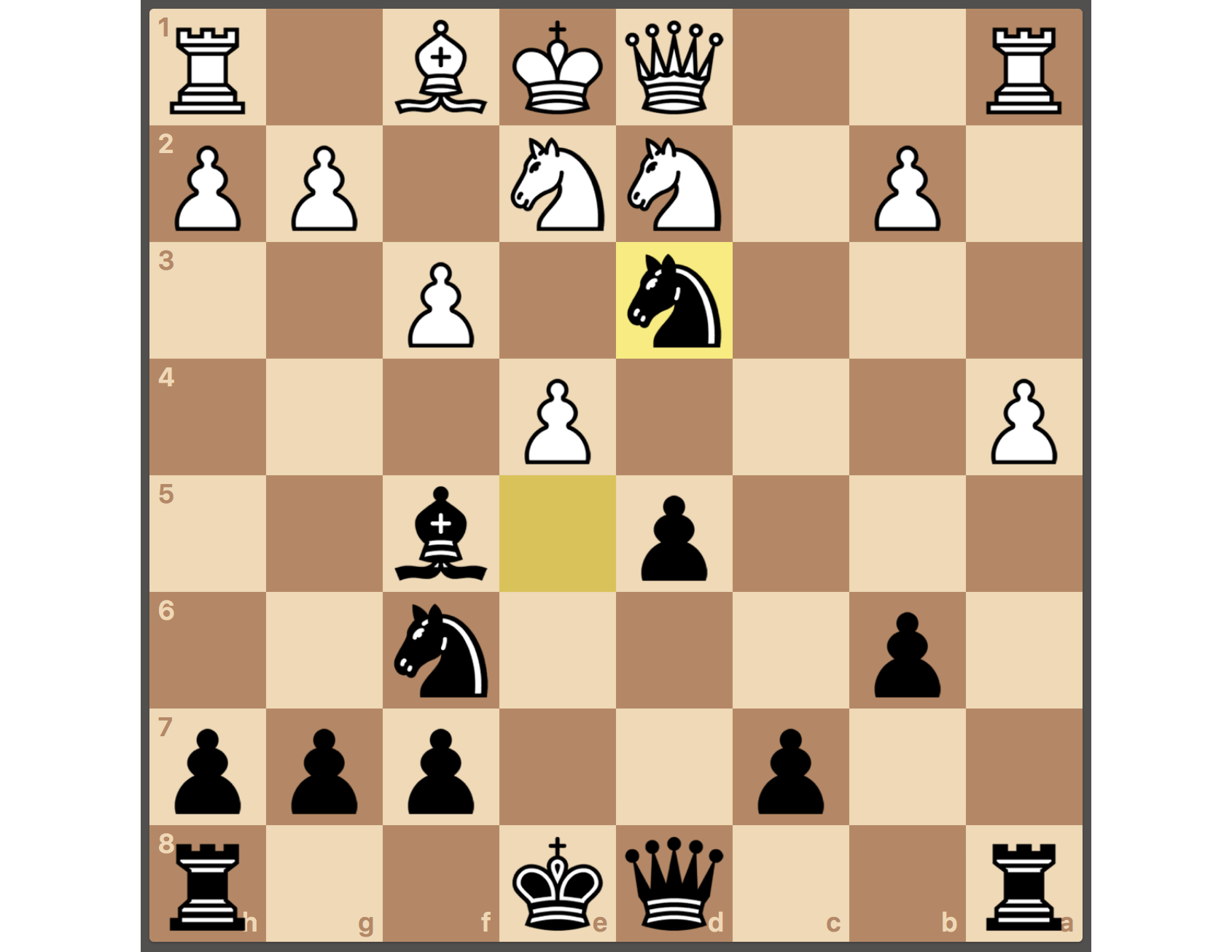

First, I look down at the board:

Starting in the a column, I find the first piece. In this case, it's on a1, and is a Rook. This Rook has a corresponding algorithmic parameter (i.e. a number between -1 and 1, rounded to two places after the decimal point) that I add to the running total of the first sub-calculation. Since this is the first piece on the board, the Rook's value is added to zero.

The algorithmic parameter for the Rook can be found in my memory, using the following mental structure:

In my memory, I have eight mind palaces that correspond to each of the lettered columns from a to h. A mind palace, as explained during November's card memorization challenge, is a fixed, visualizable location to which I can attach memories or information. For example, one such location could be my childhood house.

In each mind palace, there are nine rooms, one corresponding to each row from 1 to 8, and an extra room to store the data associated with castling rights. For example, one such room could be my bedroom in my childhood house.

In the first eight rooms, there are six landmarks. For example, one such landmark could be the desk in my bedroom in my childhood house. In the ninth room, there are only four landmarks.

Attached to each landmark are two 2-digit numbers that represent the relevant algorithmic parameters.

The first three landmarks correspond to the White pieces and the second three landmarks correspond to the Black pieces. The first landmark (of each set of three) is used for the algorithm parameters associated with the King and Queen, the second landmark is used for the Rook and Bishop, and the third landmark is used for the Knight and Pawns.

So, when looking at the board above, I start by mentally traveling to the first mind palace (column a), to the first room (row 1), to the second landmark (for the White Rook and Bishop), and to the first 2-digit number (for the White Rook).

I take this 2-digit number and add it to the running total.

Next, I move up the column until I hit the next piece. In this case, there is a White pawn on a2. So, staying in the a mind palace, I mentally navigate to the third landmark in the second room, and select the second 2-digit number, which I then add to the running total.

I next move on to the Black pawn on a6, and so on, until I've worked my way through the entire board. Then, I enter the ninth room, which provides the 2-digit algorithmic parameters associated with castling rights.

At this point, I take the summed total and store it in my separate 16-landmark mind palace, which holds the values for the first internal output (i.e. the values for the h vector of the first hidden layer).

This completes the first pass of the chessboard, outputting the first of 16 values for h. Thus, I still need to complete fifteen more passes, which repeat the process, but each using a completely new set of mind palaces.

In other words, I actually need 16 distinct mind universes, in which each universe has eight mind palaces of eight rooms of six landmarks and one room of four landmarks.
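The lookup scheme described above can be written down as a small addressing function. This is only a sketch of the piece lookups (the ninth, castling room is left out), and the names `LANDMARKS` and `locate` are hypothetical.

```python
# Piece letter -> (landmark within its color's set of three, slot on that landmark).
LANDMARKS = {
    "K": (1, 1), "Q": (1, 2),
    "R": (2, 1), "B": (2, 2),
    "N": (3, 1), "P": (3, 2),
}


def locate(universe, square, piece, is_white):
    """Return (universe, palace, room, landmark, slot) for one piece on one square."""
    column, row = square[0], int(square[1])
    landmark, slot = LANDMARKS[piece]
    if not is_white:
        landmark += 3  # landmarks 4-6 hold the Black pieces
    return (universe, column, row, landmark, slot)


print(locate(1, "a1", "R", True))   # (1, 'a', 1, 2, 1)
print(locate(1, "a2", "P", True))   # (1, 'a', 2, 3, 2)
print(locate(1, "a6", "P", False))  # (1, 'a', 6, 6, 2)

# Piece parameters per universe: 8 palaces x 8 rooms x 6 landmarks x 2 numbers.
print(8 * 8 * 6 * 2)  # 768
```

The first two lookups match the walk-through above: the White Rook on a1 sits on the second landmark, first number, and the White pawn on a2 sits on the third landmark, second number.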

After completing the process 16 times, and storing each outputted value in the first hidden layer's mind palace, I can move on to the second hidden layer, converting the first hidden vector (h1) into the second hidden vector (h2) by passing the values of h1 through a matrix of 256 algorithmic parameters.

These parameters are stored in a single mind palace of 16 rooms. Each room has eight landmarks and each landmark holds two 2-digit parameters.

Unlike the conversion from the chessboard to h1, for the conversion of h1 to h2, I almost certainly need to use all 256 parameters in a row (unless I implement some kind of squashing function between h1 and h2, reducing some of the h1 values to zero... I'll explore this option later).

Thus, I start by taking the first value of h1 and multiplying it (via mental math cross multiplication) by the first value in the first room of the h2 mind palace. Then, I take the second value of h1, multiply it by the second value in the first room, and add the result to the previous result. I continue in this way until I finish multiplying and adding all the terms in the first room, at which point I store the total as the first value of h2.

I proceed with the rest of the rooms, until all the values of h2 are computed.

Finally, I need to convert h2 to a single number that represents the numerical evaluation of the given chess position.

To do this, I access one final room in the h2 mind palace, which has eight landmarks each holding two 2-digit numbers. I multiply the first number in this room with the first value of h2, the second number with the second value of h2, and so on, adding the results along the way.

In the end, I?m left with a single sum, which should closely approximate the true evaluation of the chess position.

If this number is greater than the determined threshold value, then I should play the move corresponding to this calculation. If not, then I need to start all over with a different potential move in mind.
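Putting the whole mental procedure together, here is a minimal sketch of one evaluation with two hidden layers, which I'll call h1 and h2 here. The weights are toy integers, the widths are shrunk from 16 to 2, and no squashing function is applied between layers (since that choice hasn't been made yet). The name `evaluate_position` is hypothetical.

```python
def evaluate_position(active_bits, w1, w2, w3):
    """Evaluate a position given the indices of the 1s in its bitboard."""
    width = len(w2[0])
    # First hidden layer: add up the memorized parameters for each active bit.
    h1 = [sum(w1[i][j] for i in active_bits) for j in range(width)]
    # Second hidden layer: each "room" of w2 is multiplied and added against h1.
    h2 = [sum(h1[k] * room[k] for k in range(width)) for room in w2]
    # Output: one final multiply-and-add pass over h2.
    return sum(h2[r] * w3[r] for r in range(len(w3)))


w1 = [[1, 0], [0, 1], [2, 2], [1, -1]]  # 4 input bits x hidden width 2
w2 = [[1, 1], [1, -1]]                  # 2 rooms of 2 parameters
w3 = [2, 1]                             # final room

score = evaluate_position([0, 2], w1, w2, w3)
threshold = 10
print(score, score > threshold)  # 11 True
```

Here h1 is [3, 2], h2 is [5, 1], and the final sum is 11, which clears the made-up threshold of 10, so the move under consideration would be played.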

Some notes:

- I still need to work out how I plan to store negative numbers in my visual memory.

- Currently, I've only created one mind universe, so, assuming I can computationally validate my algorithmic approach, I'll need to create 15 more universes. I'll also need to create a higher-level naming scheme or mnemonic system for the universes that I can use to keep them straight in my brain.

- There is a chance that I will need more layers, fewer layers, or different-sized layers in my deep learning model. In any of these cases, I'll need to repeat or remove the procedures, as described above, accordingly.

With the details of my mental evaluation algorithm worked out, I have everything I need to begin the computational part of the process.

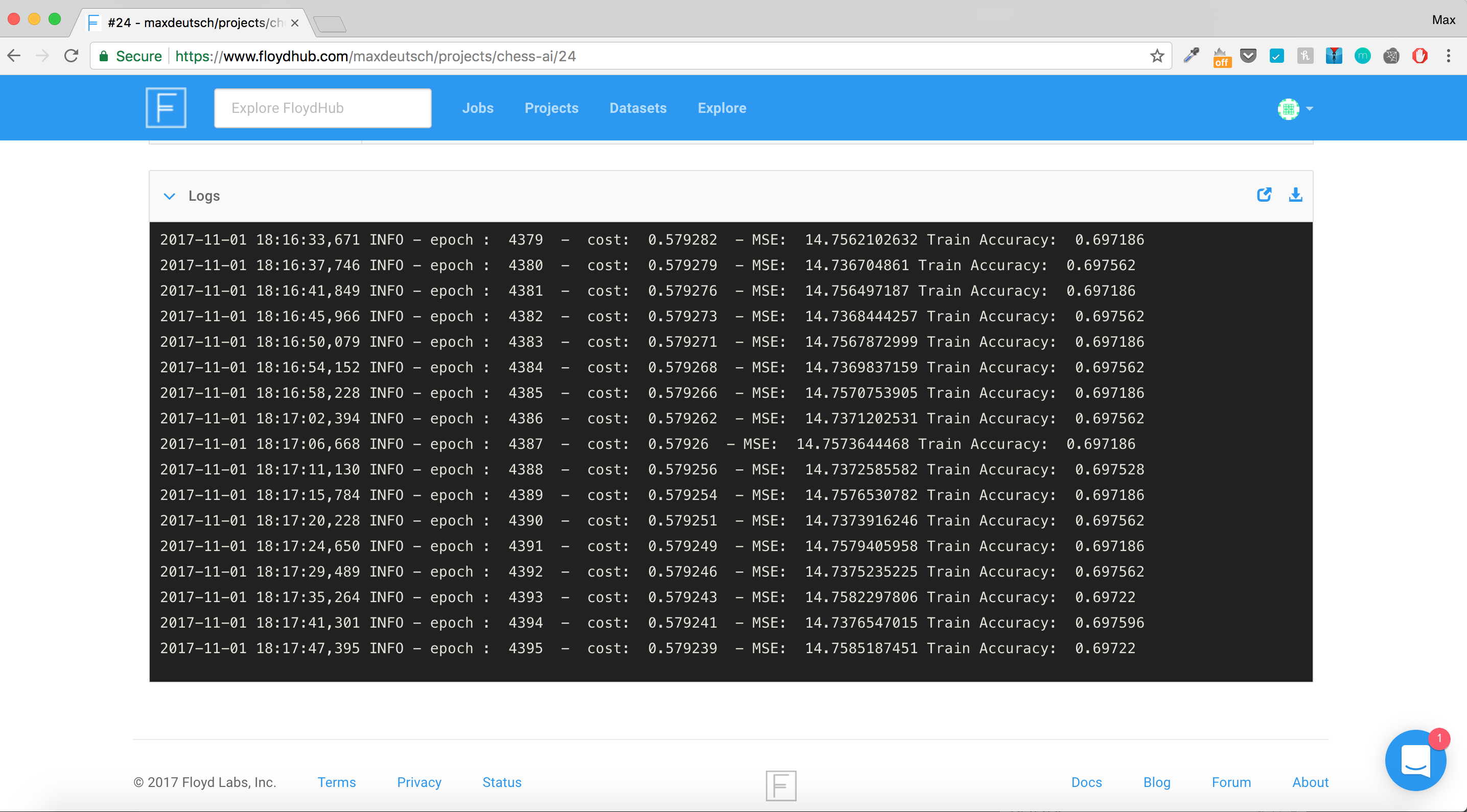

Today, I started writing a few lines of code, which will be used to generate my chess algorithm. While doing so, I started daydreaming about how this algorithmic approach could change the game of chess if it actually works.

In particular, I tried to answer the question: "If this works, what's the ideal outcome?"

There are two parts to this answer...

1. Execution speed

The first part has to do with the execution speed of this method, which I believe can be reduced to less than 10 minutes per evaluation with the appropriate practice.

As a comparison, Alex Mullen, the #1-rated memory athlete in the world, memorized 520 random digits in five minutes. By contrast, one full chess evaluation requires manipulating 816 digits if all the pieces are on the board and 560 digits if half the pieces are on the board.

While these tasks aren't exactly the same, in my experience, writing numbers to memory takes just about the same time as reading numbers from memory and subsequently adding them.

To be conservative though, I've padded Alex's five-minute time, predicting that an expert practitioner at mental chess calculations could execute one chess evaluation in 10 minutes.

At 60 evaluations per game (which is what I predict for myself), an entire game could be completed in 10 hours, which, at least in my eyes, is completely reasonable. Many of the games in this World Chess Championship last for six, seven, or eight hours.

Of course, if a more experienced player can more intelligently implement the algorithm, I suspect she can cut down the number of evaluations per game to around 30, shortening the game to a fairly standard five hours for a classical game.

2. Widespread adoption

I'm not sure if this computational approach to chess will ever catch on as the exclusive strategy of elite players (although, I guess it's possible), but I can imagine elite players augmenting their games with the occasional mental algorithmic calculation.

In fact, I wonder if a strong player (2200-2600), who augments her game with this approach, could become a serious threat to the top players in the world.

I'd like to think that, if this works, it'll cause a bit of a stir in the chess world.

It might seem that this approach tarnishes the sanctity of the game, but chess players have been using machine computation for years as part of their training approach. (Chess players use computers to work out the best theoretical lines in certain positions, and then memorize these lines in a rote or intuitive fashion).

Anyway, if this approach to chess does catch on, I might as well give it a catchy name...

At first, I was thinking about something boring like "Human Computational Chess", but I'm just going to commit to the more self-serving approach:

Introducing Max Chess: A style of chess play that uses a combination of intuitive moves and mental algorithmic computation. These mental computations are known as Max Calculations.

It's probably cooler if someone else names a particular technique after you, but I think the cool ship has already sailed, so I'm just going to go with it.

Now, assuming Max Chess even works, let's see if it catches on...

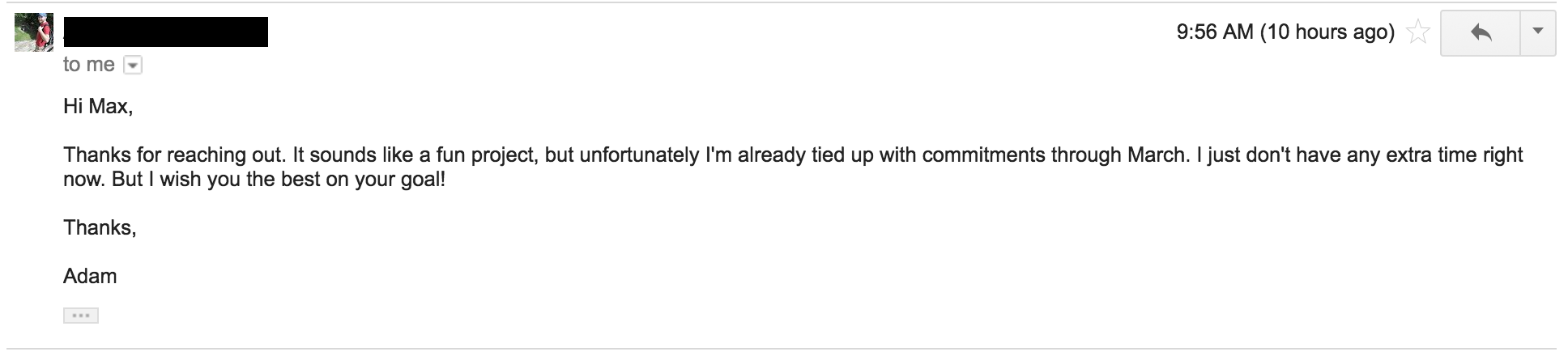

Today, to accelerate my progress, I thought it might be a good idea to solicit some outside help from someone with a bit more chess AI experience.

So, I shot out a bunch of emails this morning, and surprisingly, received back many replies very quickly. Unfortunately, though, all the replies took the same form...

In other words, no one was interested in collaborating on this project, or at least no one was interested in collaborating on this project starting immediately and at full intensity.

This is understandable.

If I knew that I was going down this algorithmic path at the beginning of the month, and really wanted a collaborator, I should have planned much further in advance.

But, in a way, I'm glad I was rejected. Because now, I have no excuse but to figure out everything on my own.

Ultimately, the purpose of this project is to use time constraints and ambitious goals as motivation to level up my skills, so that's exactly what I'll do. Since there's going to be a little bit of an extra learning curve (given that I've never played around with chess data or chess AI previously), this challenge is definitely going to come down to the wire.

But, this time pressure is what makes the project exciting, so here I go... Time to become a master of chess AI.

Side note: To completely negate everything I've said above, if you do think you have the relevant experience and are interested in helping out, leave a comment and we'll figure something out. I'm always happy to learn from someone else when that's an option (and, of course, always happy to figure it out on my own when it's not).

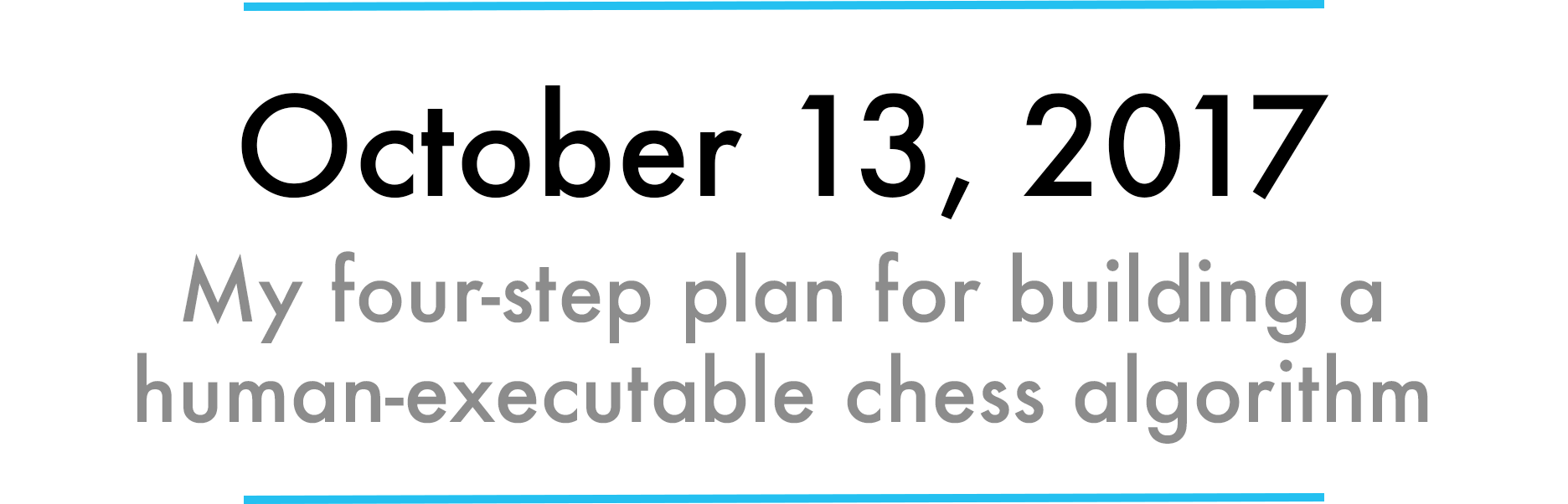

Yesterday, after a failed attempt to recruit some help, I realized that I need to proceed on my own. In particular, I need to figure out how to actually produce my (currently) mythical, only theoretical evaluation algorithm via some sort of computer wizardry.

Well, actually, it's not wizardry. It just seems that way because I haven't formalized a tangible approach yet. So, let's do that in this post.

There are four steps to creating the ultimate Max Chess algorithm:

Step 1: Creating the dataset

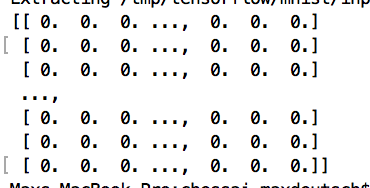

In order to train a deep learning model (i.e. have my computer generate an evaluation algorithm), I need a large dataset from which my computer can learn. Specifically, this dataset needs to contain pairs of bitboard representations of chess positions as inputs and the numerical evaluations that correspond to these positions as outputs.

In other words, the dataset contains both the inputs and the outputs, and I?m asking my computer to best approximate the function that maps these inputs to outputs.

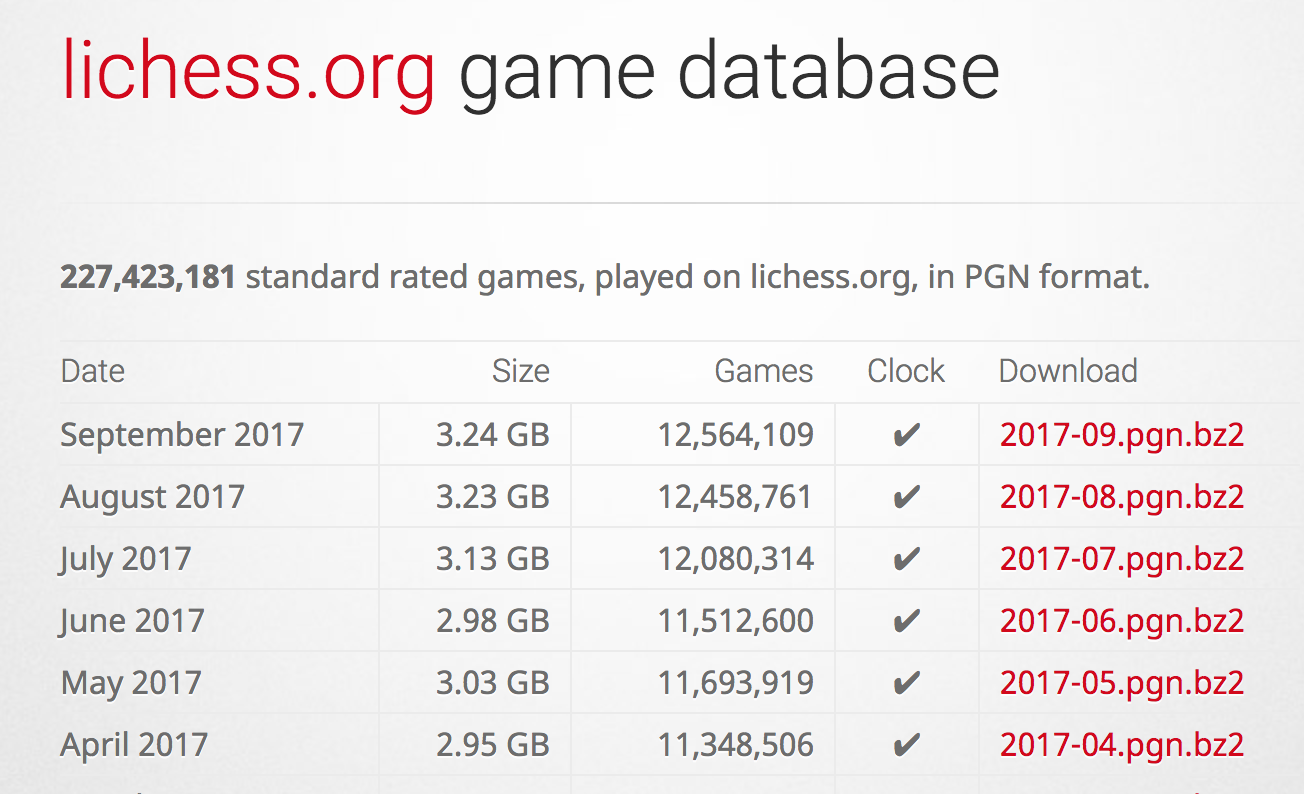

There seems to be plenty of chess games online that I can download, including 2,600 games that Magnus has played on the record.

The challenge for this part of the process will be A. Converting the data into my modified bitboard representation (so the data maps one-to-one with my mental process) and B. Generating evaluations for each of the bitboard representations using the open-source chess engine Stockfish (which has a chess rating of around 3100).
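As an illustration of part A, here is a minimal sketch that converts a position (given as a standard FEN string) into a 773-bit vector. The bit layout is an assumption for illustration only: 12 piece types x 64 squares, then four castling flags, then one side-to-move bit. My modified representation may order things differently, and the names `PIECE_ORDER` and `fen_to_bits` are hypothetical.

```python
PIECE_ORDER = "KQRBNPkqrbnp"  # assumed ordering: White pieces first, then Black


def fen_to_bits(fen):
    """Convert a FEN string into a list of 773 zeros and ones."""
    placement, side, castling = fen.split()[:3]
    bits = [0] * 773
    rank, file = 7, 0  # FEN lists rank 8 first, starting from the a-file
    for ch in placement:
        if ch == "/":
            rank -= 1
            file = 0
        elif ch.isdigit():
            file += int(ch)  # a digit means that many empty squares
        else:
            square = rank * 8 + file  # a1 = 0, h8 = 63
            bits[PIECE_ORDER.index(ch) * 64 + square] = 1
            file += 1
    for offset, flag in enumerate("KQkq"):
        if flag in castling:
            bits[768 + offset] = 1  # castling rights
    bits[772] = 1 if side == "w" else 0  # side to move
    return bits


start = "rnbqkbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR w KQkq - 0 1"
bits = fen_to_bits(start)
print(len(bits), sum(bits))  # 773 37
```

In the starting position, 32 pieces, four castling rights, and the side-to-move bit give 37 ones, which is the cap on active bits used in the earlier operation counts.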

Step 2: Creating the model

The next step is to create the deep learning model. Basically, this is the framework for the algorithm to be built around.

The model is meant to describe the form that a function (between inputs and outputs in the dataset) should take. However, before training the model, the specifics of the function are unknown. The model simply lays out the functional form.

Theoretically, this model shouldn't have to deviate too far from already well-studied deep learning models.

I should be able to implement the model in Tensorflow (Google's library for machine learning) without too much difficulty.

Step 3: Training the model on the dataset

Once the model is built, I need to feed it the entire dataset and let my computer go to work trying to generate the algorithm. This algorithm will map inputs to outputs within the framework of the model, and will be tuned with the algorithm parameters that I'll need to memorize.

The actual training process should be fairly hands off. The hard part is usually getting the model and the dataset to play nicely with each other, which is honestly where I spent 70% of my time during May's self-driving car challenge.

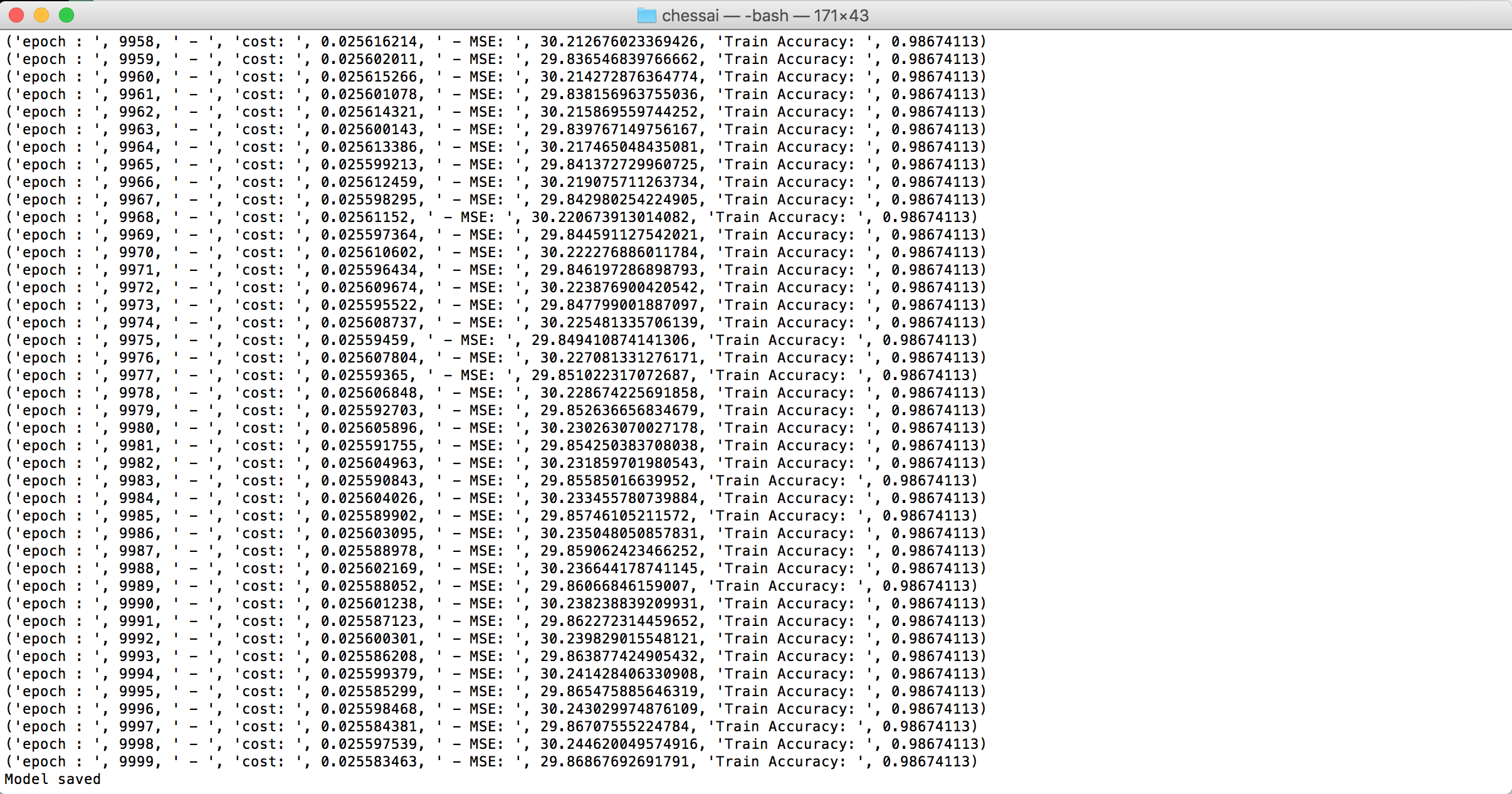

Step 4: Testing the model and iterating

Once the algorithm is generated, I should test to see at what level it can play chess.

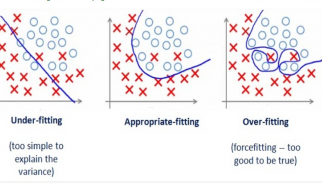

If the algorithm plays at a level far above Magnus, I can simplify and retrain my model, creating a more easily learned and executed evaluation algorithm.

If the algorithm plays at a level below Magnus, I'll need to create a more sophisticated model, within the limits of what I'll be able to learn and execute as a human.

During this step, I'll finally find out if Max Chess has any merit whatsoever.

Now that I have a clear plan laid out, I'll get started on Step 1 tomorrow.

Yesterday, I laid out my four-step plan for building a human-executable chess algorithm.

Today, I started working on the first step: Creating the dataset.

In particular, I need to take the large corpus of chess games in Portable Game Notation (PGN) and programmatically analyze them and convert them into the correct form, featuring a modified bitboard input and a numerical evaluation output.

Luckily, there's an amazing library on GitHub called Python Chess that makes this a lot easier.

As explained on GitHub, Python Chess is "a pure Python chess library with move generation and validation, PGN parsing and writing, Polyglot opening book reading, Gaviota tablebase probing, Syzygy tablebase probing and UCI engine communication".

The parts I'm particularly interested in are...

- PGN parsing, which allows my Python program to read any chess game in the PGN format.

- UCI (Universal Chess Interface) engine communication, which lets me directly interface with and leverage the power of the Stockfish chess engine for analysis purposes.

The documentation for Python Chess is also extremely good and filled with plenty of examples.

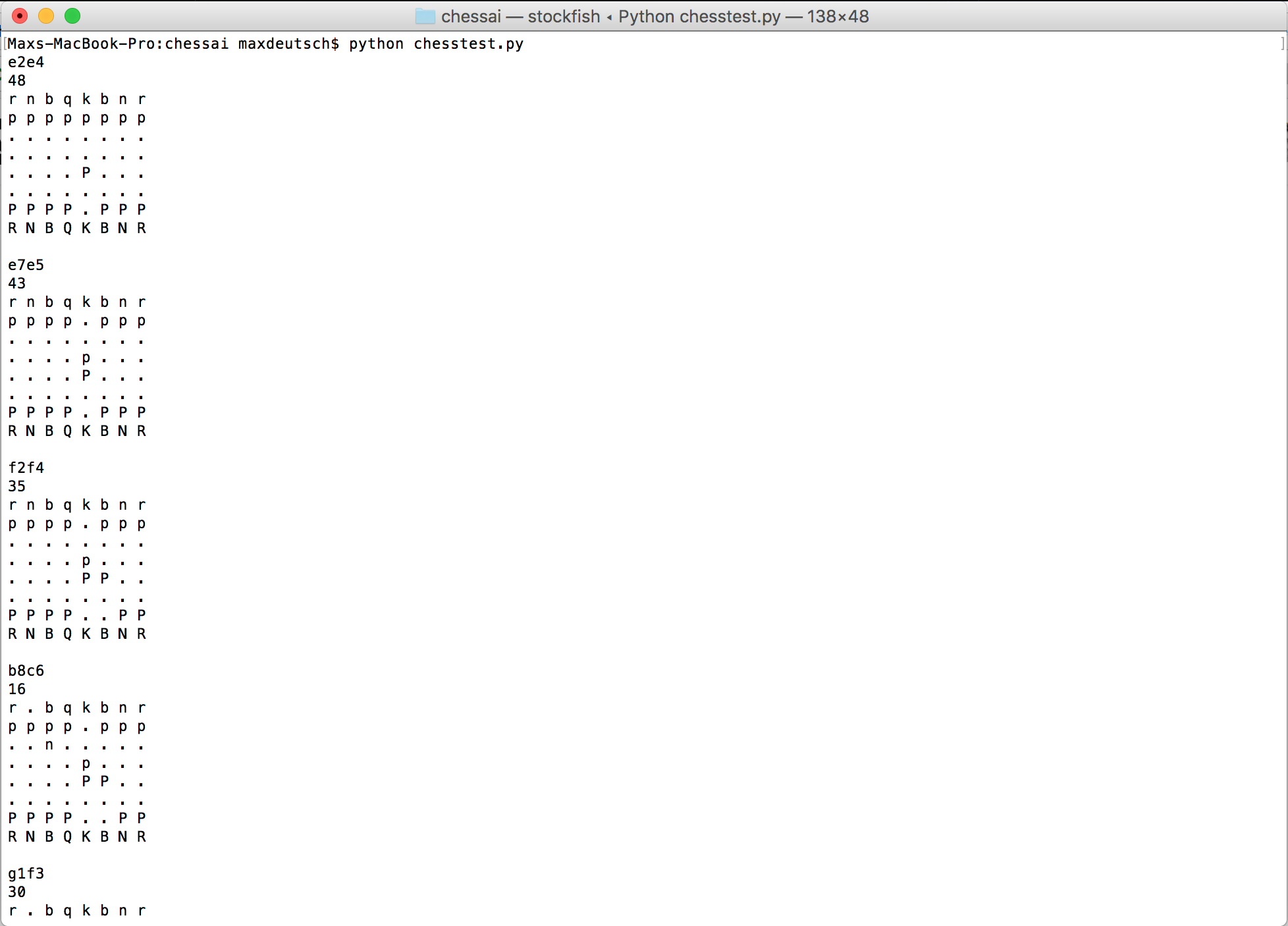

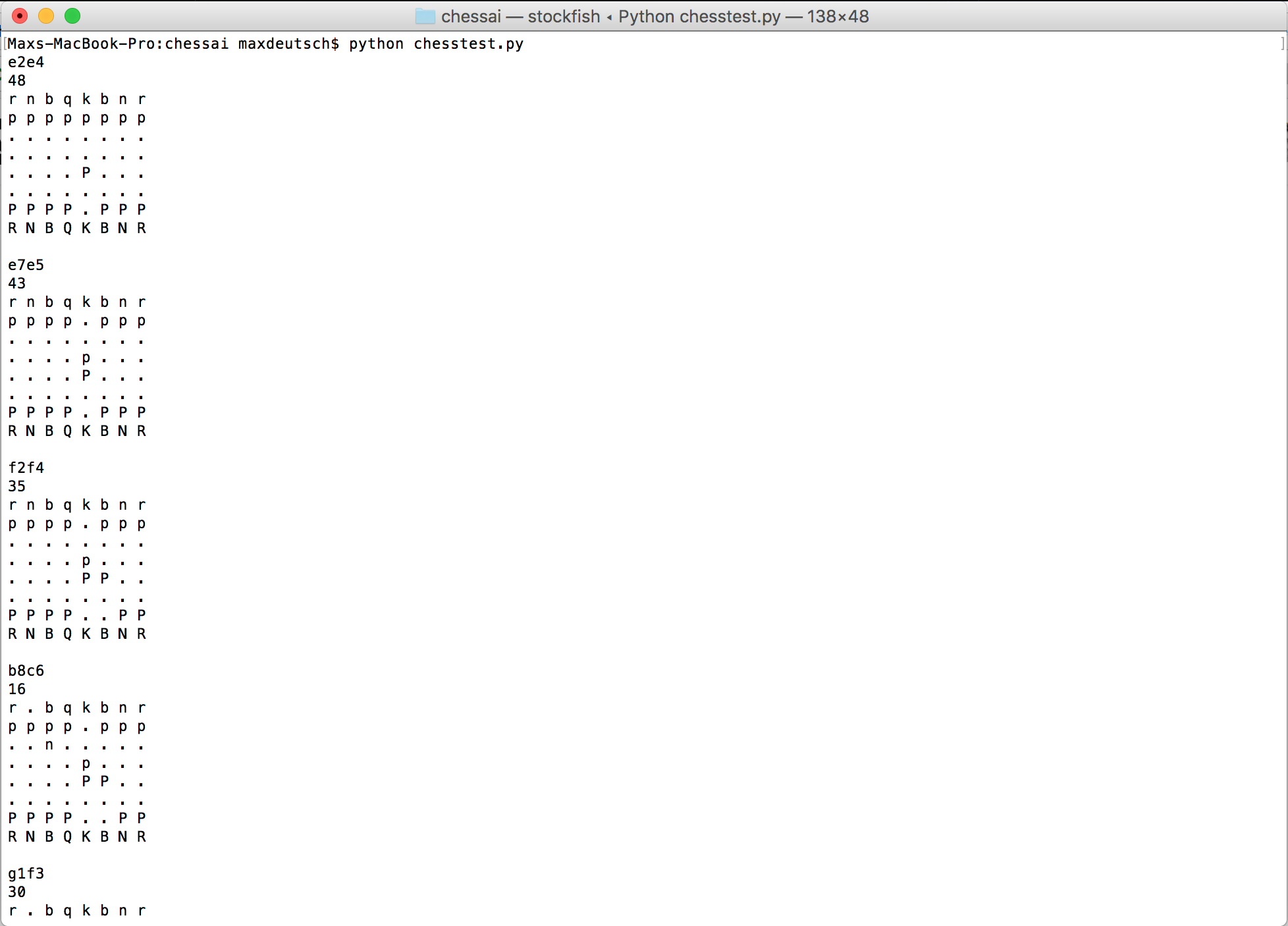

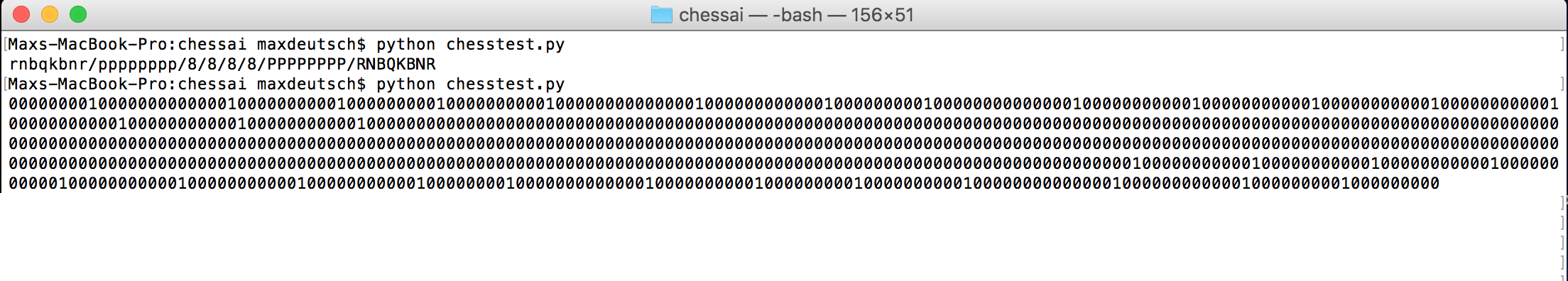

Today, using the Python Chess library, I was able to write a small program that can 1. Parse a PGN chess game, 2. Numerically evaluate each position, and 3. Print out each move, a visualization of the board after each move, and the evaluation (in centipawns) corresponding to each board position.

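```python
import chess
import chess.pgn
import chess.uci  # legacy UCI module from python-chess releases before 1.0

board = chess.Board()
pgn = open("data/game1.pgn")
game = chess.pgn.read_game(pgn)

# Start Stockfish and attach a handler that collects its analysis output.
engine = chess.uci.popen_engine("stockfish")
engine.uci()
info_handler = chess.uci.InfoHandler()
engine.info_handlers.append(info_handler)

for move in game.main_line():
    # Analyze the current position (before the move is played) for two seconds.
    engine.position(board)
    engine.go(movetime=2000)
    evaluation = info_handler.info["score"][1].cp  # centipawns, side to move

    # Flip the sign on Black's turn so the score is from White's point of view.
    if not board.turn:
        evaluation *= -1

    print(move)
    print(evaluation)
    board.push_uci(move.uci())
    print(board)
```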

Now, I just need to convert this data into the correct form, so it can be used by my deep learning model (that I've yet to build) for the purposes of training / generating the algorithm.

I'm making progress...

Today, I didn't have time to write any more chess code. So, for today's post, I figured I'd share the other part of my preparations: The actual games.

Of course, my approach this month has a mostly all-or-nothing flavor (either I can effectively execute a very strong chess algorithm in my brain, or I can't), but I'm still playing real chess games on the side to improve my ability to implement this algorithm.

In particular, I've been practicing 1. Picking the right moves to evaluate and 2. Playing the right moves without the algorithm when I'm 100% confident.

Recently though, I've also been practicing my attacking abilities. In other words, I've been trying to aggressively checkmate my opponent's King during the middle game (compared to my typical approach of exchanging pieces and trying to win in the end game).

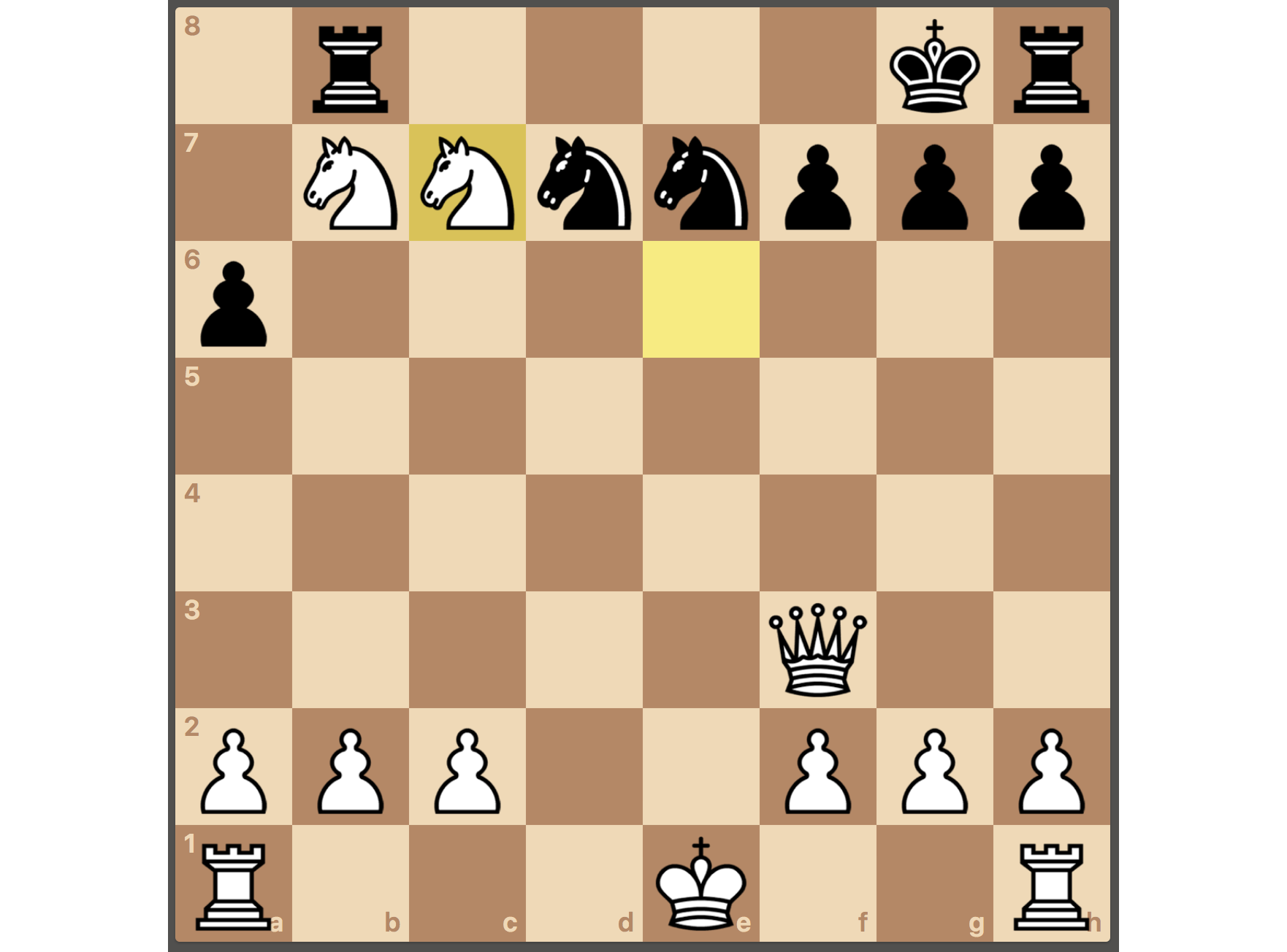

Here are three games from today or yesterday that demonstrate my newly aggressive approach. To watch the sped-up playback of the games, click on the picture of the chessboard of the game you want to watch and then click the play button.

Game 1: I play White and get Black's Queen on move 15.

Game 2: I play Black and checkmate White in 12 moves.

Game 3: I play White and checkmate Black in 26 moves.

These games aren't perfect. I still make a few major mistakes per game, and so do my opponents.

In fact, this is one of the biggest challenges with Chess.com: I'm only matched with real people of a similar skill level, which isn't optimally conducive to improving.

But, I'm still able to test out my new ideas in this environment, while relying on the algorithmic part of my approach for the larger-scale improvements.

Tomorrow, I should hopefully have some time to make more algorithmic progress...

I'm just about to get on a plane from San Francisco to New York, on which I'll have plenty of time to make progress on my chess algorithm.

Normally, I'd want to actually do the thing and then report back in my post for the day, but, since my flight gets in late, I'll instead share my plan for what I'm going to do beforehand...

Two days ago, I made a little chess program that can read an inputted chess game and output the numerical evaluation for each move.

Today, during the flight, I?m going to modify this program, so that, instead of outputting a numerical value, it either outputs ?good move? or ?bad move?.

In other words, I need to determine the threshold between good and bad, and then implement this thresholding into my program.

I will attempt to replicate the way that Chess.com implements their threshold between ?good? moves and ?inaccuracies?.

I?ve noticed that, in general, a move is considered to be an inaccuracy if it negatively impacts the evaluation of the game by more than 0.3 centipawns, but I?ve also noticed that this heuristic changes considerably depending on the absolute size of the evaluation (i.e. Inaccuracies are measured differently if the evaluation is +0.75 versus +15, for example).

So, I will need to go through my past Chess.com games, and try to reverse engineer how they make these calculations.

Once I do so, I can implement this thresholding into my program.

For now, as I wait for the plane, I've implemented the naive version of this thresholding, where inaccuracies are described by a fixed difference of 0.3.

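    import chess
    import chess.pgn
    import chess.uci  # legacy UCI interface from older python-chess releases

    THRESHOLD = 0.3  # in pawns (30 centipawns)

    board = chess.Board()
    pgn = open("data/rickymerritt1_vs_maxdeutsch_2017-10-14_analysis.pgn")
    test_game = chess.pgn.read_game(pgn)

    engine = chess.uci.popen_engine("stockfish")
    engine.uci()
    info_handler = chess.uci.InfoHandler()
    engine.info_handlers.append(info_handler)

    prev_eval = 0  # in pawns, from White's point of view

    for move in test_game.main_line():
        print(move)
        white_moved = board.turn  # True when White is the one making this move
        board.push_uci(move.uci())

        # Evaluate the position reached after the move
        engine.position(board)
        engine.go(movetime=2000)
        score = info_handler.info["score"][1]
        if score.cp is None:
            # The engine sees a forced mate, so there is no centipawn score
            print(board)
            continue

        # The score is in centipawns for the side to move: convert it to
        # pawns and flip the sign when it is Black's turn
        evaluation = score.cp / 100.0
        if not board.turn:
            evaluation *= -1

        # A move is bad if it costs the player who made it more than THRESHOLD
        if white_moved:
            drop = prev_eval - evaluation
        else:
            drop = evaluation - prev_eval
        print("bad move" if drop > THRESHOLD else "good move")

        prev_eval = evaluation
        print(board)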
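The fixed-threshold rule described above can also be written as a small pure function, which makes it easy to check without running an engine. This is only a sketch: the name `classify_move`, its parameters, and the sample evaluations are hypothetical, and the evaluations are assumed to be in pawns from White's point of view.

```python
def classify_move(prev_eval, new_eval, white_moved, threshold=0.3):
    """Label a move "good move" or "bad move" using a fixed threshold.

    prev_eval and new_eval are evaluations in pawns from White's point of
    view, before and after the move (an assumption of this sketch).
    """
    # How much the evaluation worsened for the player who made the move
    if white_moved:
        drop = prev_eval - new_eval
    else:
        drop = new_eval - prev_eval
    return "bad move" if drop > threshold else "good move"


# Hypothetical evaluations, in pawns
print(classify_move(0.20, 0.10, white_moved=True))   # White gives up 0.1
print(classify_move(0.10, 0.75, white_moved=False))  # Black gives up 0.65
```

If the evaluation-dependent thresholds can be worked out later, `threshold` could be computed from `prev_eval` instead of being a constant.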

Yesterday, on the plane, I had a really interesting idea: Could a room full of completely amateur chess players defeat the world's best chess player after less than one hour of collective training?

I think the answer is yes, and, in fact, they could use a distributed version of my human algorithmic approach (i.e. Max Chess) to do so.

In other words, rather than me learning and computing every single chess evaluation on my own, each amateur chess player could be in charge of a single mathematical operation, which they could each learn in a few minutes.

By computing mostly in parallel, the entire room of amateur players could, in theory, evaluate an entire chess board in a few minutes, letting them not only play at a high level, but also make moves in a reasonable amount of time.
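As a rough illustration of how the work might be divided, the sketch below splits a single weighted sum into one multiplication per "player", followed by rounds of pairwise additions that could also happen in parallel. The function names, the tiny weights, and the board features are all made up for illustration; the actual evaluation algorithm isn't defined yet at this point.

```python
def player_multiply(weight, value):
    # The one operation a single player is responsible for
    return weight * value


def pairwise_sum(values):
    """Add numbers in rounds. The additions within a round are independent,
    so a different pair of players could do each one at the same time."""
    values = list(values)
    rounds = 0
    while len(values) > 1:
        paired = [values[i] + values[i + 1]
                  for i in range(0, len(values) - 1, 2)]
        if len(values) % 2:  # the odd one out carries over to the next round
            paired.append(values[-1])
        values = paired
        rounds += 1
    return values[0], rounds


# Hypothetical weights and board features (not from a real model)
weights = [2, -1, 3, 1, -2, 4, 1, 1]
features = [1, 0, 1, 1, 0, 0, 1, 0]

products = [player_multiply(w, x) for w, x in zip(weights, features)]
total, rounds = pairwise_sum(products)
print(total, rounds)
```

With n products, the additions take roughly log2(n) rounds instead of n - 1 sequential steps, which is where the time saving would come from.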

There have been past attempts at Wisdom of the Crowds-style chess games, where thousands of chess players are pitted against a single grandmaster. In these games, each member of the crowd recommends a move and the most popular move is played, while the grandmaster plays as normal.

In the most famous of these games, Garry Kasparov, the grandmaster, defeated a crowd of over 50,000. In other words, the crowd wasn't so wise.

However, using the distributed algorithmic approach, the crowd would collectively play at the level of an incredibly powerful chess computer. In fact, unlike in my case, the evaluation algorithm can remain reasonably sophisticated, since the computations aren't restricted by the limits of a single human brain.

Using this approach, we (the amateur chess players of the world) could potentially stage the first crowd vs. grandmaster game where the crowd convincingly defeats a grandmaster, and in style.

To be clear, this doesn't mean that I'm giving up on my one-man-band approach. I'm still trying to do this completely on my own.

However, I do think this method would scale wonderfully to a larger crowd of people, and if done, the chess game could be played at a speed that may actually be interesting to outside spectators (whereas, in my game, I'll be staring into space for dozens of minutes at a time, mentally calculating).

Maybe, after I complete this month's challenge, I'll try to organize a crowd-style algorithmically-inspired chess match.

Today, I went into Manhattan, and, while I was there, I stopped by Bryant Park to play a few games against the chess hustlers.

I wanted to test out my chess skills in the wild, especially since I need more practice playing over an actual chess board.

Apparently, according to chess forums, etc., many players who practice exclusively online (on a digital board) struggle to play as effectively in the real world (on a physical board). This mostly has to do with how the boards are visualized:

A digital board looks like this:

While a physical board looks like this:

I'm definitely getting used to the digital board, so today's games in the park were a nice change.

I played three games against three different opponents, with 5 minutes on the clock for each. In all three cases, I was beaten handily. These guys were good.

After the games, now with extra motivation to improve my chess skills, I found a cafe and spent a few minutes working more on my chess algorithm.

In particular, I quickly wrote up the functions needed to convert the PGN chessboard representations into the desired bitboard representation.

    def convertLetterToNumber(letter):
        # Each piece gets a 12-bit one-hot code, in the order KQRBNPkqrbnp
        pieces = 'KQRBNPkqrbnp'
        if letter in pieces:
            index = pieces.index(letter)
            return '0' * index + '1' + '0' * (11 - index)
        # A digit means that many empty squares (12 zero bits per square)
        if letter in '12345678':
            return '0' * (12 * int(letter))
        # '/' only separates ranks, so it adds no bits
        if letter == '/':
            return ''

    def convertToBB(board):
        bitBoard = ''
        # Keep only the piece-placement field of the FEN string
        board = str(board.fen()).split(' ')[0]
        for letter in board:
            bitBoard = bitBoard + convertLetterToNumber(letter)
        return bitBoard

    print(convertToBB(board))
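As a quick sanity check on this encoding, here is a minimal standalone sketch that works directly on a FEN string, without the python-chess library. The function name `fen_to_one_hot` is hypothetical; the 12-bit ordering (KQRBNPkqrbnp) follows the conversion above. Every position should come out as 64 squares x 12 bits = 768 characters.

```python
PIECES = "KQRBNPkqrbnp"  # bit order of the 12-bit one-hot code


def fen_to_one_hot(fen):
    """Convert a FEN string to a 768-character string of 0s and 1s."""
    placement = fen.split(" ")[0]  # keep only the piece-placement field
    bits = []
    for char in placement:
        if char in PIECES:
            index = PIECES.index(char)
            bits.append("0" * index + "1" + "0" * (11 - index))
        elif char.isdigit():
            # A digit means that many consecutive empty squares
            bits.append("0" * 12 * int(char))
        # "/" separates ranks and contributes no bits
    return "".join(bits)


start = "rnbqkbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR w KQkq - 0 1"
encoded = fen_to_one_hot(start)
print(len(encoded))        # 768: 64 squares * 12 bits
print(encoded.count("1"))  # 32: one set bit per piece on the board
```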

While I'm making some progress on the algorithm, since I've been in New York, I haven't made quite as much progress as I hoped, only spending a few minutes here and there on it.

Let's see if I can set aside a reasonable chunk of time tomorrow to make some substantial progress...

Today, I turned a corner in my chess development: I'm finally starting to appreciate the beauty in the game, which is fueling my desire to improve my chess game in the traditional way, in addition to the algorithmic way.

Before starting this month's challenge, I was a bit concerned about studying chess. In particular, I imagined that learning chess was just memorizing predetermined sequences of moves, and was worried that, by simply converting chess to memorized patterns, the game would lose some of its interest to me.

In the past, while playing chess with friends, I've enjoyed my reliance on real-time cleverness and intuition, not pattern recognition. I feared I would lose this part of my game, as I learned more theory and began to play more like a well-trained computer.

Ironically, this fear came true, but not in the way that I expected. After all, my main training approach this month (my algorithmic approach) effectively removes all of the cleverness and intuition from the gameplay, and instead, treats chess like one big, boring computation.

This computational approach is still thrilling to me though, not because it makes the actual game more fun, but because it represents an entirely new method of playing chess.

Interestingly though, adding to my chess knowledge in the normal way hasn't reduced my intellectual pleasure in the game as I expected. In fact, it has increased it.

The more I see and understand, the more I can appreciate the beauty of particular chess lines or combinations of moves.

Sure, there are certain parts of my game that are now more mechanical, but this allows me to explore new intellectual curiosities and combinations at higher levels. It seems there is always a higher level to explore (especially since my chess rating is around 1200, while Magnus is at 2822 and computers are at around 3100).

The more normal chess I've learned this month, the more I'm drawn to pursuing the traditional approach. There is just something intellectually beautiful about the game and the potential to make ever-continuing progress, and I'd love to explore this world further.

With that said, this isn't a world that can fully be explored in one month, so I'm happily continuing with my primary focus on the algorithmic approach.

To be clear, I'm not pitting the algorithmic approach against the traditional approach. Instead, I'm saying: "Early in this month, I fell in love with the mathematical beauty of and the potential to break new ground with the algorithmic approach. Just today, I've finally found a similar beauty in the way that chess is traditionally played and learned, and also want to explore this further".

In other words, this month, I'm developing two new fascinations that I can enjoy, appreciate, and explore for the rest of my life.

This is the great thing about the M2M project: It has continually exposed me to new, personally-satisfying pursuits that I can continue to enjoy forever, even once the particular month ends.

Today, my brain suddenly identified traditional chess as one of these lifelong pursuits.

If I had this appreciation for traditional chess at the beginning of the month, I wonder if I would have so easily and quickly resorted to the algorithmic approach, or if I would have been held back by my romanticism for the "normal way".

Luckily, this wasn't the case, and now I get to explore both in parallel.

Today, I finished writing the small Python script that converts chess games downloaded from the internet into properly formatted data needed to train my machine learning model.

Thus, today, it was time to start building out the machine learning model itself.

Rather than starting from scratch, I looked for an already coded-up model on GitHub. In particular, I needed to find a model that analogizes reasonably well to chess.

I didn't have to look very hard: The machine learning version of "Hello World" is called MNIST, and it works perfectly for my purposes.

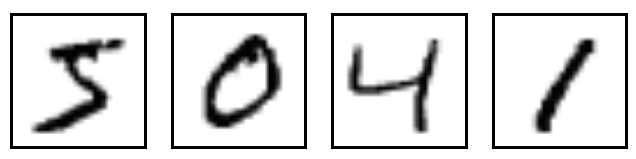

MNIST is a dataset that consists of 28 x 28px images of handwritten digits like these:

The dataset also includes a label for each image, one of ten possible digits, indicating which digit is represented in that image (i.e. the labels for the above images would be 5, 0, 4, 1).

The objective is to craft a model that, given a collection of 28 x 28 = 784 values, can accurately predict the correct numerical digit.

In a very similar way, the objective of my chess model, given a collection of 8 x 8 = 64 values (where each value is represented using 12-digit one-hot encoding), is to accurately predict whether the chess move is a good move or a bad move.
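To make the analogy concrete, the sketch below shows the shape of such a model in plain Python: 64 squares x 12 bits = 768 inputs, and two outputs for "good move" and "bad move". It assumes the same linear-plus-softmax form as the simplest MNIST models, purely for illustration. The weights are untrained zeros and the input is an empty board, so the output is just an even split.

```python
import math

NUM_INPUTS = 64 * 12  # one 12-bit one-hot code per square
NUM_CLASSES = 2       # index 0 = "good move", index 1 = "bad move" (arbitrary)


def softmax(logits):
    # Subtract the max for numerical stability before exponentiating
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(x, W, b):
    """Compute softmax(x . W + b) for a single input vector x."""
    logits = [
        sum(x[i] * W[i][j] for i in range(len(x))) + b[j]
        for j in range(len(b))
    ]
    return softmax(logits)


# Untrained parameters: every weight and bias starts at zero
W = [[0.0] * NUM_CLASSES for _ in range(NUM_INPUTS)]
b = [0.0] * NUM_CLASSES

x = [0.0] * NUM_INPUTS  # placeholder input (an empty board)
print(predict(x, W, b))  # [0.5, 0.5]
```

Training would then adjust `W` and `b` so that the larger probability lands on the correct label for each example.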

So, all I need to do is download some example code from GitHub, modify it for my purposes, and let it run. Of course, there are still complexities with this approach (i.e. getting the data in the right format, optimizing the model for my purposes, etc.), but I should be able to use already-existing code as a solid foundation.

Here's the code I found:

    from __future__ import absolute_import
    from __future__ import division
    from __future__ import print_function

    import argparse
    import sys

    from tensorflow.examples.tutorials.mnist import input_data

    import tensorflow as tf

    FLAGS = None


    def main(_):
        # Import data
        mnist = input_data.read_data_sets(FLAGS.data_dir, one_hot=True)

        # Create the model
        x = tf.placeholder(tf.float32, [None, 784])
        W = tf.Variable(tf.zeros([784, 10]))
        b = tf.Variable(tf.zeros([10]))
        y = tf.matmul(x, W) + b

        # Define loss and optimizer
        y_ = tf.placeholder(tf.float32, [None, 10])

        # Cross-entropy on the raw outputs of 'y', averaged across the batch
        cross_entropy = tf.reduce_mean(
            tf.nn.softmax_cross_entropy_with_logits(labels=y_, logits=y))
        train_step = tf.train.GradientDescentOptimizer(0.5).minimize(cross_entropy)

        sess = tf.InteractiveSession()
        tf.global_variables_initializer().run()

        # Train
        for _ in range(1000):
            batch_xs, batch_ys = mnist.train.next_batch(100)
            sess.run(train_step, feed_dict={x: batch_xs, y_: batch_ys})

        # Test trained model
        correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(y_, 1))
        accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))
        print(sess.run(accuracy, feed_dict={x: mnist.test.images,
                                            y_: mnist.test.labels}))


    if __name__ == '__main__':
        parser = argparse.ArgumentParser()
        parser.add_argument('--data_dir', type=str,
                            default='/tmp/tensorflow/mnist/input_data',
                            help='Directory for storing input data')
        FLAGS, unparsed = parser.parse_known_args()
        tf.app.run(main=main, argv=[sys.argv[0]] + unparsed)

Tomorrow, I'll take a crack at modifying this code, and see if I can get anything working.

Parkinson's Law states that "work expands so as to fill the time available for its completion", and I'm definitely experiencing this phenomenon during this final M2M challenge.

In particular, at the beginning of the month, I decided to extend this challenge into early November, rather than keeping it contained within a single month. I did this for a good reason, which I'll explain soon, but this extra time isn't exactly helping me.

Instead, I've simply adjusted my pace so that the work fits the extended timeline.

As a result, in the past week, especially since I'm currently visiting family, I've found it challenging to make focused progress for more than a few minutes each day.

So, in order to combat this slowing pace and start building momentum, I've decided to set an interim deadline: By Sunday, October 29, I must finish creating my chess algorithm and shift my focus fully to learning and practicing the algorithm.

This gives me one week to 1. build the machine learning model, 2. finish creating the full dataset, 3. test the trained model, 4. make further optimizations, 5. test the strength of the chess algorithm, and, in general, 6. validate or invalidate the approach.

Hopefully, with this interim deadline in place, I'll feel a greater sense of urgency and can overcome the friction of Parkinson's Law.

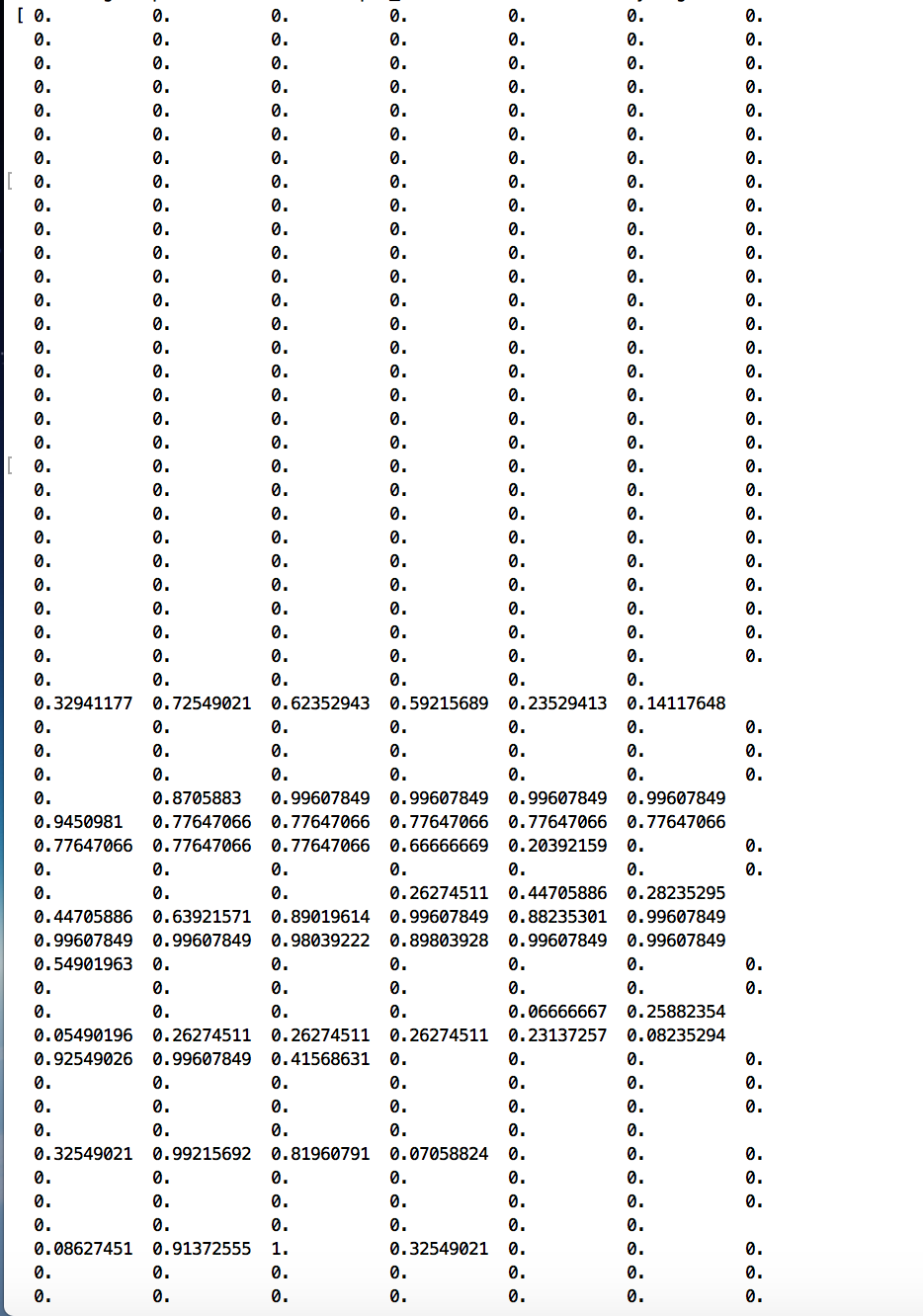

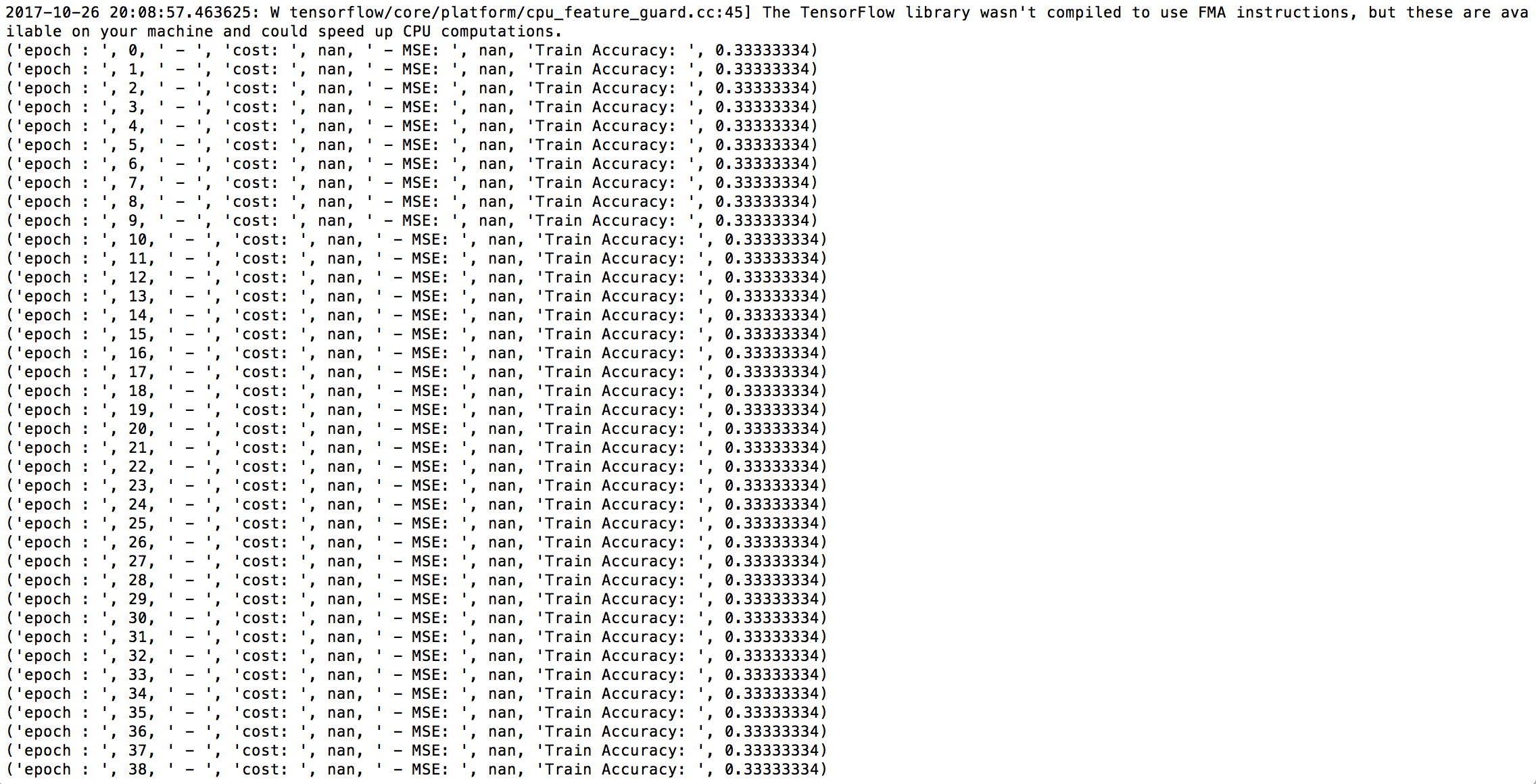

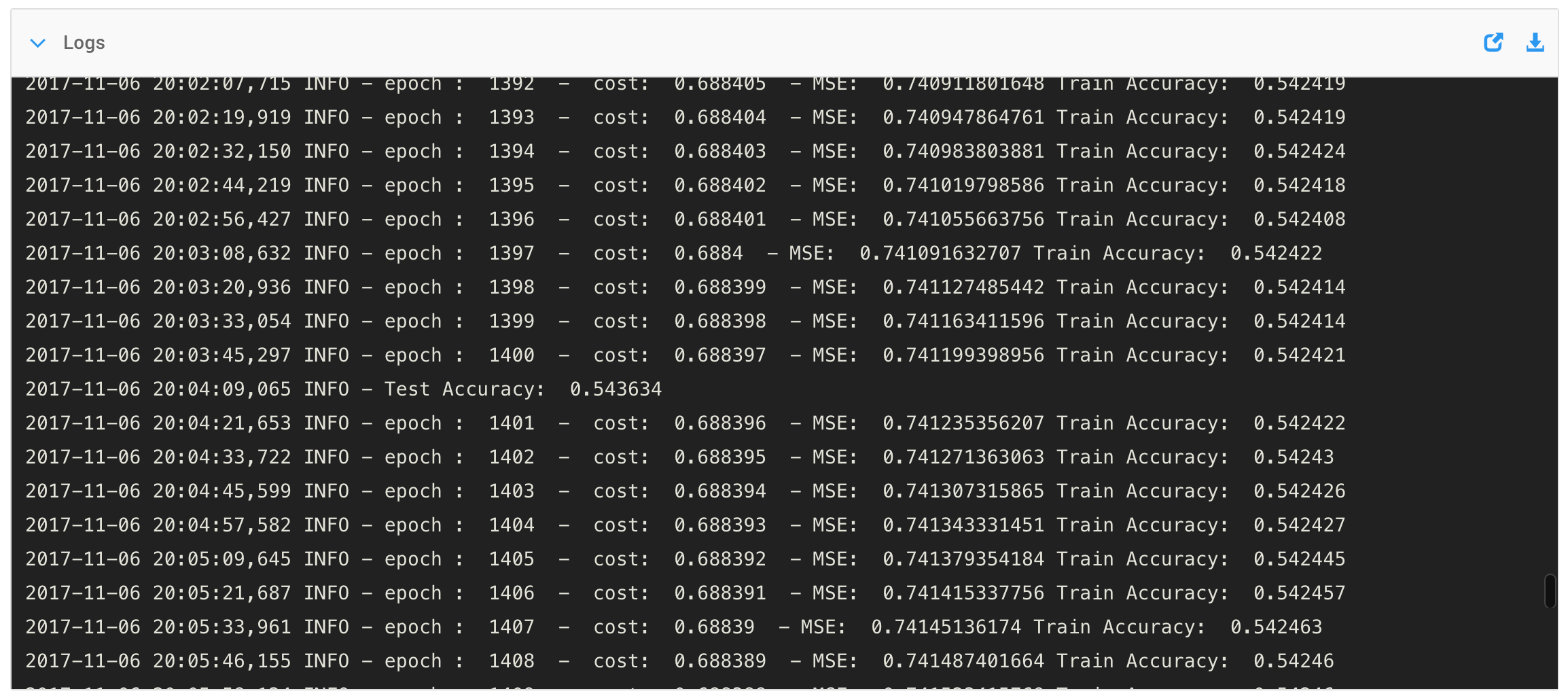

Two days ago, I found some code on GitHub that I should be able to modify to create my chess algorithm. Today, I will dissect the code line by line to ensure I fully understand it, preparing me to create the best plan for moving forward.