Don?t worry, it?s simpler than it sounds

Photo by Harry Sandhu on Unsplash

Photo by Harry Sandhu on Unsplash

If you?re new to computer science, you?ve probably seen a notation that looks something like O(n) or O(log n). That?s time complexity analysis or big-O notation!

It?s a super important concept to understand, at least on an intuitive level, in order to write fast code. There?s also space complexity. It defines how much memory a program might use but we?ll leave that for the next article.

This concept is one of the reasons higher education thinks it needs to make computer science undergrads take years of math classes. Now, I have nothing against math classes, but you definitely don?t need them to write good code.

The reality is that all the computer science concepts the average programmer needs to know are not hard to understand on their own. Much like code itself, the parts are simple, but these parts add up to something unfathomably complex.

What Is n?

?Time complexity is the computational complexity that describes the amount of time it takes to run an algorithm.? ? Wikipedia

That?s a pretty wordy definition so let?s break it down.

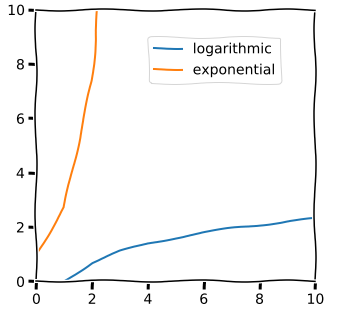

I?ll go over four important categories of big-O notation. There are others like n log n and n factorial but we?ll leave those out for now.

Let?s say we have a list of five letters:

[a,b,c,d,e]

Since there are five of them, n here would be equal to 5: n=5.

Constant time or O(1)

If your program wants to remove one letter from your list like this:

[a,b,c,d,e] => [a,b,c,d]

Its time complexity is simply 1 because it doesn?t matter how many letters are in the list, it will always take just one operation.

O(1) is the best possible time complexity! Data structures like hash tables make clever use of algorithms to pull off constant time operations and speed things up dramatically.

Linear time or O(n)

If your program does something like duplicate all the letters like so:

[a,b,c,d,e] => [aa,bb,cc,dd,ee]

For each letter, the letter is duplicated. The program goes through one at a time.

You could say that its runtime is Order n because the number of operations it has to do is proportional to how many letters are in your list. Does that say anything about how fast it will run in practice? Not really.

Your input could be two letters or it could be twelve billion. It could be running on an old laptop or a super computer and take a very different amount of time to run.

If the program is adding each number to itself three times instead of two, it will still be O(n) because even though your program is doing more operations per input, how much more is constant per input.

O(n) is a decent time complexity and you often can?t avoid it.

Order n squared or O(n)

Now, say you want your program to add every member of the list to each other.

[a,b,c,d,e] => [abcde, bacde, cabde, dabce, eabcd]

Since for each item in the list you have to also go through the whole rest of the list, the number of operations your program has to do is the number of inputs (or n) times itself (n squared).

If your list has two letters in it, your program will take four operations to run. If your list has four trillion letters, it may never finish running!

O(n) is almost never an acceptable time complexity and you can usually avoid it with a clever algorithm of some kind.

Logarithmic time or O(log n)

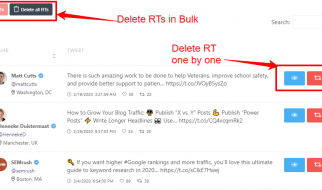

matplotlib xkcd style graphs!

matplotlib xkcd style graphs!

Looking at this figure, you can see how each of the four runtimes scale.

The orange quadratic spikes up super fast while the green constant stays the same no matter the input. But what about that blue logarithmic line? That looks almost as good as constant time!

Logarithms

?A quantity representing the power to which a fixed number (the base) must be raised to produce a given number.?? Lexico

If you haven?t thought about algebra since high school, you?ll want a refresher on just what the heck a logarithm is.

Here comes the math

Logarithms are used to solve for x when x is in the exponent. Look at this equation:

3^x == 9

The question logarithms answer is: ?What power do we raise 3 to to get 9?? The answer, of course, is 2!

log3(9) == 2

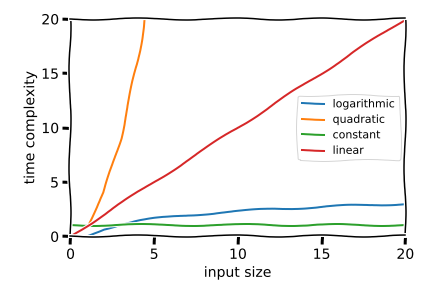

A logarithmic function is the opposite of an exponential function. When you say something grows exponentially, it?s being multiplied. When something grows logarithmically, it is being divided.

Look! They?re mirrored.

Look! They?re mirrored.

That means that if you have two list items to go through and your program runs in log base 2 of n time, it will take one operation to execute. That?s really powerful! It will take fewer operations to run than you have data.

The bigger the input, the smaller proportion of the actual input your program has to go through!

Logarithms in Practice

Looking at my list again:

[a,b,c,d,e]

If I want to search through it and find d, for example, maybe you?ll write a program that looks at each letter in order and returns the index (3) when it finds what it is looking for (d).

That search will take four operations. Not too bad, right? What if we were looking for e instead? Only five operations. Since that is the worst-case scenario and five is the length of the input, that search algorithm will be O(n).

It?s fine for a small list, but if we were searching a list with a billion elements for the element at the very end, this algorithm would take a long time.

Flamenco search

What if we could speed this up to O(log n)? Well, if the list is sorted, we can! I?ll let these flamenco dancers explain it:

Notice how the dancers are in a line with numbers on their backs. The man with the number seven on his back is looking for the woman who matches. He doesn?t know where she is but he knows all the ladies are in sorted order.

His process is to go to the dancer in the middle and ask her which side of her number seven is on. When she says: ?She?s to the left of me,? he can rule out everyone to her right.

Next, he asks the woman in the middle of the left side the same question. This lets him rule out another half of the candidates and so on until he finds number seven.

This program runs in O(log n) time because in the worst-case scenario, the number of operations it takes to run will be log base 2 of the input size. In this case, since there are seven dancers in our ?list?, the runtime is log2(7) or ~3 operations.

This algorithm is called a binary search because you narrow the search by two every operation. It?s the poster child for O(log n).

Thanks for Reading!

Hope that makes sense! If something is unclear, please leave a comment.