The Bayesian information criterion (BIC) is a criterion for selecting among a finite set of models. It is based, in part, on the likelihood function, and it is closely related to the Akaike information criterion (AIC).

When fitting models, it is possible to increase the likelihood by adding parameters, but doing so may result in overfitting. BIC addresses this problem by adding a penalty term that grows with the number of parameters in the model. The penalty is larger in BIC than in AIC whenever the sample contains at least 8 observations.

BIC has been widely used for model identification in time series and linear regression. It can, however, be applied quite widely to any set of maximum likelihood-based models.

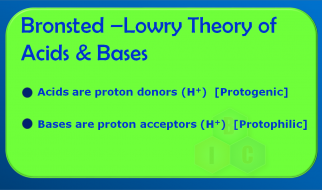

Mathematical Expression:

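Mathematically, BIC can be defined as:

$$\mathrm{BIC} = k \ln(n) - 2 \ln(\hat{L})$$

where $k$ is the number of estimated parameters, $n$ is the number of observations, and $\hat{L}$ is the maximized value of the model's likelihood function.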
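As a minimal sketch, the standard definition can be evaluated directly once a model's maximized log-likelihood is known. The function name `bic` and the example numbers below are illustrative assumptions, not taken from any particular library or dataset.

```python
import math


def bic(log_likelihood: float, k: int, n: int) -> float:
    """Return BIC = k * ln(n) - 2 * ln(L_hat).

    log_likelihood: maximized log-likelihood ln(L_hat) of the fitted model
    k: number of estimated parameters
    n: number of observations used to fit the model
    """
    if n <= 0:
        raise ValueError("n must be a positive number of observations")
    return k * math.log(n) - 2.0 * log_likelihood


# Hypothetical fitted model: 3 parameters, 100 observations,
# maximized log-likelihood of -120.
example_bic = bic(log_likelihood=-120.0, k=3, n=100)
print(f"BIC = {example_bic:.3f}")
```

The function takes the log-likelihood rather than the likelihood itself, because most fitting routines report it in that form and it avoids numerical underflow.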

Application & Interpretation:

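Candidate models can be compared using their BIC values. A lower BIC indicates a better balance between goodness of fit and model complexity, so the model with the lowest BIC is preferred. The comparison is only meaningful when all candidates are fitted to the same dataset.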
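To make the comparison concrete, here is a small sketch that fits two candidate models to the same synthetic data and ranks them by BIC. Everything in it is an assumption made for illustration: the data are simulated, both models assume Gaussian noise, and the helper names are hypothetical.

```python
import math
import random


def gaussian_log_likelihood(residuals):
    """Maximized Gaussian log-likelihood, using the MLE variance RSS / n."""
    n = len(residuals)
    sigma2 = sum(r * r for r in residuals) / n
    return -0.5 * n * (math.log(2.0 * math.pi * sigma2) + 1.0)


def bic(log_likelihood, k, n):
    return k * math.log(n) - 2.0 * log_likelihood


# Synthetic data (assumed): a linear trend plus unit-variance Gaussian noise.
rng = random.Random(0)
xs = [i / 10 for i in range(100)]
ys = [2.0 + 0.5 * x + rng.gauss(0.0, 1.0) for x in xs]
n = len(ys)

# Model A: constant mean. Parameters: mean, noise variance (k = 2).
mean_y = sum(ys) / n
residuals_a = [y - mean_y for y in ys]

# Model B: straight line fitted by ordinary least squares.
# Parameters: intercept, slope, noise variance (k = 3).
mean_x = sum(xs) / n
sxx = sum((x - mean_x) ** 2 for x in xs)
sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
slope = sxy / sxx
intercept = mean_y - slope * mean_x
residuals_b = [y - (intercept + slope * x) for x, y in zip(xs, ys)]

scores = {
    "constant": bic(gaussian_log_likelihood(residuals_a), 2, n),
    "linear": bic(gaussian_log_likelihood(residuals_b), 3, n),
}
best = min(scores, key=scores.get)  # the lowest BIC is preferred
print(scores, "->", best)
```

The linear model pays a larger penalty for its extra parameter, but on data with a real trend the gain in likelihood outweighs it, so it ends up with the lower BIC.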

Read also AIC statistics.

Though AIC and BIC are derived from different perspectives, they are closely related. The only difference between their formulas is the penalty term: AIC charges 2 per parameter, while BIC charges ln(n) per parameter, where n is the number of observations.

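For the same fitted model, BIC is higher than AIC whenever n is at least 8, because ln(n) then exceeds 2; for smaller samples it is lower. For both measures, the lower the value, the better the model.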
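The relationship between the two measures can be checked numerically. For the same fitted model, the gap is BIC - AIC = k (ln(n) - 2), so its sign depends only on the sample size. The sketch below uses a hypothetical log-likelihood and parameter count; only the sample size is varied.

```python
import math


def aic(log_likelihood, k):
    """AIC = 2k - 2 ln(L_hat)."""
    return 2.0 * k - 2.0 * log_likelihood


def bic(log_likelihood, k, n):
    """BIC = k ln(n) - 2 ln(L_hat)."""
    return k * math.log(n) - 2.0 * log_likelihood


# Hypothetical fitted model: the same log-likelihood and parameter count
# are reused for every n so that only the penalty terms differ.
log_likelihood, k = -50.0, 4

gaps = {
    n: bic(log_likelihood, k, n) - aic(log_likelihood, k)
    for n in (5, 7, 8, 100, 10_000)
}
for n, gap in gaps.items():
    print(f"n = {n:>6}: BIC - AIC = {gap:+.3f}")
```

The gap is negative for n = 5 and n = 7, turns positive at n = 8, and keeps growing with n, which is why BIC tends to favor smaller models than AIC on large datasets.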

