The simplest neural network (a threshold neuron) cannot learn, which is its major drawback. In the book "The Organization of Behavior", Donald O. Hebb proposed a mechanism for updating the weights between neurons in a neural network. This weight-update method enabled neurons to learn and was named Hebbian Learning.

Three major points were stated as part of this learning mechanism:

- Information is stored in the connections between neurons in neural networks, in the form of weights.

- The weight change between two neurons is proportional to the product of their activation values.

- As learning takes place, simultaneous or repeated activation of weakly connected neurons incrementally changes the strength and pattern of weights, leading to stronger connections.
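The second point above can be illustrated with a minimal sketch. It assumes a proportionality constant `eta` (a learning rate, not named in the original) and represents the activations of two small groups of neurons as NumPy vectors; the function name `hebbian_delta` is hypothetical.

```python
import numpy as np

def hebbian_delta(pre, post, eta=0.1):
    """Weight change for every (post, pre) pair: eta * post_j * pre_i.

    eta is an assumed learning rate; the text only states proportionality.
    """
    pre = np.asarray(pre, dtype=float)
    post = np.asarray(post, dtype=float)
    # The outer product gives one product of activations per connection.
    return eta * np.outer(post, pre)

pre = np.array([1.0, 0.0, 1.0])   # activations of three sending neurons
post = np.array([1.0, 0.5])       # activations of two receiving neurons
delta = hebbian_delta(pre, post)
print(delta)
```

Connections from the silent neuron (activation 0) receive no change, while the change on the other connections scales with both activations.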

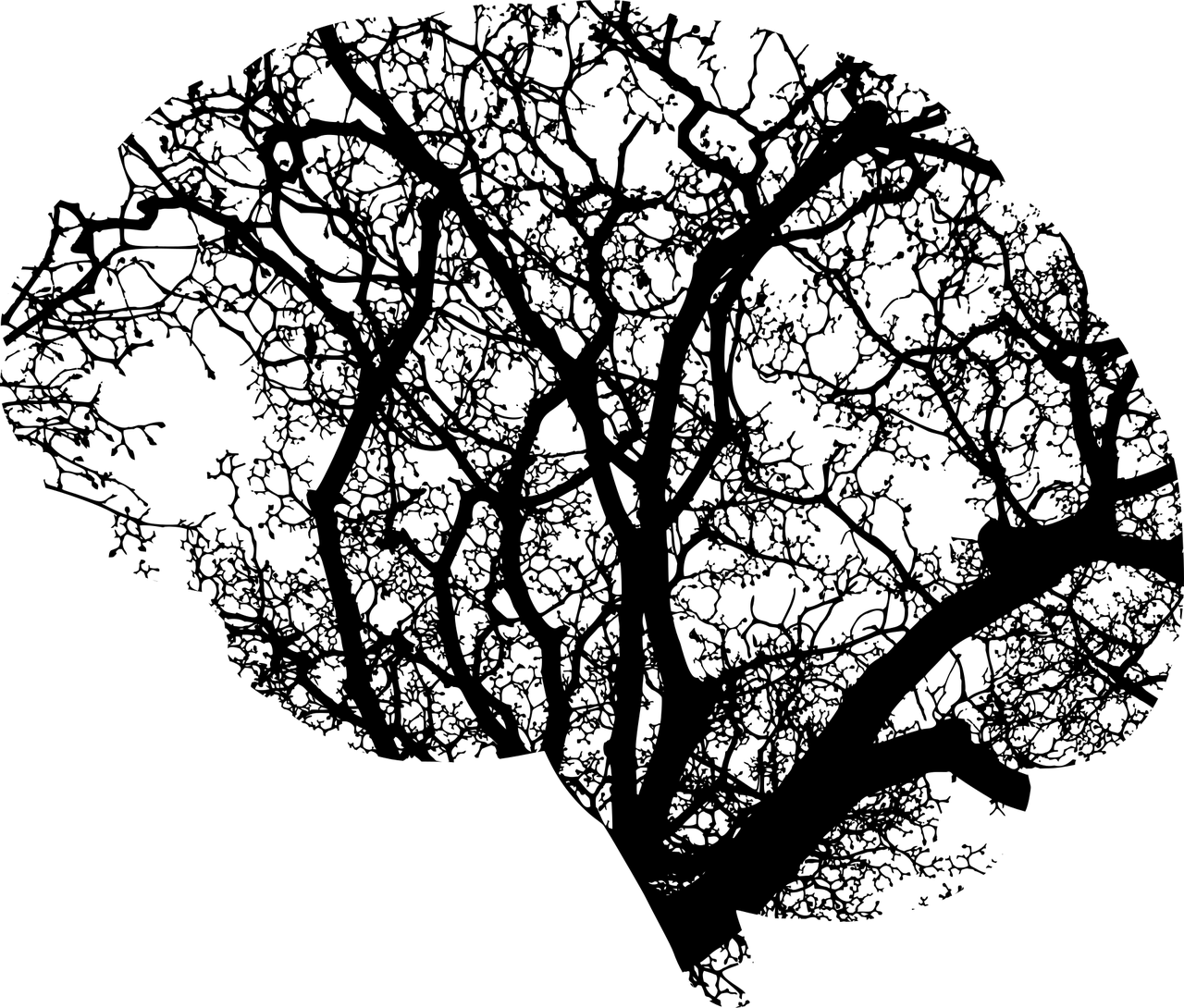

Neuron Assembly Theory

The repeated stimulus of weak connections between neurons leads to their incremental strengthening.

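The new weights are calculated by the equation:

$$w_{ij}^{\text{new}} = w_{ij}^{\text{old}} + \eta \, x_i \, x_j$$

where $x_i$ and $x_j$ are the activation values of the two connected neurons and $\eta$ is the learning rate.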
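The incremental strengthening of a weak connection can be sketched as a simple loop. This assumes the commonly used update form, new weight = old weight + `eta` * `x_pre` * `x_post`; the starting weight, learning rate, and step count are illustrative values, and `strengthen` is a hypothetical helper name.

```python
def strengthen(w, x_pre, x_post, eta=0.05, steps=10):
    """Apply the Hebbian update repeatedly and record the weight after each step."""
    history = [w]
    for _ in range(steps):
        # Each co-activation adds a small increment to the connection.
        w = w + eta * x_pre * x_post
        history.append(w)
    return history

# A weak connection (0.01) stimulated ten times with both neurons active.
history = strengthen(w=0.01, x_pre=1.0, x_post=1.0)
print(history[0], history[-1])
```

Under these assumptions the weight grows steadily with every repeated stimulus, which is the behaviour the theory describes.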

Inhibitory Connections

This is another kind of connection, one that has the opposite response to a stimulus. Here, the connection strength decreases with repeated or simultaneous stimuli.
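A minimal sketch of this weakening, assuming the inhibitory case simply flips the sign of the Hebbian increment (an assumption; the original does not give the formula). The name `inhibit` and the parameter values are hypothetical.

```python
def inhibit(w, x_pre, x_post, eta=0.05, steps=5):
    """Decrease a connection weight each time both neurons are active together."""
    for _ in range(steps):
        # Sign flipped relative to the excitatory update (assumed form).
        w = w - eta * x_pre * x_post
    return w

weakened = inhibit(w=0.5, x_pre=1.0, x_post=1.0)
unchanged = inhibit(w=0.5, x_pre=1.0, x_post=0.0)
print(weakened, unchanged)
```

When one of the two neurons is inactive, the product of activations is zero and the weight stays as it was.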

For detailed information on such neural networks, one should consider reading this.

Implementation of Hebbian Learning in a Perceptron

In the 1950s, Frank Rosenblatt inferred that a threshold neuron cannot be used for modeling cognition, as it cannot learn or adapt from the environment, or develop capabilities for classification, recognition, or similar tasks.

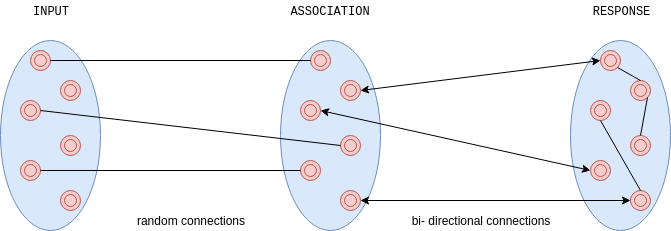

A perceptron draws inspiration from a biological visual neural model with three layers, as follows:

- The input layer is analogous to the sensory cells in the retina, with random connections to neurons of the succeeding layer.

- The association layer has threshold neurons with bi-directional connections to the response layer.

- The response layer has threshold neurons that are interconnected with each other for competitive inhibitory signaling.

Response layer neurons compete with each other by sending inhibitory signals to produce output. Threshold functions are set at the origin for the association and response layers. This forms the basis of learning between these layers. The goal of the perceptron is to activate the correct response neurons for each input pattern.
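The learning between the association and response layers can be sketched roughly as follows. This is a simplified illustration, not Rosenblatt's exact model: competition between response neurons is approximated by picking the neuron with the largest input (`argmax`), there is no bias term so the threshold sits at the origin, and the error-driven update, the toy data, and the names `train_perceptron` and `predict` are all assumptions.

```python
import numpy as np

def train_perceptron(X, labels, n_classes, eta=1.0, epochs=20):
    """Train response-layer weights with a Hebbian-style, error-driven update."""
    W = np.zeros((n_classes, X.shape[1]))  # one weight row per response neuron
    for _ in range(epochs):
        errors = 0
        for x, target in zip(X, labels):
            # Competition stand-in: the most strongly driven neuron wins.
            winner = int(np.argmax(W @ x))
            if winner != target:
                W[target] += eta * x   # strengthen the correct response
                W[winner] -= eta * x   # weaken the wrongly active response
                errors += 1
        if errors == 0:  # every pattern activates the correct neuron
            break
    return W

def predict(W, x):
    """Return the index of the winning response neuron for input x."""
    return int(np.argmax(W @ x))

# Toy input patterns (assumed): two classes, linearly separable.
X = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 0.2], [0.1, 1.0]])
labels = np.array([0, 1, 0, 1])
W = train_perceptron(X, labels, n_classes=2)
predictions = [predict(W, x) for x in X]
print(predictions)
```

Weights change only when the wrong response neuron wins, so training stops once each input pattern activates its correct response neuron.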

Conclusion

Hebbian Learning is inspired by the biological mechanism of neural weight adjustment. It describes a method that turns a neuron unable to learn into one that can, enabling it to develop cognition in response to external stimuli. These concepts are still the basis for neural learning today.