Recently at work I started facing a weird problem: the server became slow and connections to other servers were intermittent. There were lots of different kinds of log messages, which left me scratching my head. During log analysis, one error message caught my attention.

"Too many open files". What, too many open files? My application was not creating or accessing any files, so why was WebLogic complaining about too many open files?

After a quick Google search, I realized that on Linux, socket connections are treated as files: every socket a process creates uses up a file descriptor, just like an open file does. My WebLogic Java process was creating a lot of socket connections, so it hit the maximum number of open files a single process is allowed to have.
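To see this for yourself, here is a small Python sketch (Python is only my choice for illustration; everything else in this post uses shell commands). It counts the process's open descriptors by listing `/dev/fd`, which exists on both Linux and macOS, then opens one regular file and three TCP sockets and counts again. The helper name `count_open_fds` is hypothetical.

```python
import os
import socket
import stat


def count_open_fds() -> int:
    """Count this process's open file descriptors by listing /dev/fd.

    The directory handle opened by listdir is itself included, but it is
    included on every call, so the difference between two calls is exact.
    """
    return len(os.listdir("/dev/fd"))


before = count_open_fds()

# One regular file plus three (unconnected) TCP sockets.
regular_file = open(os.devnull, "rb")
sockets = [socket.socket(socket.AF_INET, socket.SOCK_STREAM) for _ in range(3)]

after = count_open_fds()
print(f"open descriptors before: {before}, after: {after}")

# A socket's fileno() is an ordinary descriptor; fstat reports its type as socket.
is_socket = [stat.S_ISSOCK(os.fstat(s.fileno()).st_mode) for s in sockets]
print("sockets report S_ISSOCK:", is_socket)

for s in sockets:
    s.close()
regular_file.close()
```

The file and the three sockets are drawn from the same per-process descriptor table, so all four count against the same "open files" limit.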

How could I check and verify that, and how could I find out the max open file limit? A bit more research answered all of these questions.

How to find the max open file limit

Linux puts default limits on the resources a process can use, so that a single process cannot eat up all the resources of the system and destabilize it. These limits can be viewed with the `ulimit` command.

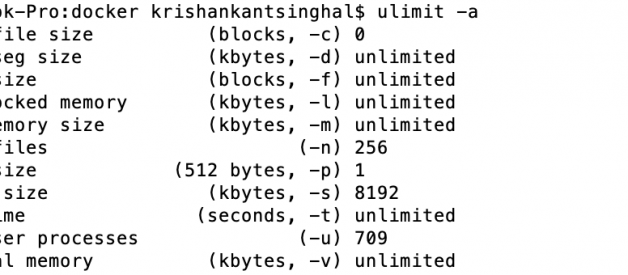

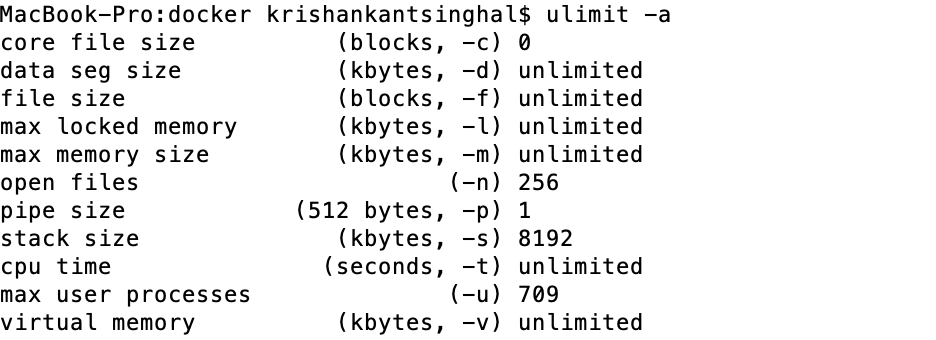

So on linux terminal , if we type ulimit -a , it will provide all resource constraint. for example on my mac when i run this command i get below output.

In the "open files" row of that output the value is 256, so by default a process can hold at most 256 file descriptors (files it has opened plus socket connections). This limit can also be checked with the `ulimit -n` command. The limit is further divided into a soft limit and a hard limit.

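Soft Limit: the soft limit is the value the kernel actually enforces. A process cannot open more descriptors than its soft limit; once it reaches it, further attempts fail with "Too many open files". However, a process can raise its own soft limit at any time, up to the hard limit, without special privileges.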

The soft limit can be checked using `ulimit -S -n`, where `-S` selects the soft limit and `-n` the open file limit.

Hard Limit: the hard limit is a full stop for a process. The process can never open more descriptors than this, because its soft limit cannot be raised above the hard limit, and only root can raise the hard limit itself. It can be checked using `ulimit -H -n`.
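A process can also read these two numbers itself. In this Python sketch (again just for illustration), `resource.getrlimit(resource.RLIMIT_NOFILE)` returns a `(soft, hard)` tuple, the same pair that `ulimit -S -n` and `ulimit -H -n` print in the shell. The helper `describe` is a hypothetical name used only to format the output.

```python
import resource


def describe(limit: int) -> str:
    # An unlimited value is reported as resource.RLIM_INFINITY.
    return "unlimited" if limit == resource.RLIM_INFINITY else str(limit)


# RLIMIT_NOFILE is the limit behind the "open files" row of `ulimit -a`.
soft, hard = resource.getrlimit(resource.RLIMIT_NOFILE)
print("soft limit (ulimit -S -n):", describe(soft))
print("hard limit (ulimit -H -n):", describe(hard))
```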

In my case, the WebLogic server had a soft limit of 1024 and a hard limit of 4096. My process needed more than that (over 1024 files opened by WebLogic plus over 3,000 open socket connections), so I was getting the "Too many open files" error.
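The error is easy to reproduce on a small scale. The Python sketch below (Linux or macOS) lowers this process's own soft limit to a value just above the number of descriptors it already has open, then creates sockets until the kernel refuses with `EMFILE`, the error whose message is "Too many open files". The headroom of 10 is an arbitrary value chosen for the demo, and the original limits are restored at the end.

```python
import errno
import os
import resource
import socket

soft, hard = resource.getrlimit(resource.RLIMIT_NOFILE)
in_use = len(os.listdir("/dev/fd"))

# Lower only the soft limit; lowering a limit never needs root.
resource.setrlimit(resource.RLIMIT_NOFILE, (in_use + 10, hard))

opened = []
error = None
try:
    while True:
        opened.append(socket.socket(socket.AF_INET, socket.SOCK_STREAM))
except OSError as exc:
    error = exc
finally:
    for s in opened:
        s.close()
    # Restore the original soft limit (allowed, since it is still <= hard).
    resource.setrlimit(resource.RLIMIT_NOFILE, (soft, hard))

print(f"opened {len(opened)} sockets before failing")
print(f"error: {errno.errorcode[error.errno]} ({error.strerror})")
```

The failure happens at the soft limit, not the hard one, which is exactly what my WebLogic process ran into at 1024.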

To fix this issue, we first have to check why so many connections are being created. But for a normal high-load application, creating 3-4K connections is not a big deal, so we have to increase the limit.
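Before raising the limit, it helps to know what the descriptors actually are. On Linux, every open descriptor of a process appears as a symbolic link under `/proc/<pid>/fd`, and sockets show up as links named like `socket:[inode]`. The Python sketch below groups those links by kind. The function names `classify` and `fd_breakdown` are hypothetical, and inspecting another user's process (such as the WebLogic JVM) needs that user's or root's permissions. From the shell, `ls -l /proc/<pid>/fd` or `lsof -p <pid>` shows the same information.

```python
import os
from collections import Counter


def classify(target: str) -> str:
    # Anonymous kernel objects look like "socket:[12345]", "pipe:[6789]" or
    # "anon_inode:[eventpoll]"; anything else is treated as a regular file path.
    if target.startswith("socket:"):
        return "socket"
    if target.startswith("pipe:"):
        return "pipe"
    if target.startswith("anon_inode:"):
        return "anon_inode"
    return "file"


def fd_breakdown(pid: int) -> Counter:
    """Count the open descriptors of `pid` by kind (Linux only)."""
    fd_dir = f"/proc/{pid}/fd"
    counts: Counter = Counter()
    for name in os.listdir(fd_dir):
        try:
            counts[classify(os.readlink(os.path.join(fd_dir, name)))] += 1
        except FileNotFoundError:
            # The descriptor was closed between listdir and readlink.
            continue
    return counts


if __name__ == "__main__":
    print(fd_breakdown(os.getpid()))
```

A large "socket" count with a small "file" count points at connection handling (for example, connections not being closed or pooled) rather than at file access.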

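The limit can be set using `ulimit -n <open count>`, for example `ulimit -n 8096`. But we have to remember one thing: `ulimit -n` on its own shows the soft limit, while `ulimit -n <open count>` sets both the hard and the soft limit. So if your hard limit is 8096 and you run `ulimit -n 4096`, both your hard and soft limits become 4096 for that shell session, and as a non-root user you cannot raise the hard limit back. Likewise, a non-root user cannot set a value above the current hard limit, so with a hard limit of 4096, `ulimit -n 8096` needs root privileges. The new limit applies only to that shell and the processes started from it, so WebLogic has to be started from that same session. So we have to be a little careful.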
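Because an unprivileged process may raise its soft limit up to, but not beyond, its hard limit, a program can also do this for itself at startup. The Python sketch below behaves like `ulimit -S -n`: it raises only the soft limit toward a requested value (8096, taken from the example above), caps it at the hard limit, and leaves the hard limit untouched. The function name `raise_soft_nofile` is hypothetical. As with `ulimit`, the change affects only this process and the processes it starts.

```python
import resource


def raise_soft_nofile(wanted: int) -> int:
    """Raise the soft open-file limit toward `wanted`, never above the hard limit.

    Only the soft limit changes; the hard limit is passed back unchanged, so
    nothing is lost (unlike plain `ulimit -n`, which sets both).
    Returns the resulting soft limit.
    """
    soft, hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    target = wanted if hard == resource.RLIM_INFINITY else min(wanted, hard)
    # Never lower an existing limit, and leave an unlimited soft limit alone.
    if soft != resource.RLIM_INFINITY and target > soft:
        resource.setrlimit(resource.RLIMIT_NOFILE, (target, hard))
    return resource.getrlimit(resource.RLIMIT_NOFILE)[0]


print("soft limit is now", raise_soft_nofile(8096))
```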