Today I will compare discriminative and generative models, using the Naive Bayes classifier as the generative model and logistic regression as the discriminative model.

Before going into details I will briefly describe the two techniques.

Naive Bayes Classifier:

Naive Bayes is a linear classifier that uses Bayes' theorem together with a strong independence assumption among the features. Given a data set with n features represented by

$$F_1, F_2, \dots, F_n$$

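Naive Bayes states that the probability of the output Y given the features F_i is

$$P(Y \mid F_1, \dots, F_n) \propto P(Y) \prod_{i=1}^{n} P(F_i \mid Y)$$

This requires that the features F_i are conditionally independent given Y. It follows from Bayes' theorem:

$$P(Y \mid F_1, \dots, F_n) = \frac{P(Y)\, P(F_1, \dots, F_n \mid Y)}{P(F_1, \dots, F_n)}$$

Under conditional independence the likelihood factorizes into the product of the individual P(F_i | Y), and the denominator does not depend on Y.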

Let us look at an example: You have a database of emails.

80% of the emails are spam:

75% of them have the word "buy", 40% of them have the word "win"

20% of the emails are not spam:

12% of them have the word "buy", 7% of them have the word "win"

Given an email containing both "buy" and "win", we want to find the probability that the email is spam. Using Naive Bayes, we assume that the features "win" and "buy" are conditionally independent given the class.

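$$P(\text{spam} \mid \text{buy}, \text{win}) \propto P(\text{spam})\, P(\text{buy} \mid \text{spam})\, P(\text{win} \mid \text{spam}) = 0.80 \times 0.75 \times 0.40 = 0.24$$

and

$$P(\text{not spam} \mid \text{buy}, \text{win}) \propto P(\text{not spam})\, P(\text{buy} \mid \text{not spam})\, P(\text{win} \mid \text{not spam}) = 0.20 \times 0.12 \times 0.07 = 0.00168$$

This becomes:

$$P(\text{spam} \mid \text{buy}, \text{win}) = \frac{0.24}{0.24 + 0.00168} \approx 0.993$$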
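The spam example can be checked numerically. The following is a minimal Python sketch, not a full classifier: the function name `naive_bayes_posterior` and the dictionary layout are assumptions made for illustration, and the numbers are the ones stated in the example.

```python
def naive_bayes_posterior(priors, likelihoods, observed):
    """Return P(class | observed features) for every class.

    Assumes the observed features are conditionally independent given
    the class, so the joint likelihood is a product of per-feature terms.
    """
    joint = {}
    for label, prior in priors.items():
        p = prior
        for feature in observed:
            p *= likelihoods[label][feature]
        joint[label] = p
    total = sum(joint.values())  # normalising constant P(observed)
    return {label: p / total for label, p in joint.items()}


# Numbers taken from the email example.
priors = {"spam": 0.80, "not_spam": 0.20}
likelihoods = {
    "spam": {"buy": 0.75, "win": 0.40},
    "not_spam": {"buy": 0.12, "win": 0.07},
}

posterior = naive_bayes_posterior(priors, likelihoods, ["buy", "win"])
print(f"P(spam | buy, win) = {posterior['spam']:.4f}")
```

The normalisation step divides by the sum over both classes, so the two posteriors always add up to 1.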

Logistic Regression:

Logistic regression is mainly used in cases where the output is boolean. Multi-class logistic regression can be used for outcomes with more than two values. Here we will delve into the boolean case. We consider features

$$F_1, F_2, \dots, F_n$$

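and an outcome Y which can take two values, say {0,1}. The probability of the output is assumed to follow a parametric model given by the sigmoid function, with weights w_0, w_1, ..., w_n:

$$P(Y = 1 \mid F) = \frac{1}{1 + \exp\left(-\left(w_0 + \sum_{i=1}^{n} w_i F_i\right)\right)}$$

$$P(Y = 0 \mid F) = \frac{\exp\left(-\left(w_0 + \sum_{i=1}^{n} w_i F_i\right)\right)}{1 + \exp\left(-\left(w_0 + \sum_{i=1}^{n} w_i F_i\right)\right)}$$

This gives us a simple linear expression for classification. We predict the outcome to be Y = 1 if

$$P(Y = 1 \mid F) > P(Y = 0 \mid F)$$

This reduces to:

$$w_0 + \sum_{i=1}^{n} w_i F_i > 0$$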
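The sigmoid model and the linear decision rule described above can be sketched in a few lines of Python. The weights and bias below are hypothetical values chosen only for illustration; in practice they would be learned from training data.

```python
import math


def sigmoid(z):
    """Logistic (sigmoid) function."""
    return 1.0 / (1.0 + math.exp(-z))


def linear_score(features, weights, bias):
    """Linear combination of the features: bias + sum_i w_i * F_i."""
    return bias + sum(w * f for w, f in zip(weights, features))


def prob_y1(features, weights, bias):
    """P(Y = 1 | features) under the logistic model."""
    return sigmoid(linear_score(features, weights, bias))


def predict(features, weights, bias):
    """Predict 1 exactly when the linear score is positive."""
    return 1 if linear_score(features, weights, bias) > 0 else 0


# Hypothetical parameters, not fitted to any data.
weights = [1.5, -2.0]
bias = 0.25

for features in ([1.0, 0.2], [0.1, 1.0]):
    p = prob_y1(features, weights, bias)
    label = predict(features, weights, bias)
    print(features, round(p, 3), label)
```

Thresholding the probability at 0.5 and checking the sign of the linear score give the same label, which is why the classifier is linear in the features.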

Logistic regression measures the relationship between a categorical output variable Y and one or more independent variables, which are usually (but not necessarily) continuous, by using probability scores as the predicted values of the dependent variable.

Generative vs Discriminative models:

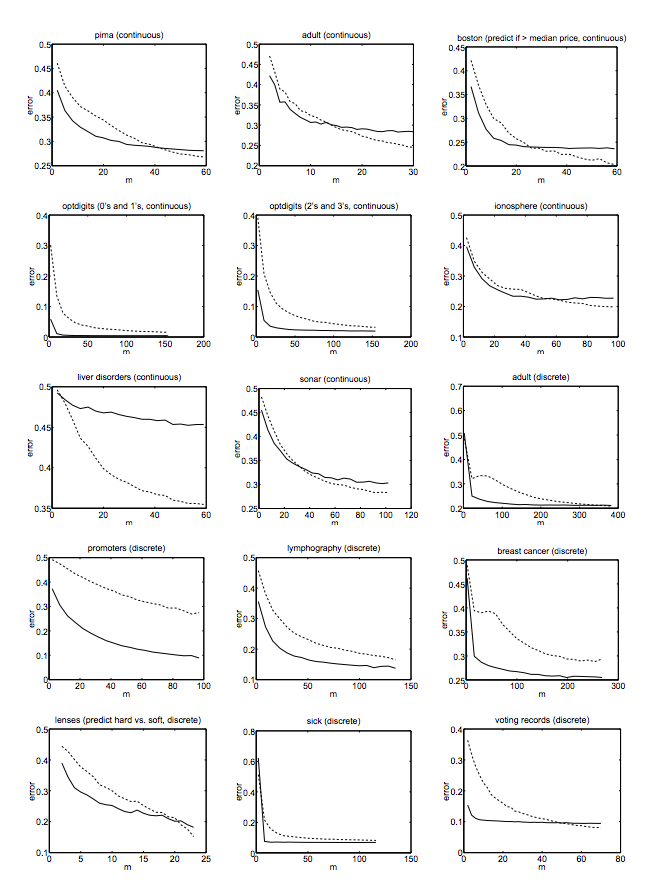

The paper "On Discriminative vs. Generative Classifiers: A comparison of logistic regression and naive Bayes" by Professor Andrew Ng and Professor Michael I. Jordan provides a mathematical analysis of the error properties of both models. They conclude that as the training set size goes to infinity, the discriminative model (logistic regression) reaches an asymptotic error at least as low as that of the generative model (Naive Bayes). However, the generative model approaches its asymptotic error faster: with n the number of features, Naive Bayes needs on the order of O(log n) training examples, while logistic regression needs on the order of O(n). In other words, Naive Bayes gets close to its asymptotic solution with fewer training examples than logistic regression. This behavior is illustrated by the experiments conducted by Ng & Jordan, where they ran predictions on 15 datasets from the UCI machine learning repository. In some cases the training sample was not large enough for logistic regression to win.
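To get a feel for this kind of comparison, here is a toy simulation in pure Python. It is only a sketch under strong assumptions and does not reproduce the paper's experiments: the data are synthetic binary features generated to satisfy the Naive Bayes independence assumption, and the sample sizes, learning rate, step count and function names are all arbitrary choices made for illustration.

```python
import math
import random


def sample(rng, n, d=10, p_on=0.7):
    """Draw n examples. Y is a fair coin; each binary feature is 1 with
    probability p_on when Y=1 and 1 - p_on when Y=0, independently given Y."""
    data = []
    for _ in range(n):
        y = rng.randint(0, 1)
        p = p_on if y == 1 else 1.0 - p_on
        data.append(([1 if rng.random() < p else 0 for _ in range(d)], y))
    return data


def fit_naive_bayes(train, d):
    """Bernoulli Naive Bayes with add-one (Laplace) smoothing."""
    count = {0: 0, 1: 0}
    ones = {0: [0] * d, 1: [0] * d}
    for x, y in train:
        count[y] += 1
        for i, v in enumerate(x):
            ones[y][i] += v
    prior = {c: (count[c] + 1) / (len(train) + 2) for c in (0, 1)}
    theta = {c: [(ones[c][i] + 1) / (count[c] + 2) for i in range(d)]
             for c in (0, 1)}

    def predict(x):
        score = {}
        for c in (0, 1):
            s = math.log(prior[c])
            for i, v in enumerate(x):
                s += math.log(theta[c][i] if v else 1.0 - theta[c][i])
            score[c] = s
        return 1 if score[1] > score[0] else 0

    return predict


def fit_logistic(train, d, lr=0.5, steps=200):
    """Unregularized logistic regression fitted by batch gradient descent."""
    w, b = [0.0] * d, 0.0
    n = len(train)
    for _ in range(steps):
        gw, gb = [0.0] * d, 0.0
        for x, y in train:
            z = b + sum(wi * xi for wi, xi in zip(w, x))
            err = 1.0 / (1.0 + math.exp(-z)) - y
            gb += err
            for i, xi in enumerate(x):
                gw[i] += err * xi
        b -= lr * gb / n
        w = [wi - lr * gi / n for wi, gi in zip(w, gw)]
    return lambda x: 1 if b + sum(wi * xi for wi, xi in zip(w, x)) > 0 else 0


def accuracy(predict, data):
    return sum(predict(x) == y for x, y in data) / len(data)


def learning_curve(sizes=(10, 50, 500), d=10, seed=0):
    """Test accuracy of both models for increasing training set sizes."""
    rng = random.Random(seed)
    test = sample(rng, 1000, d)
    rows = []
    for m in sizes:
        train = sample(rng, m, d)
        rows.append((m,
                     accuracy(fit_naive_bayes(train, d), test),
                     accuracy(fit_logistic(train, d), test)))
    return rows


for m, nb_acc, lr_acc in learning_curve():
    print(f"m={m:4d}  naive Bayes={nb_acc:.3f}  logistic={lr_acc:.3f}")
```

Because the synthetic data match the Naive Bayes assumption exactly, this setup favors Naive Bayes. On real data, where the assumption only roughly holds, the picture at large training sizes can differ.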

This experiment was done for a pure logistic regression model. In practice, regularization is usually added to push the model toward smaller parameter values, which departs from the purely discriminative behavior of the model. Understanding the discriminative vs generative behavior of models helps us make better decisions when choosing hybrid models.
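The shrinking effect of regularization can be seen on a tiny example. The sketch below assumes an L2 penalty, which is one common choice; the data set, penalty strength, learning rate and step count are made-up values for illustration only.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic_1d(xs, ys, l2=0.0, lr=0.1, steps=2000):
    """Gradient descent on the mean log-loss plus (l2 / 2) * w**2
    for a single feature. The bias term is not penalised."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        errors = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]
        grad_w = sum(e * x for e, x in zip(errors, xs)) / n + l2 * w
        grad_b = sum(errors) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Made-up, perfectly separable data: negative x -> 0, positive x -> 1.
xs = [-2.0, -1.0, -0.5, 0.5, 1.0, 2.0]
ys = [0, 0, 0, 1, 1, 1]

w_plain, _ = fit_logistic_1d(xs, ys)
w_reg, _ = fit_logistic_1d(xs, ys, l2=0.1)
print(f"unregularized w = {w_plain:.3f}, L2-regularized w = {w_reg:.3f}")
```

On separable data like this the unregularized weight keeps growing with more gradient steps, while the penalised weight settles at a smaller finite value.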

Naive Bayes also assumes that the features are conditionally independent. Real data sets are never perfectly independent, but they can be close. In short, Naive Bayes has a higher bias but lower variance compared to logistic regression. If the data set is consistent with that bias, that is, if the independence assumption roughly holds, then Naive Bayes will be a better classifier. Both Naive Bayes and logistic regression are linear classifiers: logistic regression predicts the probability using a direct functional form, whereas Naive Bayes models how the data was generated given the outcome.
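The statement that Naive Bayes is a linear classifier can be made concrete for binary features. As a sketch, assume each F_i takes values in {0,1}, and write \theta_{i1} = P(F_i = 1 | Y = 1) and \theta_{i0} = P(F_i = 1 | Y = 0); these symbols are introduced here for illustration only. The log-odds of the Naive Bayes posterior are then

$$\log \frac{P(Y = 1 \mid F)}{P(Y = 0 \mid F)} = \log \frac{P(Y = 1)}{P(Y = 0)} + \sum_{i=1}^{n} \left[ F_i \log \frac{\theta_{i1}}{\theta_{i0}} + (1 - F_i) \log \frac{1 - \theta_{i1}}{1 - \theta_{i0}} \right] = w_0 + \sum_{i=1}^{n} w_i F_i$$

with

$$w_i = \log \frac{\theta_{i1} (1 - \theta_{i0})}{\theta_{i0} (1 - \theta_{i1})}, \qquad w_0 = \log \frac{P(Y = 1)}{P(Y = 0)} + \sum_{i=1}^{n} \log \frac{1 - \theta_{i1}}{1 - \theta_{i0}}$$

So in this setting Naive Bayes predicts Y = 1 exactly when a linear function of the features is positive, which is the same form of decision rule as logistic regression. The two models differ in how the weights are obtained: Naive Bayes derives them from estimated class-conditional probabilities, while logistic regression fits them directly.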

Originally published at deblivingdata.net.