Decimal Vs Float

- DECIMAL and FLOAT both are used to store numerical values.

- Float is Approximate-number data type, which means that not all values in the data type range can be represented exactly , it round up the values.

where as Decimal is Fixed-Precision data type, which means that all the values in the data type range can be represented exactly with precision and scale, it doesn?t round up the values.

- Syntax:

1) Decimal( Precision, Scale)

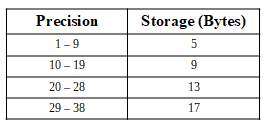

Here, Precision Means the total number of digits, both before and after the decimal point. The precision has a range from 1 to 38. The default precision is 38.

and Scale is the number of digits after the decimal point.Scale can only be specified if Precision is specified. The scale must always be less than or equal to the precision.

Storage considerations with Decimal Data Type:

2)Float(n)

In this case, the n indicates if the field will take up 4 or 8 bytes. The float data type supports values in these ranges -1.79E+308 to -2.23E-308, 0 and 2.23E-308 to 1.79E+308.

If the number of characters in the scientific notation of the value is between 1 to 24 the precision is 7 digits and the storage is 4 bytes. If the number of characters in the scientific notation of the value is between 25 to 53 the precision is 15 digits and the storage is 8 bytes.

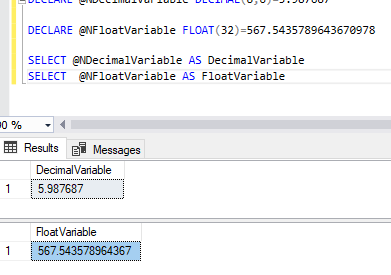

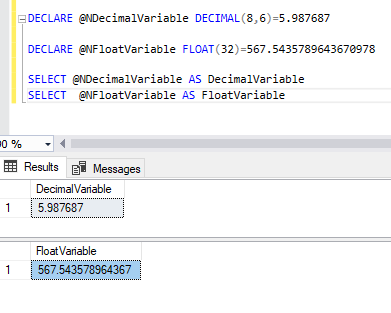

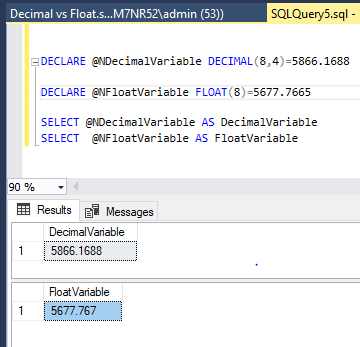

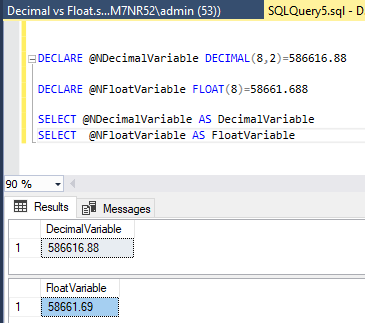

- Example:

- In SQL Server DECIMAL(8,4) and DECIMAL(8,2) are different data types. This means that 5866.1688 and 586616.88 are different types But in case of float FLOAT(8) is may be like this 5677.7665 or may be like this 58661.688 both are same data types.

- Float is a single precision (32 bit) floating point data type and decimal is a 128-bit floating point data type.

- Floating point data type represent number values with fractional parts.

- Decimal accurately represent any number within the precision of the decimal format, whereas Float cannot accurately represent all numbers.

- Decimal used within financial applications that require a high degree of accuracy and easy to avoid rounding errors whereas Float used when you stores scientific numbers and for better performance.

- Performance of Decimals is slower than and float data types.

If you are new to SQL refer Below video for better Understanding.